Google Introduces New LLM Development Method: Faster, Stronger, and Cheaper

Since OpenAI launched GPT-3 in 2022 – the platform behind ChatGPT – large language models (LLMs) have taken the world by storm. They are widely used in many fields, from coding to search. However, the process of generating feedback (called inference ) is quite slow and computationally expensive. As more and more people use LLMs, speeding up, reducing costs while ensuring quality becomes a matter of survival for developers.

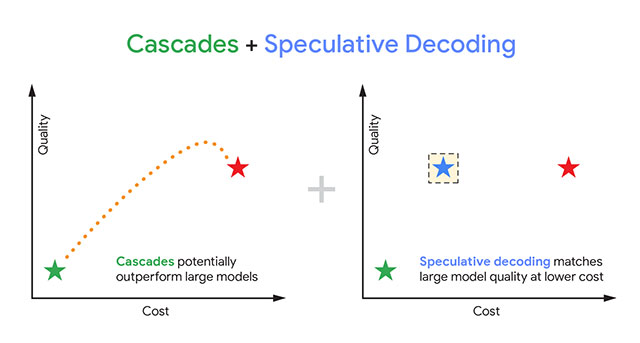

There are currently two methods that were hoped to solve this problem: cascades and speculative decoding .

- Cascades : use a small, faster model to process first, then switch to a larger model if needed. This saves on computation costs but has the disadvantage of having to 'wait' for the small model to decide. If it is uncertain, the response time is still prolonged and the quality of the answer is subject to fluctuations.

- Speculative decoding : a small model acts as a 'draft', predicting tokens in parallel. The larger model then quickly verifies the results. This method prioritizes speed but is quite strict: if just 1 token is wrong, the entire draft is discarded, even if most of the answers are correct. This sometimes causes the speed advantage to be lost and the computational cost does not decrease as expected.

Clearly, both approaches have their limitations. So Google Research has developed a new hybrid approach called speculative cascades. The core of this is a flexible delay rule that can decide to accept the results of the small model or switch to the larger model depending on the situation. This avoids the 'waiting' bottleneck of cascades and escapes the 'reject all drafts' stricture of speculative decoding.

In other words, even if the small model gives an answer that does not match the large model, the system can still accept it if it is a reasonable answer.

Google Research tested this method on models like Gemma and T5 for a variety of language tasks: text summarization, inference, and coding. The results showed that speculative cascades outperformed traditional methods in terms of cost-to-performance and speed. In many cases, they produced correct answers faster than speculative decoding.

Right now, this is just lab research. But if successful and implemented in the real world, users will have the opportunity to experience LLM that is faster, more powerful, and significantly cheaper.