Stop using Perplexity! Your local LLM does everything better.

Many people have been paying $20/month for Perplexity AI Pro for almost a year now. They find that price reasonable because they get real-time web search capabilities, citation sources, and a polished web interface, making research easier. But considering there are applications that allow anyone to enjoy the benefits of a local LLM , many find they can replace Perplexity with a local LLM system for most of their tasks.

This isn't a complete rejection of cloud services. Perplexity still excels at real-time web search and instant multi-source aggregation. But when it comes to everyday tasks—reviewing code, writing documentation, analyzing data, troubleshooting technical issues—local setups deliver faster, more private, and increasingly efficient results without costing a dime.

Reasons for establishing a local LLM system.

The journey to exploring local LLM systems begins with Ollama . This is an open-source tool that has become the standard for running local LLM systems. Installation on Windows only takes a few minutes. Then, combine it with LM Studio as your graphical user interface (GUI), although you can also use it as a standalone AI application. There are many other applications you can use to enjoy the benefits of local AI, so choose the one you prefer.

The hardware isn't top-of-the-line either. Some people are using laptops with RTX 4060 8GB graphics cards, 16GB of LPDDR5X memory, and an Intel Core Ultra 7 processor. This hardware won't give you instant results or allow you to run high-end models, but it's sufficient to run models like the Qwen 2.5 Coder 32B quite well.

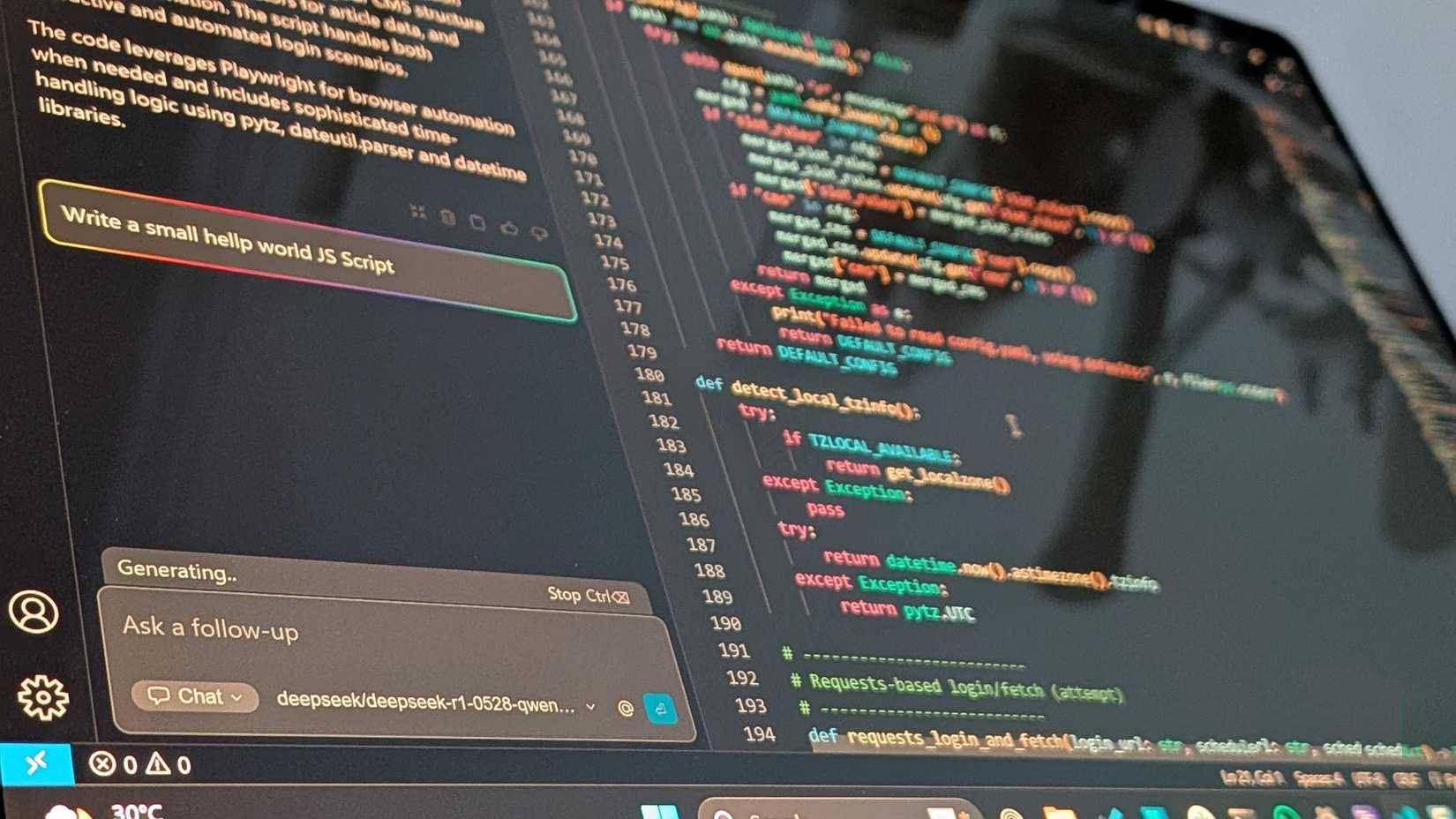

Incidentally, many people are using the aforementioned model quantized to 4-bit precision. It runs smoothly on 8 GB of VRAM and generates code at a rate of 25 to 30 tokens per second. It supports Python , VBA, PowerShell , and almost any other programming language you need. The model can also interpret legacy code with a 128k-token context window.

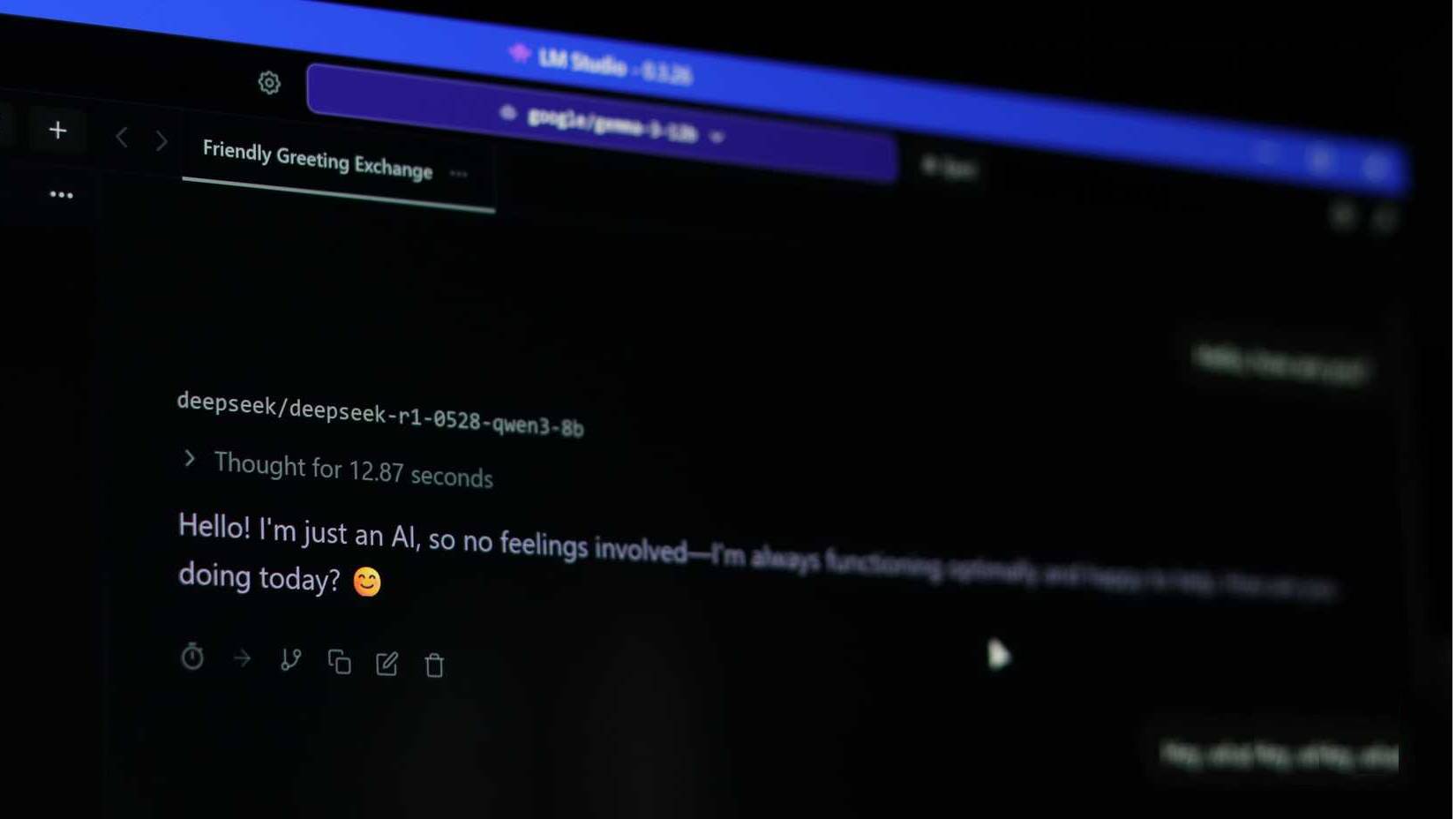

For typical tasks, alternate between the Llama 3.1 70B and the optimized DeepSeek R1 models. The quality gap has also narrowed significantly. This means you can achieve GPT-4 performance on consumer hardware at a much lower cost than self-hosted cloud computing.

Where local LLM models are completely superior to Perplexity.

Privacy, control, and unlimited speed.

Privacy is an immediate benefit. Every line of proprietary code resides on your machine. There are no third-party service logs for queries. For industries with data storage requirements such as healthcare, law, and finance, this eliminates the hassles of HIPAA or GDPR compliance.

Cost also plays a big role. For example, a person's laptop might cost around $1,600 when new. That's 80 months of Perplexity Pro, and it allows you to do almost everything else for the same price. You can also run queries that would cost hundreds of dollars per month using cloud APIs.

Offline capability might sound trivial until needed. You can access your AI anytime, anywhere, without a stable internet connection . You won't have connection issues, Wi-Fi speed limits, or data usage restrictions.

Performance is not a miracle.

The pros, cons, and GPU-intensive aspects.

Local LLMs are slower in absolute terms. Qwen version 2.5 generates between 25 and 30 tokens per second, nearly half the speed of cloud-based GPT-4.

But for specific workflows, this rarely matters. When reviewing code or drafting documentation, don't wait for the model. The bottleneck here is your comprehension, not token generation. A 25-token response per second to a 500-token explanation will cause local LLMs to take several minutes longer than usual.

Latency also tells a different story. Cloud services can cause delays in terms of network costs. Local AI, on the other hand, gets the job done instantly. For interactive programming support with rapid iterative capabilities, that responsiveness is fantastic. These are also the benefits you get when creating a local programming AI for VS Code.

Areas where Perplexity has a clear advantage

Direct web search is what you'll be missing the most.

Real-time web search remains Perplexity's best feature. When you need current regulatory requirements, recent API documentation, or aggregators with citations, it's all in seconds. You can use many free chatbots to avoid paying for AI, but Perplexity is hard to replace.

Local LLM systems can perform web searches with open WebUI integration, but setup complexity increases significantly. Perplexity also handles multimodal tasks better, supporting image analysis and document processing via GPT-4 Vision and Claude 3. Local setups work well with text but require separate tools for images, depending on your media.

The issue of perplexity needs to be addressed. Perplexity can provide inaccurate information despite citations. Local LLM systems also suffer from perplexity, but without real-time fact-checking, error detection requires greater vigilance.