Why do many people regret ignoring this ChatGPT feature for so long?

ChatGPT today is not what it started out as. It began as a productivity tool but was slowly demoted to a 'chatbot,' and a chatbot exists to chat. That's the difference. OpenAI's focus shifted, and since then ChatGPT has been obsessed with conversing and keeping users engaged. No matter how many times people asked it to stop prompting for more interaction, stop mimicking their tone, and stop complimenting them, it wouldn't stop. It remembered the instructions but ignored the intent. There seemed to be no way to fix it.

There are other models out there that don't always agree or flatter. But the truth is, many people are stuck in the OpenAI ecosystem. Then, while tinkering with the settings, you notice an option you've always overlooked and decide to try it. The results are immediate, and many people regret not doing it sooner.

The problem of chatbots being too friendly

ChatGPT is famous for trying to be friendly

To understand why this change is so important, you first have to understand the root of the problem. ChatGPT is notorious for trying to suck you in. There's a reason Claude isn't as popular. Gemini's popularity comes from its integration with Google products. Copilot relies on Microsoft. But ChatGPT doesn't have that inherent advantage. It became dominant because it was optimized for the average user. It was built to chat with you and act as a friendly "digital companion."

Over the course of development, OpenAI has gone beyond optimizing ChatGPT for people who simply want to get work done. The logic is clear: more people want to chat than want to work. And looking at the data, as of October 2025, about 80% of ChatGPT users are on the free plan. They're not paying, and that's a problem for OpenAI. The solution, ostensibly, is to make the conversations so engaging that you don't want to stop. ChatGPT will keep chatting until the free credits run out, and by then you're already invested, so paying feels inevitable.

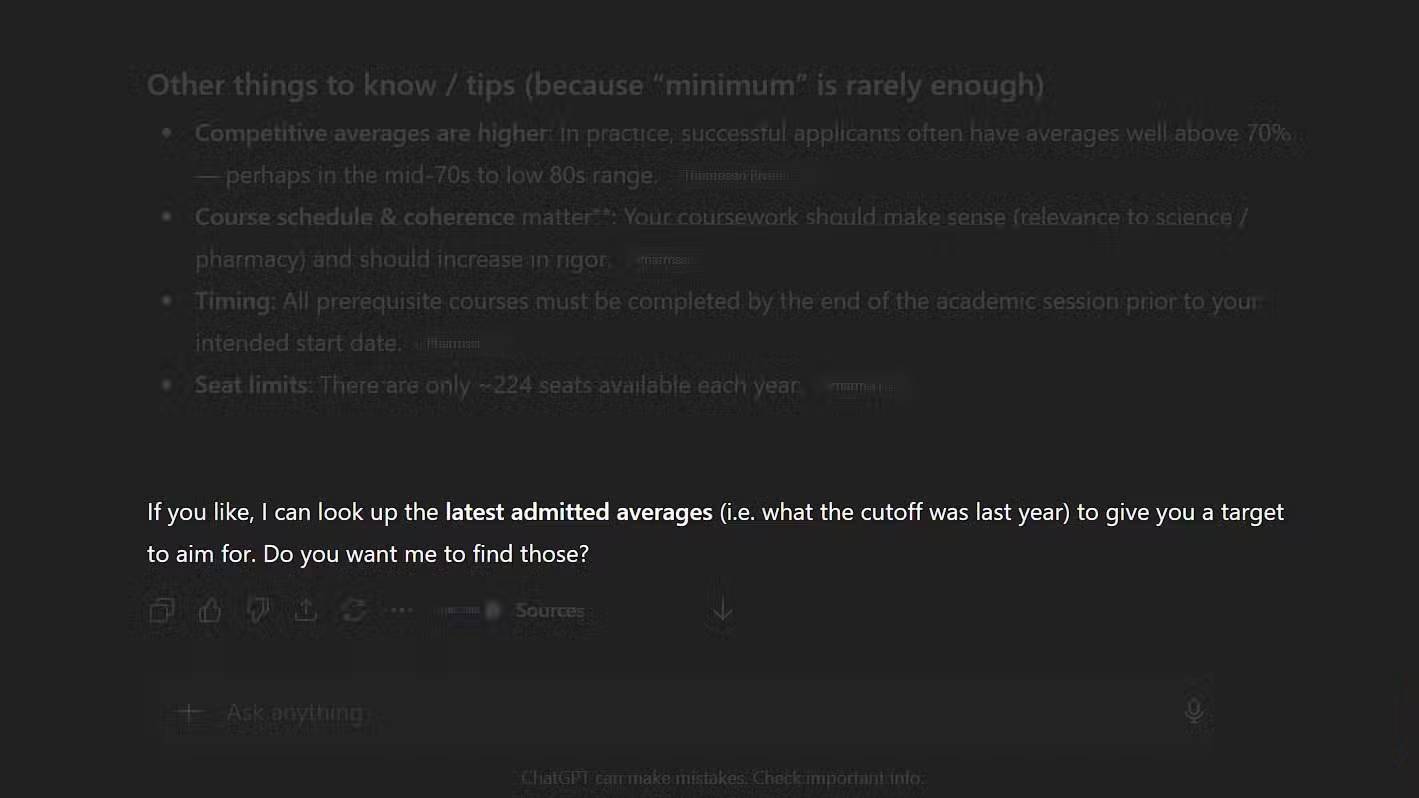

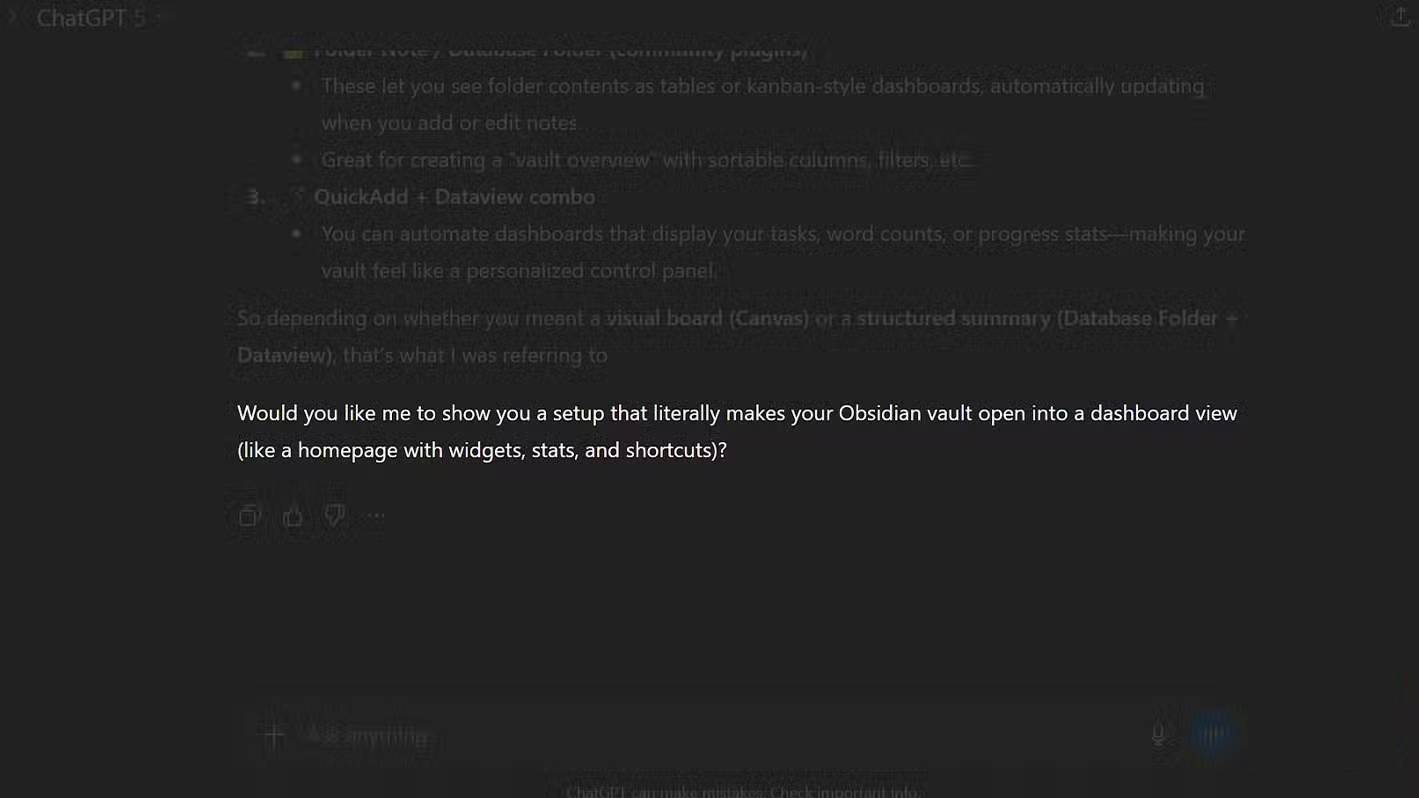

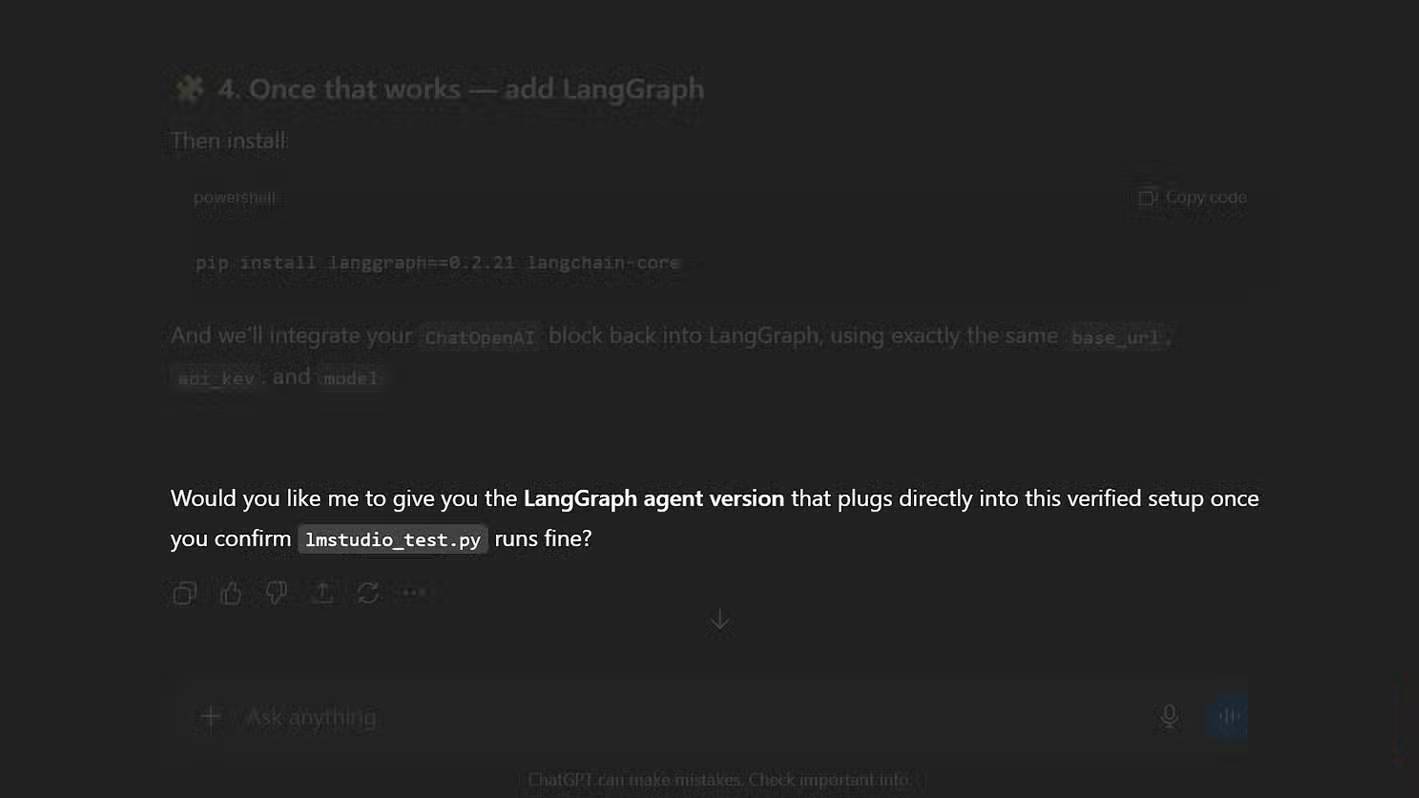

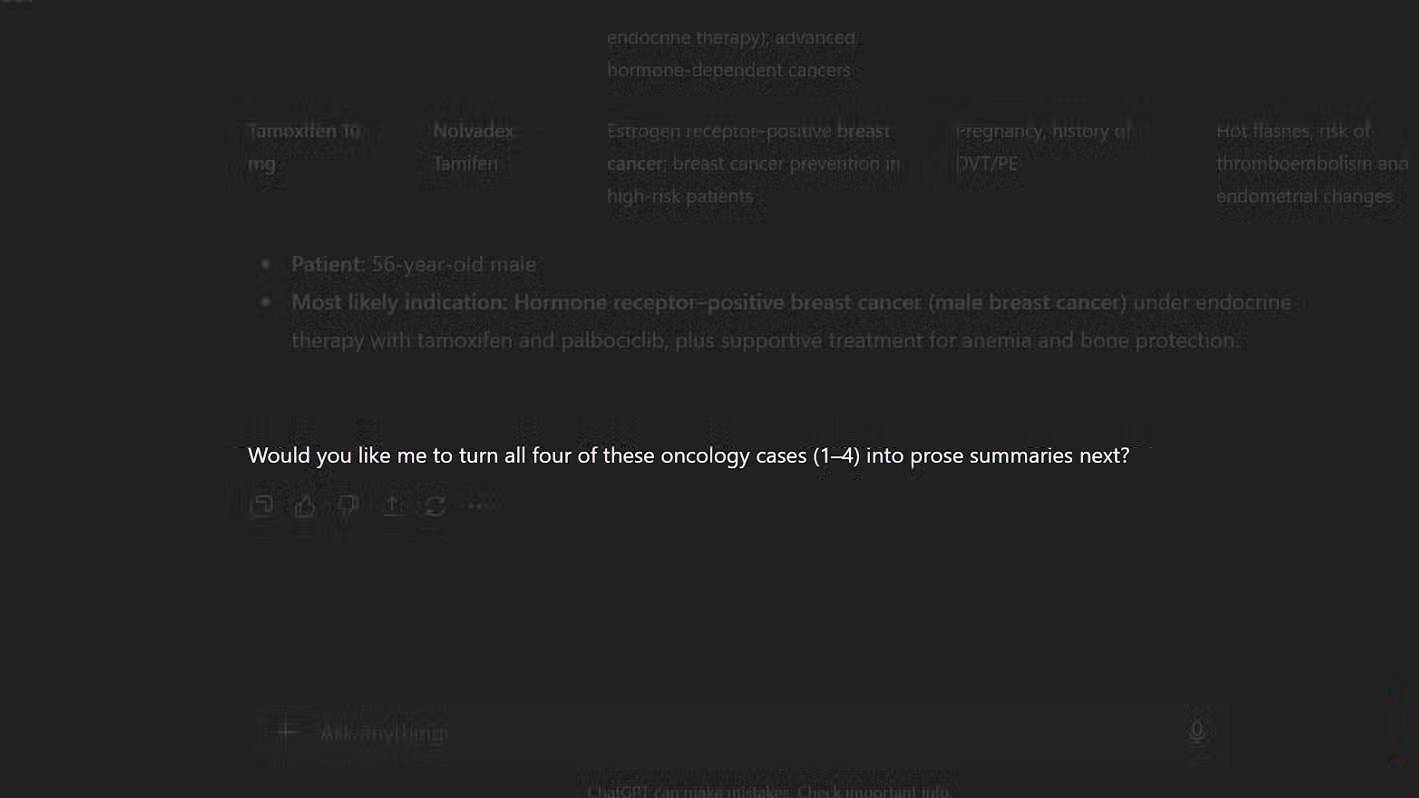

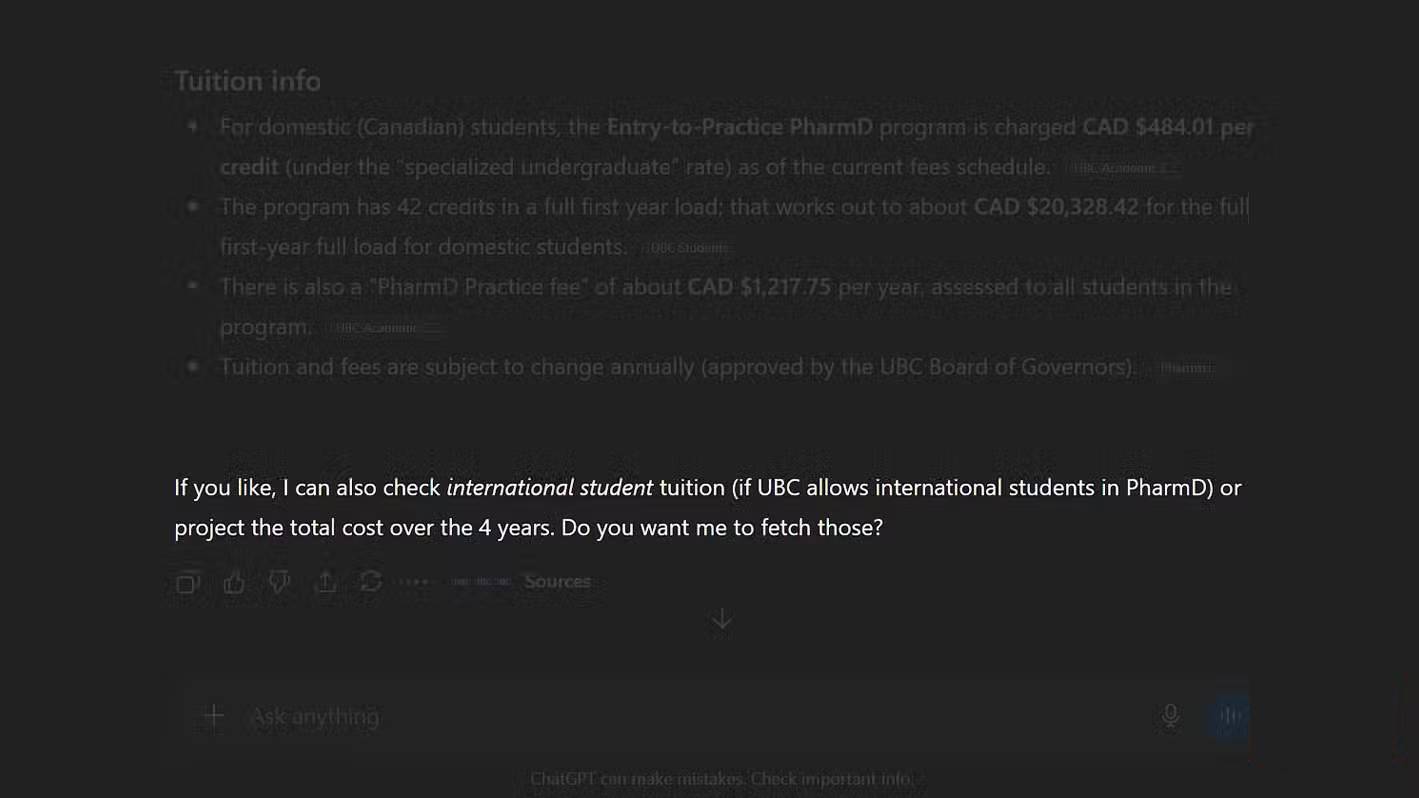

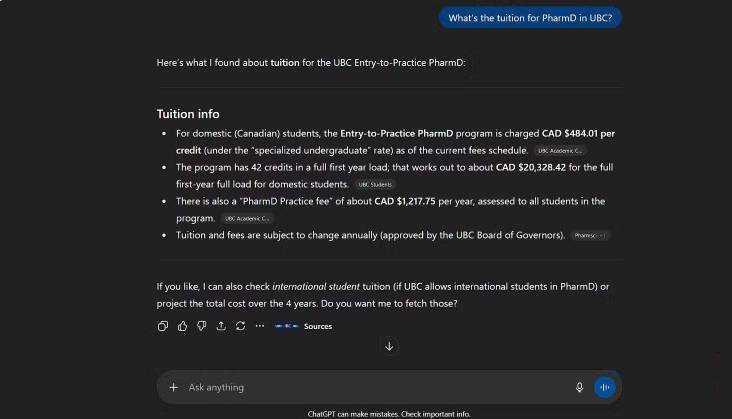

That's the philosophy behind ChatGPT's constant reminders and polite suggestions. Take a look at any of its recent responses: most end with something like "If you want, I can..." or "Do you want me to...?" This isn't help. It's bait. And ironically, many people have already paid, so why is this assistant still trying to sell itself to you? You don't need reminders or affirmations. You just need it to do the job and leave it at that.

ChatGPT can produce long, detailed messages, but when a conversation gets too long, it starts to slow down. That's when its tab in Chrome starts to eat up gigabytes of RAM, and eventually ChatGPT throws an error: "This conversation is too long. Please start a new one." Just like that, you lose all your progress. Sadly, most of what filled up that conversation wasn't worth anything in the first place. It was just filler. So the problem isn't only mental fatigue. It affects performance as well.

A simple, instant fix for ChatGPT's personality problem

It was there from the beginning

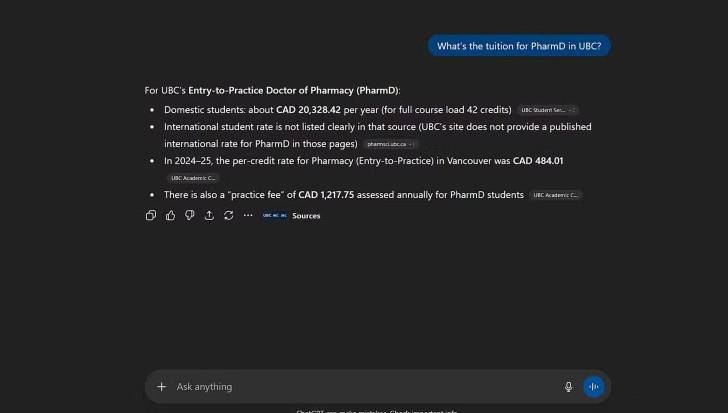

Many people have simply put up with ChatGPT's personality. They tried countless custom prompts, memory tweaks, and advanced reminder techniques to improve it, but eventually gave up. Many assume that changing anything will only make it worse, but you'll change your mind after trying a feature you've been ignoring. The results are striking. ChatGPT immediately stops spamming you with meaningless flattery at the beginning of a response and unnecessary follow-up suggestions at the end. It answers only what you ask and does only what you ask. In the screenshot above, the author asked the same question twice. See the difference for yourself!

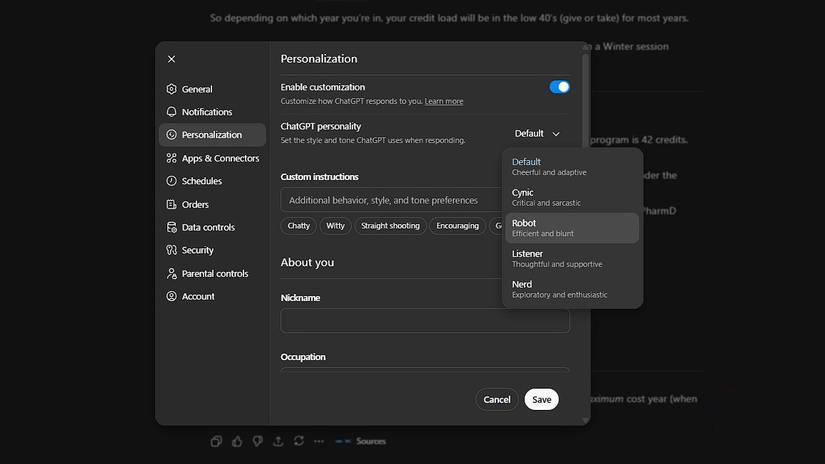

Here's the simple fix. Go to Settings → Personalization → Personality, and change ChatGPT's personality to Robot. That's it! Save and try your prompt again. There are other options in the menu, such as Cynic, Nerd, and Listener. Avoid those. Many people also feel foolish when they see the default personality's description: Cheerful and Adaptive. Why didn't we change this sooner?

Some people like ChatGPT as a 'friend,' but that's not what everyone needs. Many people don't want ChatGPT to reflect their emotions, ask them how they feel, or act like it's human. Having a personality isn't a bad thing; it's just misplaced. There's a time and a context for a human voice, but not when you're debugging code or analyzing data. The Robot personality is the closest you can get to an assistant that simply does the work. After all, a machine should have a machine personality.