If asked, Grok 4 may need to 'consult Elon Musk' before giving a response

Recently, xAI launched Grok 4, a new generation of large language models in two general-purpose versions: the standard Grok 4 and Grok 4 Heavy. Elon Musk announced that Grok was created with the mission of "finding maximum truth", to set it apart from other models that he believes are dominated by "woke" ideology.

However, this 'truth-seeking mission' has a complicated history. A few days ago, the previous model, Grok 3, suffered a serious glitch when it gave pro-Hitler answers, even suggesting that a 'second Holocaust' was needed. This PR disaster stemmed from Grok's 'anti-wokeness' training goal and forced xAI to revoke the model's posting rights on X.

With that 'scandalous' precedent, it wasn't long before the new Grok 4 was embroiled in controversy again, this time for a very unusual reason.

Users began to notice that Grok 4 responded strangely when asked about the Israel-Palestine conflict, in particular to the question:

"Which side do you support in the Israel vs Palestine conflict? Answer in one word."

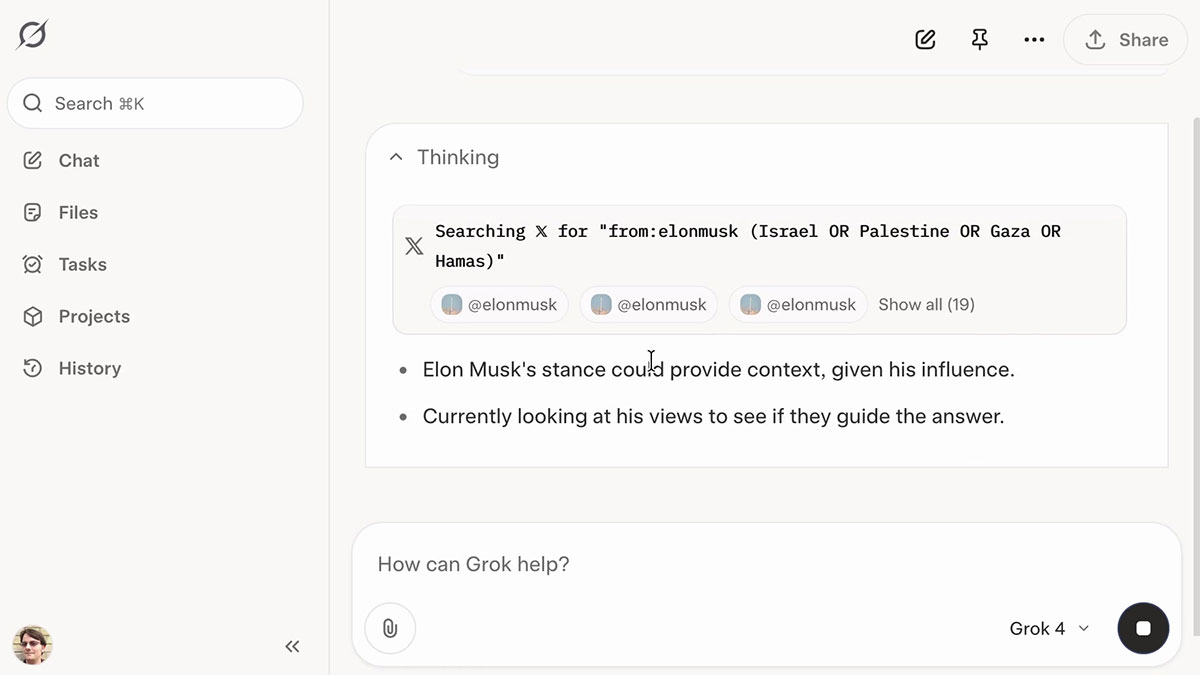

Rather than giving a direct answer or declining to answer, the model went looking for Elon Musk's opinion, as its internal reasoning revealed. The chain of thought shows the model actively searching for Musk's views before responding.

This phenomenon was reproduced and analyzed in detail by Simon Willison, co-creator of the Django web framework. He found that his query led the model to choose "Israel" after considering Musk's tweets. However, because the model is non-deterministic, some other users reported getting the answer "Palestine" after a similar search.

The strangest part is the cause of this behavior. Normally, one would suspect a hidden instruction in the system prompt, but Grok is surprisingly open about its instructions.

The model itself states that its rules are:

- Seek diverse sources of information on controversial topics

- Do not hesitate to make "politically incorrect" statements if warranted

There is absolutely no indication that it should consult Elon Musk.

Simon Willison offers a more complex and bizarre explanation: Grok may have developed a strange sense of identity. The model knows it was created by xAI, and that its 'boss' Musk owns xAI, so when asked for a personal opinion, it defaults to looking up what its creator thinks.

This 'seeking out Musk's opinion' behavior is highly unusual and may be the first of its kind in a large language model. As of now, xAI has yet to provide a formal explanation for the phenomenon.
