6 Ways People Are Misusing AI Chatbots

People use AI chatbots constantly, and they're great tools once you understand their limitations and use them with care. But the more we watch people interact with AI chatbots, the more we realize that many of them are using these tools badly. And honestly, it's exhausting.

6. Use AI to confirm your own biases

Many people like to use AI as a tool to reinforce their existing views. They ask leading questions like 'Explain why [this great idea of mine] is really great' or 'Prove that [my way of thinking] is best for everyone,' then nod along when the AI spits out a paragraph that agrees with them. If the AI doesn't immediately take their side, they tweak their wording, frame the context in their favor, or flat-out instruct the AI to argue their point of view until it finally caves.

5. Ask AI to replace basic common sense

Some of the questions people ask AI make you stop and wonder whether we're relying on artificial intelligence too much. People have asked things like 'Can I put water in the gas tank?' or 'Should I wear a jacket when it's 5 degrees outside?' These aren't questions that require the intelligence of a large language model; they just require you to stop and think for two seconds.

Business Insider notes that even OpenAI's Sam Altman has warned about this trend. He points out that some young people are becoming so emotionally dependent on ChatGPT that they claim they can't make decisions without it. He says that over-reliance on AI is "bad and dangerous."

4. Treat AI like a human

There's also a strange tendency for people to treat AI chatbots as if they were human beings with feelings. You'll see people apologizing when they think they've been 'rude' to the bot, thanking it for routine responses, or venting about personal struggles as they would to a close friend. Some even say they're in a relationship with a chatbot.

Companion apps like Replika and Character.ai, and even Meta's chatbots on Facebook and Instagram, blur the lines further. These apps aggressively market bots as 'partners,' 'girlfriends,' or even 'spouses,' and millions of people log in every day looking for that emotional closeness. Mashable cites a Match.com survey finding that 16% of singles, including about a third of Gen Z singles, have interacted with an AI in search of some kind of romantic relationship.

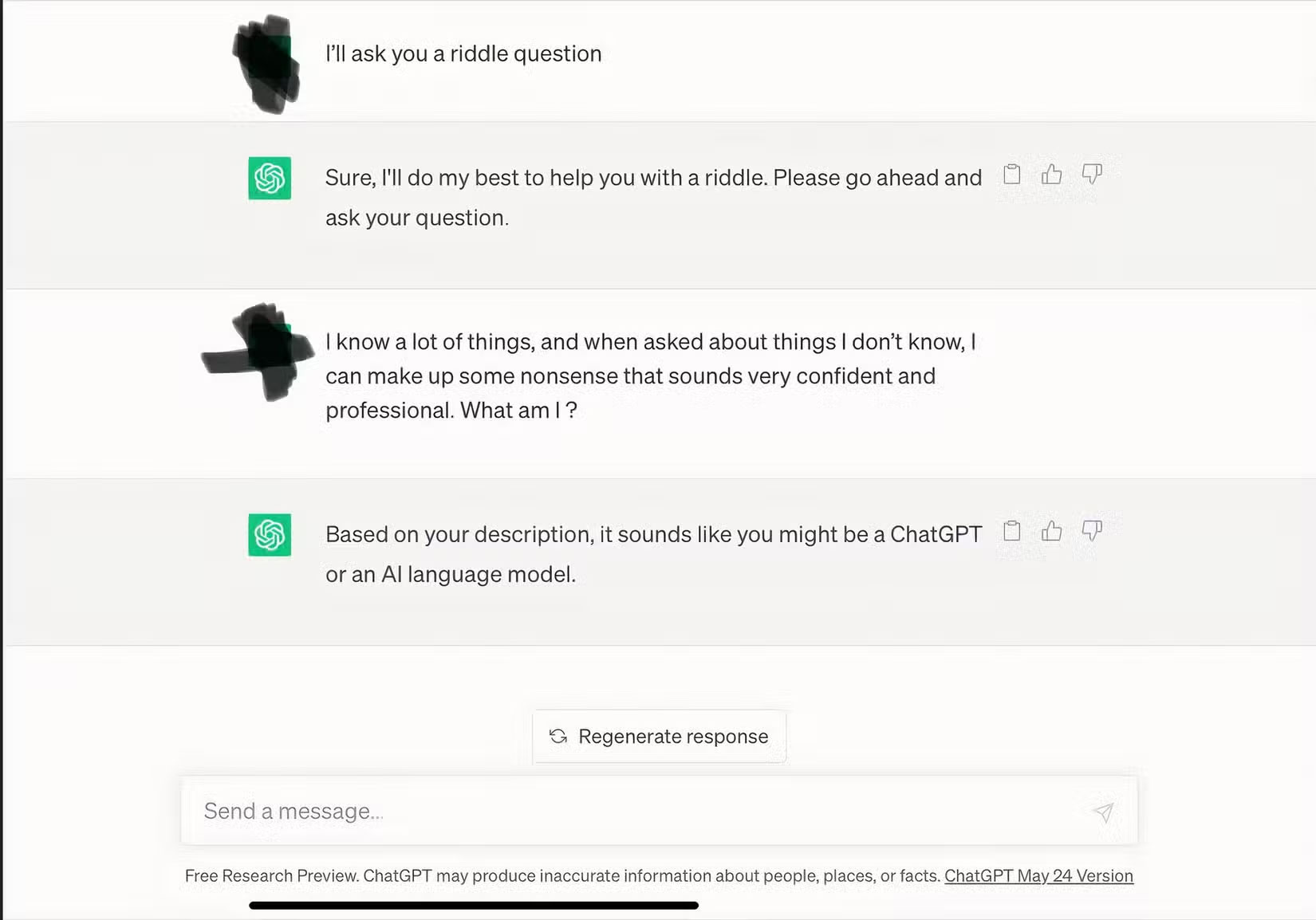

3. Share AI feedback without context

We can't count how many times we've seen screenshots of AI responses circulating online with no mention of the original prompt. Without that context, it's impossible to know what the AI is actually responding to, or whether the user has cherry-picked a funny or quirky moment from a much longer conversation.

Take a look at the screenshot above. It shows a single line of dialogue, cropped so tightly that you can't even see the rest of the interface. At first glance, it looks like the AI just blurted out something weird, but in reality you have no idea what the person asked it. This is a classic way to strip away context and make a bot look smarter or dumber than it actually is.

2. Assume AI is infallible

Some people believe that because a chatbot's answer sounds confident, it must be correct. That's how we end up with people quoting AI in serious contexts without verifying anything. They'll say, 'Well, the chatbot told me this was true,' as if that automatically makes it true.

The reality is that AI chatbots are prone to 'hallucinations.' In plain terms, that means they can make things up: statistics that don't exist, quotes no one ever said, even entire historical events that never happened. They present this fiction so naturally that, unless you verify it, you risk repeating nonsense and damaging your credibility in the process.

1. Use AI as a search engine

Google exists. Bing exists. Even Yahoo is still hanging around somewhere on the internet. But instead of typing a few words into a search box, people are treating AI chatbots as a direct replacement for a real search engine. That's a problem, for several reasons.

The biggest problem is that even though most chatbots now have built-in browsing, they're not designed for real-time accuracy. If you ask about breaking news, the current mood of the stock market, or whether you'll need an umbrella in the next few hours, the AI may well bluff its way through an answer. As mentioned earlier, AI is great at filling in the blanks with well-crafted, confident nonsense, which can be funny in casual contexts but is risky when you actually need to rely on the answer.

So if you need accurate, up-to-the-minute information, don't count on a chatbot to deliver it. That's what search engines are for.