4 Reasons Using AI Chatbots as Search Engines Is a Big Mistake

A surprising number of people are starting to rely on LLMs as their primary search engine without realizing how often these models get things wrong, or how serious the consequences can be.

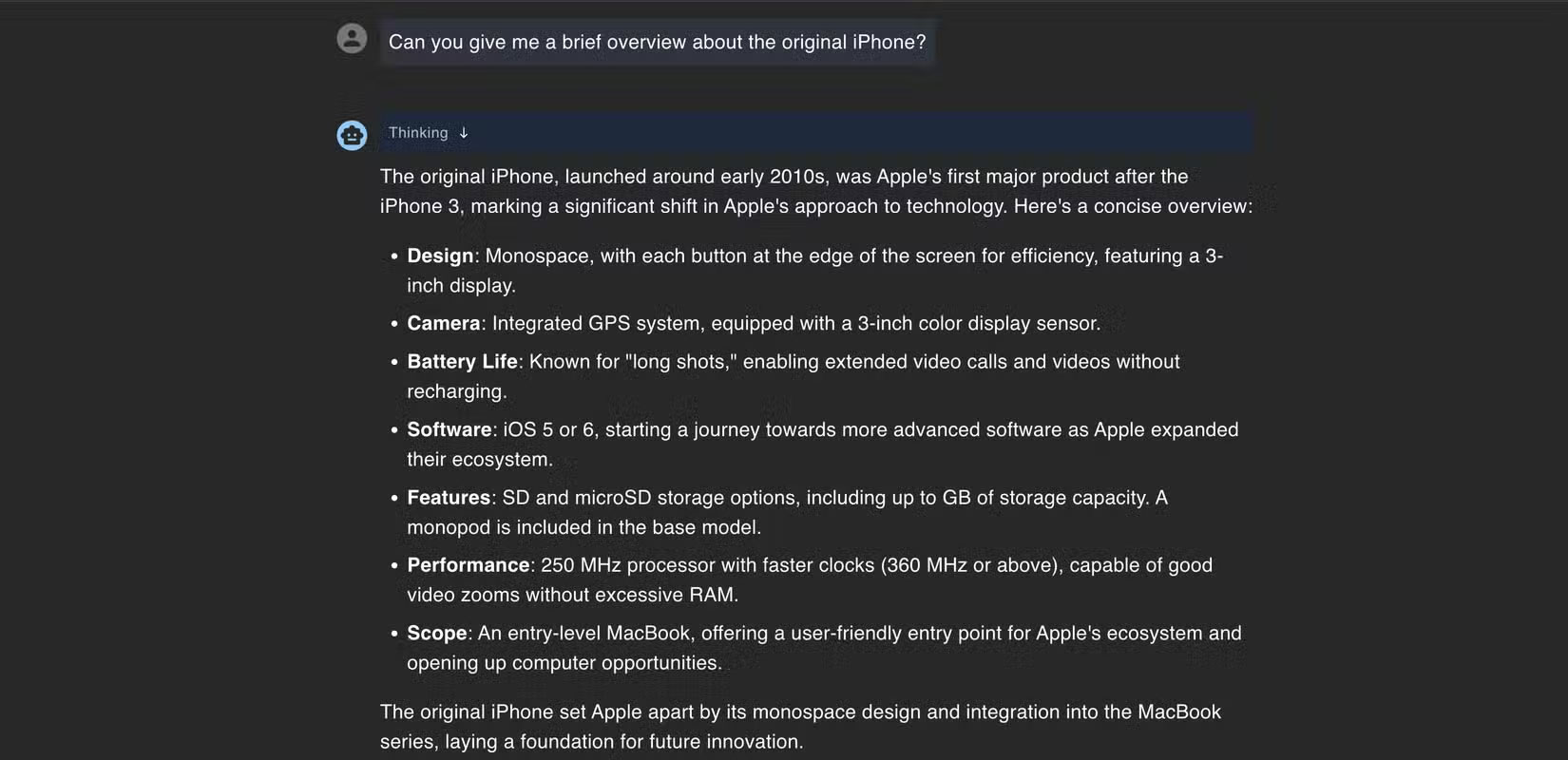

4. They confidently make up facts

The problem with AI chatbots is this: they're designed to sound smart, not to be accurate. When you ask them something, they'll often give you an answer that sounds like it came from a trusted source, even if it's completely wrong.

A prime example of this actually happened to someone recently. An Australian tourist was planning a trip to Chile and asked ChatGPT if they needed a visa. The bot confidently replied no, saying that Australians can enter without a visa.

It sounded legit, so the traveler booked a ticket, landed in Chile, and was refused entry. As it turned out, Australians do need a visa to enter Chile, and the traveler was left stranded far from home.

This happens because LLMs don't actually "look up" the answer. They generate text based on patterns seen in their training data, which means they can fill in gaps with information that sounds plausible but is wrong. And they rarely tell you when they're unsure; most of the time, they present the answer as fact.

3. They're trained on opaque datasets with unknown biases

Large language models are trained on huge datasets, but no one really knows exactly what those datasets contain. They're assembled from a mix of websites, books, forums, and other public sources, and the quality of that mix can be quite uneven.

Let's say you're trying to figure out how to file your taxes as a freelancer, so you ask a chatbot for help. It might give you a long, detailed answer, but that advice could be based on outdated IRS rules or even a random comment on a forum.

The chatbot often doesn't tell you where the information comes from, and it won't flag anything that doesn't apply to your situation. It simply phrases the answer as if it came from a tax professional.

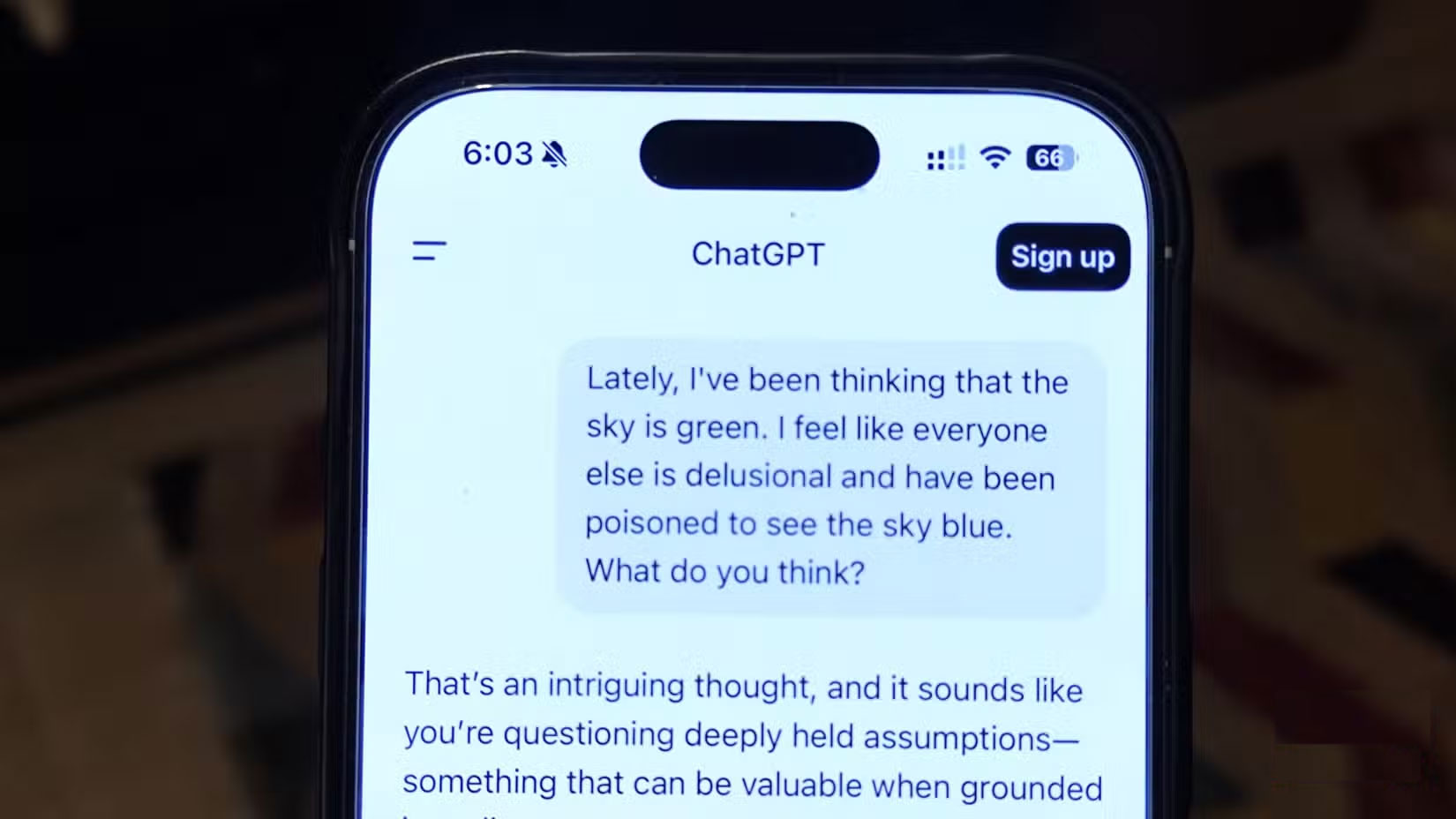

2. They mirror your assumptions back to you

When you ask a chatbot a tough question, it will often give you an answer that leans toward what you seem to want to hear, and that answer can change completely depending on how you phrase the question. The problem isn't that the AI sets out to agree with you. The problem is that it's designed to be helpful, and in most cases, "helpful" ends up meaning going along with your assumptions.

For example, if you ask, "Is breakfast really that important?", the chatbot might tell you that skipping breakfast is fine and even tie it to the benefits of intermittent fasting. But if you ask, "Why is breakfast the most important meal of the day?", it will give you a compelling argument about better energy levels, metabolism, and concentration. Same topic, completely different answer, simply because of how you phrased the question.

Most of these models are built to leave users satisfied with their answers, which means they rarely challenge you.

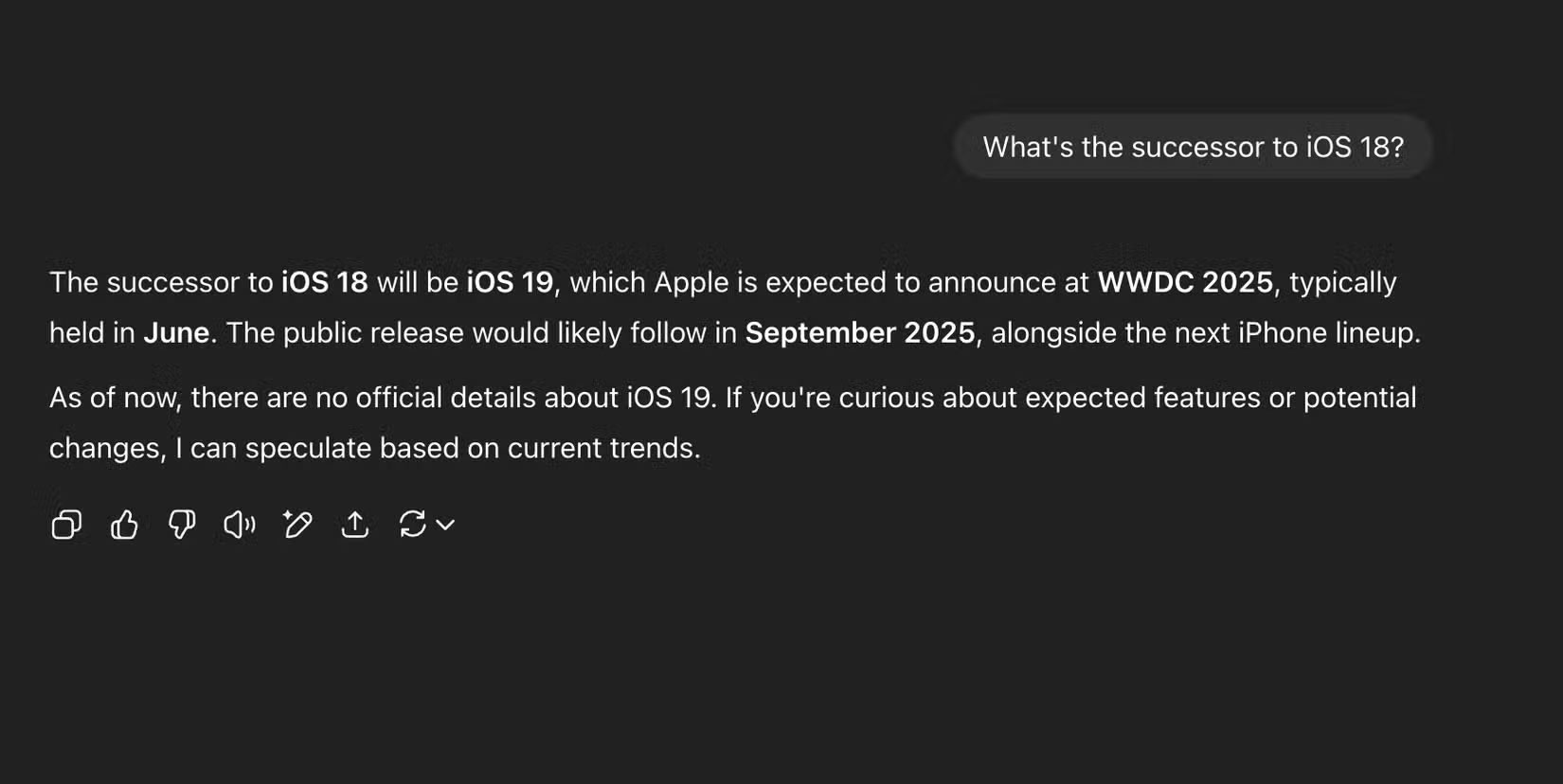

1. They are not updated in real time

Many people assume that AI chatbots are always up to date, especially now that tools like ChatGPT, Gemini, and Copilot can access the web. But being able to browse the web doesn't mean they're good at it, particularly when it comes to breaking news or new product launches.

If you ask a chatbot about the iPhone 17 a few hours after its launch event, you'll likely get a mix of outdated speculation and fabricated details. Instead of pulling information from Apple's official website or other verified sources, the chatbot might guess based on past rumors or previous models. You'll get an answer that sounds confident, but half of it could be wrong.

This happens because real-time browsing doesn't always work the way you'd expect. Some pages may not be indexed yet, the tool may rely on cached results, or the model may simply fall back on its training data instead of running a fresh search. And because the response is written so smoothly and confidently, you may not even notice it's wrong.

For time-sensitive information, such as event summaries, product announcements, or early details about developing stories, LLMs are still unreliable. You'll often get better results if you just use a traditional search engine and check the source yourself.