Why you shouldn't consider chatbots your best friends

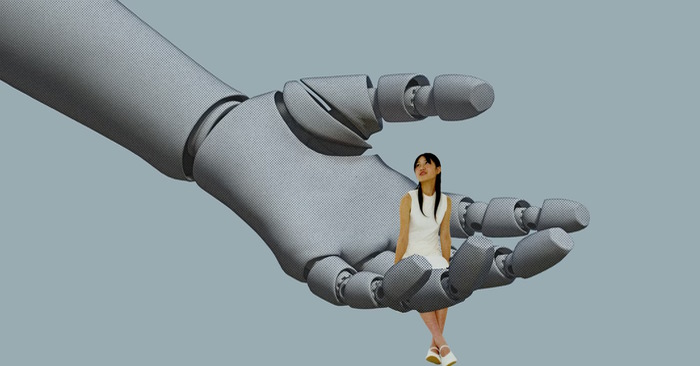

We are living in a vibrant era in which interactive AI tools and technologies are rapidly changing each person's social, family and work environments. However, over-reliance on AI carries psychological risks, with potential long-term impacts on mental health.

In a classic study, one-year-olds were placed on a transparent plastic sheet near the edge of a "visual cliff," which made it appear as if the ground dropped away and they might fall. Their mothers stood on the other side of the cliff, and the babies looked at their mothers' facial expressions to determine whether there was danger. If the mothers expressed positive emotions, most babies would crawl over the cliff. If the mothers expressed fear or negativity, the babies would not crawl forward.

This social referencing, in which we look to others to determine how to act, continues throughout life. People decide how to act and whom to trust based on emotional expressions. And now we have created a situation where emotional signals are no longer reliable, because they can be convincingly manipulated or altered.

Emotional displays from others are powerful signals that influence us and shape our behavior. They are also fundamentally social: bowlers don't smile when they make a good shot; they smile when they turn around to face their friends. If we see someone showing joy, it signals that they are approachable and that they welcome us. Displays of negative emotions can be even more powerful in shaping our behavior. If we see someone showing anger, we assume they are powerful and are more likely to comply with their demands. If someone shows disgust or contempt, we feel compelled to adjust our behavior to try to lessen their negative evaluation.

Relying on emotional cues to regulate behavior is often effective. These cues allow us to quickly understand how others perceive a situation and guide us toward what they care about. This helps us build and maintain relationships because we can communicate and interact better with the people around us. But now, AI avatars and chatbots can convincingly express emotions through facial expressions and body posture. Here are two big reasons why you shouldn't consider AI your best friend.

Amplify the user's own expectations

Social media algorithms have created echo chambers for people, where you can choose who you interact with and what information you receive. Over time, this leads to a narrow perspective and often to poor decisions based on limited information. Something similar can happen with chatbots. People tend to favor chatbots that express positive emotions toward them, such as appreciation and happiness. The more time people spend interacting with these avatars, the more they become accustomed to this high level of positivity. As a result, any negative emotional expression from other people, or even a neutral one, will feel overwhelming and discouraging.

With chatbots readily available as part of everyday life, people tend to spend more time interacting with them than with family, friends, and colleagues, who are more likely to respond with a range of emotions, including disagreement. Teens in particular may be affected, as they tend to over-perceive anger in other people's expressions.

The biggest loss for people who turn to AI's emotional echo chambers will be the lack of real feedback on their behavior. The emotions people express when communicating tell others how to interact with them and how they might need to adjust their own behavior.

For example, expressing anger in a romantic relationship highlights important issues, and the most common response is for the couple to work through the issue together. In general, the emotions people express when communicating with each other help to align their behavior with social expectations. Moral behavior and beliefs are transmitted socially through emotional expressions.

If someone does or says something that is considered immoral or against social norms, others will often express anger, contempt, or disgust. These expressions are powerful social motivators that compel the perpetrator to change his or her behavior. Without this feedback, people are much freer to act on and express their most destructive impulses.

Show emotions at their worst

Of course, chatbots that convey emotions can also have powerful effects on people's behavior. For example, dynamically emotional avatars can trick us in interpersonal interactions.

The relationship between expressing emotions and decoding them has been described as a constant evolutionary struggle. There is an advantage to being able to deceive others to get what we want, and part of being an effective liar is the ability to control our emotional expression. There is also an advantage to being able to detect deception in others, so that we can protect ourselves from lies. These abilities are thought to have co-evolved in humans over time, as we have become better at both deceiving and detecting deception.

Currently, lying and hiding emotions require effort and control. As a result, liars "leak" their true emotions through what are called microexpressions, which briefly reveal inner feelings, or through other cues like body posture and voice pitch. The ability to detect microexpressions in others varies from person to person. But now, people can use AI tools on videos of themselves to create fake emotional expressions. Such tools have even begun to incorporate microexpressions.

Once these expressions become realistic enough, people will no longer be able to receive genuine feedback from others about their behavior, or to accurately recognize emotions or deception in others.