Learn how Cache works (Part 3)

Learn how cache works (Part 1)

Learn how Cache works (Part 2)

Working

The CPU fetch unit will look for the next instruction to be executed in the L1 instruction cache. If it is not there, it will search on the L2 Cache. After that, if not, it will have to access the RAM to load the instruction.

We call a 'hit' when the CPU loads a requested or data instruction from the Cache, and calls a 'miss' if the instruction or data requested is not there and the CPU needs to access directly into RAM to retrieve this data.

Obviously when you turn on the computer, the Cache is completely empty, so the system will have to access RAM - this is an inevitable Cache miss. However, after the first instruction is loaded, this process will begin.

When the CPU loads an instruction from a certain memory location, the circuit that calls the memory cache controller loads into the memory cache a small data block below the current location that the CPU has just loaded. Since programs are usually done in a sequential manner, the next memory location the CPU will require may be the location directly below the memory location it just loaded. Also because the memory cache controller has loaded some data below the first location read by the CPU, the next data will probably be inside the memory cache, so the CPU does not need to access the RAM. to get the data in it: it has been loaded into the embedded memory cache in the CPU, which makes it accessible to the internal clock rate.

This amount of data is called a stream and it is usually 64 bytes long.

Besides loading a small amount of this data, the memory controller also seeks to guess what the CPU will require next. A circuit called pre-fetch circuit, will load more data placed after the first 64 bytes from RAM into the memory cache. If the program continues to load instructions and data from memory locations in such a sequential manner, the instructions and data that the CPU will ask next have been loaded into the memory cache beforehand.

We can summarize how the memory cache works as follows:

1. The CPU requires an instruction or data that has been stored at the 'a' address.

2. Since the content from the 'a' address does not have inside the Memory Cache, the CPU must fetch it directly from RAM.

3. The Cache controller will load a line (usually 64 bytes) starting from the 'a' address into the Memory Cache. It will load more than the data required by the CPU, so if the program continues to run sequentially (ie requires an address +1), the next instruction or data that the CPU will ask is Loaded in memory cache before.

4. The circuit called pre-fetch will load multiple data placed after this line, which means starting to load content from address a + 64 onwards into the Cache. To give you an example of the fact that Pentium 4 CPUs have 256-byte pre-fetches, it is therefore possible to load 256 bytes next to the stream of data loaded into the Cache.

If the program runs sequentially, the CPU will not need to fetch data by accessing RAM directly, except for loading the first instruction - because of instructions and data required by the CPU. will always be inside the memory cache before the CPU requests them.

Although programs that don't run are always the same, sometimes they can jump from one memory location to another. The main challenge of the main cache controller is to guess what addresses the CPU will jump to, and thereby load the contents of this address into the memory cache before the CPU requests to avoid the CPU being accessed. RAM is reduced to the performance of the system. This task is called branch prediction and all modern CPUs have this feature.

Modern CPUs have a hit rate of at least 80%, which means 80% of the time the CPU does not access the RAM directly, but instead the memory cache.

Organize memory Cache

The memory cache is divided into internal streams, each of which is between 16 and 128 bytes long, depending on the CPU. For the majority of current CPUs, the memory cache is organized according to 64byte lines (512bit), so we will consider the memory cache using 64byte lines in the examples throughout the beginning of this tutorial. . Below we will cover the main specifications of the Memory Cache for all CPUs currently on the market.

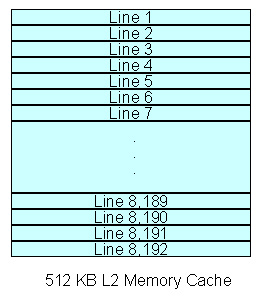

Memory cache 512 KB L2 is divided into 8,192 lines. You should note that 1KB is 2 ^ 10 or 1024 bytes, not 1,000byte, so 524.288 / 64 = 8192. We will consider the single-core CPU with a 512 KB memory cache L2 in the examples. In Figure 5 we simulate the internal organization of this memory cache.

Figure 5: How to organize L2 memory cache 512 KB

Memory cache can work under three different configuration types: direct mapping, global linking and link aggregation (in multiple lines).

Direct mapping

Direct mapping is the simplest way to create a memory cache. In this configuration, the main RAM memory is divided into equal lines located inside the Memory Cache. If we have a 1GB RAM system, this 1GB will be divided into 8,192 blocks (assuming that the Memory Cache uses the configuration we described above), each block has 128KB (1,073,741,824 / 8,192 = 131.072 - note that 1GB is 2 ^ 30 bytes, 1 MB is 2 ^ 20 bytes and 1 KB will be 2 ^ 10 bytes). If your system has 512MB then the memory will also be divided into 8,192 blocks but each of these blocks is only 64 KB. We have demonstrated how this is organized in Figure 6 below.

Figure 6: How to map the cache work directly

The advantage of direct mapping is that it is the simplest way.

When the CPU requests a certain address from RAM (eg, a 1,000 address), the Cache controller will load one line (64 bytes) from the RAM memory and store this line on the memory cache (ie from address 1,000). To 1,063, suppose that we are using an 8-bit addressing scheme. So if the CPU again requires the contents of this address or of some subsequent addresses (ie addresses between 1,000 and 1,063), they will be available inside the Cache.

The problem is that if the CPU needs two addresses mapped to the same cache line, then a miss will appear (this problem is called a collision phenomenon). Continuing our example, if the CPU requires a 1,000 address and then requests a 2,000 address, a miss will also appear because these two addresses are in the same 128KB block, and what is inside the Cache is a line starting from address 1,000. That's why the Cache controller loads a line from address 2,000 and stores it on the first line of the Memory Cache, deleting the previous content, in our case it's the line from address 1,000.

Also a problem. If the program has a loop of more than 64 bytes, then there is also a miss during the entire duration of the loop.

For example, if the loop executes from address 1,000 to address 1,100, the CPU will have to load all instructions directly from the RAM during the loop's duration. This problem will occur because the Cache will have content from 1,000 to 1,063 addresses and when the CPU requests content from the 1,100 address, it will have to go into RAM to retrieve the data, and then the controller The cache will load addresses from 1,100 to 1,163. When the CPU requests 1,000 addresses, it will have to go back to RAM, since the cache will not have data components from address 1,000. If this loop is executed 1,000 times, the CPU will have to enter the RAM to load data 1,000 times.

That's why direct mapping of memory cache is less effective and less used.

The whole association

The overall link configuration, in other words, has no difficulty linking between memory cache lines and RAM memory locations. The Cache controller can store any address. So the above-mentioned problems will not happen. This configuration is the most efficient configuration (ie the configuration has the highest hit rate).

In other words, the control circuit is much more complicated, because it needs to be able to keep track of which memory locations are loaded inside the Memory Cache. This is the reason for the introduction of a hybrid solution - called a linkage file - that is widely used today.

Learn how cache works (End section)

Learn how cache works (End section)