Learn how cache works

Memory cache is a type of high-speed memory located inside the CPU that speeds up access to data and instructions stored in RAM. In this tutorial, we explain how this memory works in the most understandable way we can.

A computer is completely useless unless you make the processor (CPU) perform a task. That work is done through a program, which consists of a large number of instructions that tell the CPU what to do.

The CPU fetches programs from RAM. RAM has a problem, however: when its power supply is cut, the data stored in it is lost. This is why RAM is described as a 'volatile' medium. Programs and data must therefore be stored on a non-volatile medium (such as a hard drive or an optical disc like a CD or DVD) so that they survive after the computer is turned off.

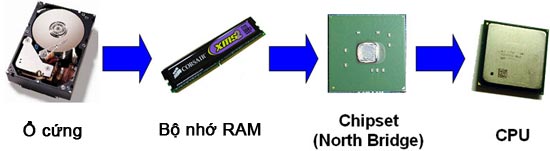

When you double-click an icon in Windows to run a program, the program, which is usually stored on the computer's hard drive, is loaded into RAM. From RAM, the CPU loads the program through a circuit called the memory controller. This component is located inside the chipset (the north bridge chip) on Intel-based systems, or inside the CPU itself on AMD-based systems. Figure 1 briefly summarizes this process (for AMD processors, ignore the chipset in the figure).

Figure 1: How data is transferred to the CPU

The CPU cannot fetch data directly from the hard drive because the drive's transfer rate is far too low for it, even if you have the fastest hard drive available. To illustrate: a SATA-300 hard drive, one of the fastest hard drives available to most users today, has a theoretical transfer rate of 300 MB/s. A CPU running at 2 GHz with a 64-bit data path* moves data internally at 16 GB/s, which is more than 50 times faster.

- * Data path: the lines between the circuits inside the CPU. Each CPU has several different data paths inside, each with a different width. On AMD processors, for example, the data path between the L2 memory cache and the L1 memory cache is 128 bits wide, while on Intel processors it is 256 bits wide. This is only to explain that the number given in the previous paragraph is not fixed; either way, the CPU is always much faster than a hard drive.

The difference in speed also stems from the fact that hard drives contain mechanical systems, which are always slower than purely electronic ones: mechanical parts must physically move before new data can be read, and this is much slower than moving electrons. RAM, by contrast, is 100% electronic, which makes it faster than hard drives and optical drives.

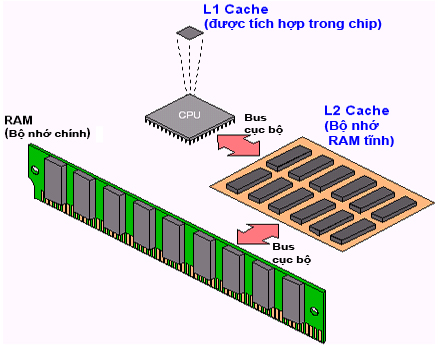

Here is the problem, however: even the fastest RAM is not as fast as the CPU. DDR2-800 memory transfers data at 6,400 MB/s, or 12,800 MB/s in dual-channel mode. Although this number is fairly close to the 16 GB/s of the previous example, current CPUs can fetch data from the L2 memory cache over a 128-bit or 256-bit path, so we are really talking about 32 GB/s or 64 GB/s if the CPU works internally at 2 GHz. Do not worry about what the 'L2 memory cache' is for now; we will explain it later. All you need to remember is that RAM is slower than the CPU.

The transfer rates above can be calculated with the following formula (in all the examples so far, 'data per clock' is taken as 1):

[Transfer rate (bytes/s)] = [width (number of bits)] x [clock rate (Hz)] x [data per clock] / 8
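As a quick check of the figures quoted so far, the formula can be written as a small function. This is only a sketch: the function name `transfer_rate_bytes_per_s` and the example labels are made up for illustration, and, as in the text, 'data per clock' is taken as 1 and DDR2-800 is treated as an 800 MHz, 64-bit bus.

```python
def transfer_rate_bytes_per_s(width_bits, clock_hz, data_per_clock=1):
    """Transfer rate = width (bits) x clock rate (Hz) x data per clock / 8."""
    return width_bits * clock_hz * data_per_clock / 8


# The examples from the text, with the assumptions stated above.
examples = {
    "CPU, 64-bit path at 2 GHz": transfer_rate_bytes_per_s(64, 2_000_000_000),
    "DDR2-800, single channel": transfer_rate_bytes_per_s(64, 800_000_000),
    "DDR2-800, dual channel": transfer_rate_bytes_per_s(128, 800_000_000),
    "L2 cache, 128-bit path at 2 GHz": transfer_rate_bytes_per_s(128, 2_000_000_000),
    "L2 cache, 256-bit path at 2 GHz": transfer_rate_bytes_per_s(256, 2_000_000_000),
}

for name, rate in examples.items():
    # Report in GB/s using 1 GB = 10^9 bytes, as the article does.
    print(f"{name}: {rate / 1e9:.1f} GB/s")
```

Dual-channel mode is modeled here simply as doubling the width to 128 bits, which gives the same result as doubling the single-channel figure.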

The problem is not only the transfer rate but also latency. Latency (access time) is the amount of time the memory takes to return the data the CPU has requested; this never happens instantly. When the CPU asks for an instruction (or data) stored at a certain address, the memory takes some time to deliver that instruction (or data). On current memory modules, a label of CL5 (CAS Latency, the delay we are talking about) means that the memory delivers the requested data only after 5 memory clock cycles, so the CPU has to wait.
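To get a feel for what a CAS latency figure means for the CPU, the sketch below converts CL cycles into nanoseconds and into CPU clock cycles. The function names are hypothetical, and the 400 MHz memory clock is an assumption: it is the physical clock of DDR2-800, which transfers data twice per cycle to reach its 800 MHz effective rate. Real access latency includes other delays that are not modeled here.

```python
def cas_latency_ns(cl_cycles, memory_clock_hz):
    """Time spent waiting for CL memory clock cycles, in nanoseconds."""
    return cl_cycles * 1e9 / memory_clock_hz


def cpu_cycles_waiting(cl_cycles, memory_clock_hz, cpu_clock_hz):
    """How many CPU clock cycles elapse during CL memory clock cycles."""
    return cl_cycles * cpu_clock_hz / memory_clock_hz


# Assumed values: CL5 memory clocked at 400 MHz, CPU running at 2 GHz.
CL = 5
MEMORY_CLOCK_HZ = 400_000_000
CPU_CLOCK_HZ = 2_000_000_000

print(f"CAS latency: {cas_latency_ns(CL, MEMORY_CLOCK_HZ):.1f} ns")
print(f"CPU cycles spent waiting: "
      f"{cpu_cycles_waiting(CL, MEMORY_CLOCK_HZ, CPU_CLOCK_HZ):.0f}")
```

Under these assumptions, a handful of memory cycles costs the CPU several times as many of its own cycles, because the CPU clock is faster than the memory clock.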

Waiting reduces CPU performance. If the CPU has to wait 5 clock cycles to receive the instruction or data it requested, its performance will be only 1/5 of what it would be with memory that could deliver data instantly. In other words, when accessing DDR2-800 memory with CL5, the CPU performs as if it were working with 160 MHz memory (800 MHz / 5) that delivers data instantly. In the real world the performance loss is not that large, because memory works in what is called burst mode: from the second piece of data onward, data can be delivered immediately, provided it is stored at contiguous addresses (the instructions of a program are usually stored at sequential addresses). This is expressed as 'x-1-1-1' ('5-1-1-1' for the memory in our example), meaning that the first piece of data is delivered after 5 clock cycles, but from the second onward each is delivered in just one clock cycle, as long as it is stored at contiguous addresses as described.
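The effect of burst mode can be illustrated with a little arithmetic. The sketch below counts clock cycles for an 'x-1-1-1' burst and compares them with paying the full latency on every access; the function names are made up for this example, and it assumes all requested data sits at contiguous addresses.

```python
def burst_cycles(first_latency, transfers):
    """Cycles for an 'x-1-1-1' burst: full latency once, then 1 cycle each."""
    if transfers <= 0:
        return 0
    return first_latency + (transfers - 1)


def no_burst_cycles(first_latency, transfers):
    """Cycles if every single access had to pay the full latency."""
    return first_latency * max(transfers, 0)


# The '5-1-1-1' example from the text: four pieces of data with CL5.
with_burst = burst_cycles(5, 4)
without_burst = no_burst_cycles(5, 4)

print(f"With burst mode:    {with_burst} cycles")
print(f"Without burst mode: {without_burst} cycles")
print(f"Average per access with burst: {with_burst / 4} cycles")
```

For the four accesses of a 5-1-1-1 burst, the total is 8 cycles instead of 20, an average of 2 cycles per access rather than 5.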

Dynamic RAM and Static RAM

There are two types of RAM: dynamic RAM (DRAM) and static RAM (SRAM). The RAM used as a computer's main memory is dynamic RAM. In this type of RAM, each bit of data is stored inside the memory chip in a tiny capacitor. Because capacitors are very small components, millions of them fit in a small circuit area, which is referred to as 'high density'. These capacitors lose their charge after a while, so dynamic memory needs a recharging process, usually called 'refresh'. During a refresh cycle, data cannot be read or written. Dynamic memory is cheaper than static memory and also consumes less power. However, as we have seen, dynamic RAM does not deliver data immediately and cannot work as fast as the CPU.

Static memory, on the other hand, can work as fast as the CPU, because each data bit is stored in a circuit called a flip-flop (FF), which can deliver data with very low latency since flip-flops do not require a refresh cycle. The problem is that each flip-flop requires several transistors, making it larger than a dynamic RAM capacitor. This means that in the same area where static memory holds only one flip-flop, dynamic memory holds hundreds of capacitors. Static memory therefore has lower density, which means chips with lower capacity. Two other problems with static memory are that it is usually much more expensive and that it consumes more power (and hence runs hotter) than dynamic memory.

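In the table below, we summarize the main differences between dynamic RAM (DRAM) and static RAM (SRAM).

| Feature | Dynamic RAM (DRAM) | Static RAM (SRAM) |
| --- | --- | --- |
| Storage circuit | Capacitor | Flip-flop |
| Refresh required | Yes | No |
| Speed | Slower than the CPU | Can match the CPU |
| Latency | High | Very low |
| Density | High | Low |
| Power consumption | Lower | Higher |
| Cost | Cheaper | Much more expensive |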

Although static RAM is faster than dynamic RAM, its disadvantages prevent it from being used as main memory.

The solution found to reduce the impact of using RAM that is slower than the CPU is to place a small amount of static RAM between the CPU and main RAM. This technique is called memory cache, and today this small amount of static memory is located inside the CPU itself.

The memory cache copies the most recently accessed data from RAM into static memory and guesses which data the CPU will ask for next, loading it into static memory before the CPU actually requests it. The goal is for the CPU to access the memory cache instead of accessing RAM directly, since it can get data from the cache immediately or almost immediately rather than waiting as it must for data located in RAM. The more often the CPU accesses the memory cache instead of RAM, the faster the system runs. Note also that we will use the terms 'data' and 'instructions' interchangeably, because to the memory, what is stored inside each address makes no difference.
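The idea of keeping recent data and guessing the next request can be tried out with a toy model. The sketch below is not how a real CPU cache is built: the `TinyCache` class, its least-recently-used replacement, its "load the next address too" guess, and the 1-cycle and 5-cycle access costs are all assumptions chosen to keep the example small.

```python
from collections import OrderedDict


class TinyCache:
    """Toy cache: keeps recently used addresses, optionally prefetches the next."""

    def __init__(self, capacity, prefetch=True):
        self.capacity = capacity
        self.prefetch = prefetch
        self.lines = OrderedDict()  # address -> True, oldest entry first
        self.hits = 0
        self.misses = 0

    def _load(self, address):
        # Copy an address into the cache, evicting the oldest if it is full.
        self.lines[address] = True
        self.lines.move_to_end(address)
        while len(self.lines) > self.capacity:
            self.lines.popitem(last=False)

    def access(self, address):
        if address in self.lines:
            self.hits += 1
            self.lines.move_to_end(address)
        else:
            self.misses += 1
            self._load(address)
        if self.prefetch:
            # Guess that the CPU will ask for the next address soon.
            self._load(address + 1)

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def average_access_cycles(hit_rate, cache_cycles=1, ram_cycles=5):
    """Average cost per access for the assumed cache and RAM costs."""
    return hit_rate * cache_cycles + (1 - hit_rate) * ram_cycles


# A program reading 100 sequential addresses, with and without the guess.
for use_prefetch in (True, False):
    cache = TinyCache(capacity=8, prefetch=use_prefetch)
    for addr in range(100):
        cache.access(addr)
    rate = cache.hit_rate()
    print(f"prefetch={use_prefetch}: hit rate {rate:.2f}, "
          f"average {average_access_cycles(rate):.2f} cycles per access")
```

In this model, sequential reads almost always hit the cache when the next address is preloaded, and always miss when it is not, which is why the average access cost moves toward the cache's cost in the first case and stays at the RAM's cost in the second.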

We will explain exactly how the Memory Cache works in the next part of the lesson.

- Learn how Cache works (Part 2)

- Learn how Cache works (Part 3)

- Learn how cache works (End section)