What is HDR? What is the difference between HDR formats?

HDR has been one of the most discussed display technologies of the last few years. From TVs and movies to computer monitors and games, HDR is appearing on more and more devices. Want to know what HDR is, and which HDR formats, standards and certifications exist? Find the answers in the article below!

What is HDR?

HDR, which stands for High Dynamic Range, is a technology designed to make images look as close to the real world as possible. You will come across the term in photography as well as in everything related to screens.

To make images as realistic as possible, HDR-enabled devices use a wider range of colors, brighter highlights and deeper dark areas. Together with a much higher contrast ratio, this makes images look more realistic and accurate, closer to what the human eye sees in the real world.

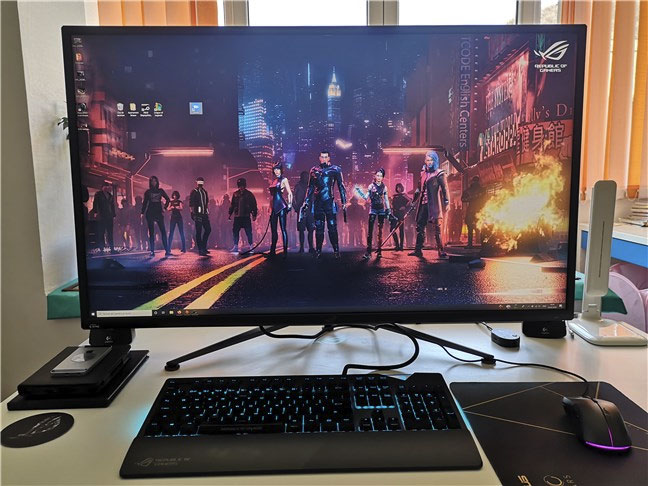

HDR is a technology designed to make images look as close to the real world as possible

When it comes to digital images displayed on monitors, TVs or similar devices, HDR is especially noticeable in pictures or videos with a complex mix of colors and of bright and dark areas, such as sunsets and sunrises, bright skies and snowy scenes.

What are the differences between the HDR10, HDR10+, Dolby Vision and HLG formats?

For screens, there are three main HDR formats: HDR10, HDR10+ and Dolby Vision. Each of these media profiles defines how video content is recorded or mastered and how it is displayed on devices with HDR screens. Although they all share the same goal, displaying images more faithfully, they differ in their requirements, specifications and attributes.

Several key criteria related to image quality distinguish the HDR formats. See the table below for a comparison:

Essential criteria define different HDR profiles

- Bit depth:

Laptop screens, TVs and most other screens, including smartphones, typically use 8-bit color (8 bits for each of the red, green and blue channels), which lets them display 16.7 million colors. HDR displays have a depth of 10 or 12 bits, which lets them display 1.07 billion or 68.7 billion colors respectively.

HDR10 and HDR10+ use 10-bit color, while Dolby Vision supports 12-bit color. These are all huge, impressive numbers. Keep in mind, however, that for the time being only 10-bit screens are on the market, matching HDR10 and HDR10+, so however good Dolby Vision's 12-bit color sounds, you can't yet enjoy it on any consumer screen.
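The color counts above follow from simple arithmetic. The small Python sketch below assumes, as the figures in this article do, three color channels (red, green and blue) that each use the stated number of bits; the function name `displayable_colors` is only illustrative.

```python
def displayable_colors(bits_per_channel: int) -> int:
    """Number of distinct RGB colors when each channel uses the given bit depth."""
    levels = 2 ** bits_per_channel  # shades available per channel (R, G or B)
    return levels ** 3              # every combination of the three channels

for bits in (8, 10, 12):
    print(f"{bits}-bit: {displayable_colors(bits):,} colors")
```

Each extra bit per channel doubles the shades of that channel, so going from 8 to 10 bits multiplies the total by 4³ = 64.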

- Maximum brightness:

This parameter refers to the peak luminance an HDR screen can reach. To display HDR images, a monitor needs a higher brightness level than a regular SDR (Standard Dynamic Range) screen. Maximum brightness is measured in cd/m² (also called nits) and usually must be at least 400 cd/m².

- Maximum brightness in black areas (black level):

As mentioned, HDR displays aim to show images as close to reality as possible. To do that, besides reaching very high luminance in bright areas, they must also be able to render very dark areas.

Typical values for this attribute are below 0.4 cd/m², but the HDR formats themselves set no requirement for it. VESA's DisplayHDR standards, however, do specify maximum black-level values. Any screen that can display black at a luminance below 0.0005 cd/m² is considered True Black.
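Peak brightness and black level together determine a screen's contrast ratio, commonly calculated as peak luminance divided by black-level luminance. The sketch below plugs in the figures from this article; pairing a 400 cd/m² peak with a 0.0005 cd/m² black level is an illustrative assumption rather than the specification of any particular screen, and `contrast_ratio` is a hypothetical helper.

```python
def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Contrast ratio: peak white luminance divided by black level (both in cd/m²)."""
    if black_nits <= 0:
        # A black level of exactly zero would give an undefined ("infinite") ratio.
        raise ValueError("black level must be positive")
    return peak_nits / black_nits

# Same 400 cd/m² peak, with a typical black level and with a True Black level.
for black in (0.4, 0.0005):
    print(f"black {black} cd/m² -> {contrast_ratio(400, black):,.0f}:1")
```

With the same 400 cd/m² peak, lowering the black level from 0.4 to 0.0005 cd/m² raises the ratio from 1,000:1 to 800,000:1, which is why deep blacks matter as much as bright highlights.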

- Tone mapping:

Content created in HDR, such as movies or games, can contain brightness values much higher than HDR screens can actually display. For example, some movie scenes may have brightness levels above 1000 cd/m², while the HDR screen you are watching on has a maximum brightness of only 400 cd/m². What happens then?

You might think that every part of the image brighter than 400 cd/m² is simply lost. In reality it isn't, at least not entirely. HDR displays perform tone mapping: they use algorithms to reduce the brightness of the image so that it does not exceed the screen's maximum brightness. Some information will inevitably be lost, and the contrast may even look worse than on an SDR screen. However, the picture still shows more detail than it would on an SDR screen.
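Manufacturers use their own, usually undisclosed, tone-mapping curves. As a rough illustration only, the Python sketch below uses a hypothetical `tone_map` function: luminance below a "knee" (arbitrarily set here to 75% of the display's peak) passes through unchanged, and everything between the knee and the content's mastered peak is squeezed into the remaining headroom with a simple concave curve.

```python
def tone_map(nits: float, content_peak: float, display_peak: float,
             knee_fraction: float = 0.75) -> float:
    """Map a scene luminance (cd/m²) into a display's range with a soft "shoulder".

    Illustrative curve only: values up to the knee are left unchanged, and values
    between the knee and content_peak are compressed into the remaining headroom,
    so content_peak lands exactly on display_peak.
    """
    if content_peak <= display_peak:
        return min(nits, display_peak)         # content already fits: no compression
    knee = knee_fraction * display_peak        # compression starts here
    if nits <= knee:
        return nits
    nits = min(nits, content_peak)             # clamp anything above the mastered peak
    t = (nits - knee) / (content_peak - knee)  # position inside the compressed range, 0..1
    shoulder = 2 * t / (1 + t)                 # concave curve with 0 -> 0 and 1 -> 1
    return knee + (display_peak - knee) * shoulder

# A movie mastered at 1000 cd/m² shown on a 400 cd/m² screen.
for scene_nits in (100, 300, 650, 1000):
    print(scene_nits, "->", round(tone_map(scene_nits, 1000, 400), 1))
```

In this example everything from 300 to 1000 cd/m² ends up between 300 and 400 cd/m²: bright highlights remain distinguishable from each other, but with much less contrast between them, which is the trade-off described above.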

- Metadata:

For an HDR display to show HDR content, whether a movie or a game, that content must be created in HDR. For example, you cannot record a movie in SDR and expect the TV to display it in HDR mode. Content created in HDR carries information, called metadata, about how it should be displayed. The devices that play it then use that information to decode the content correctly and apply the right brightness.

The problem is that not all HDR formats use the same type of metadata.

- HDR10 uses static metadata, which means the same display settings apply to the content from start to finish.

- HDR10+ and Dolby Vision, on the other hand, use dynamic metadata, which means the displayed image can be adjusted as the content plays. In other words, HDR content can use different brightness ranges for different scenes or even for each individual video frame.
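To make the static/dynamic difference concrete: HDR10's static metadata includes two values for the whole title, MaxCLL (Maximum Content Light Level, the brightest pixel) and MaxFALL (Maximum Frame-Average Light Level, the brightest frame on average). The Python sketch below computes both from toy data with one luminance value per pixel, then contrasts them with a simplified stand-in for dynamic metadata that stores a single peak value per scene. Real HDR10+ and Dolby Vision metadata carry far more than one peak per scene, so `dynamic_metadata` and the scene data are assumptions for illustration only.

```python
from statistics import mean

# Toy data: each frame is a list of pixel luminances in cd/m², grouped by scene.
# A real video frame has millions of pixels, each with R, G and B components.
scenes = {
    "night street": [[0.5, 20, 80], [1, 30, 120]],
    "sunny beach": [[200, 900, 1000]],
}

def static_metadata(scenes):
    """HDR10-style: one MaxCLL / MaxFALL pair describing the whole title."""
    all_frames = [frame for scene_frames in scenes.values() for frame in scene_frames]
    max_cll = max(max(frame) for frame in all_frames)    # brightest pixel anywhere
    max_fall = max(mean(frame) for frame in all_frames)  # brightest frame on average
    return {"MaxCLL": max_cll, "MaxFALL": max_fall}

def dynamic_metadata(scenes):
    """Simplified dynamic metadata: a separate peak luminance for each scene."""
    return {name: max(max(frame) for frame in frames) for name, frames in scenes.items()}

print(static_metadata(scenes))
print(dynamic_metadata(scenes))
```

With static metadata alone, a TV only knows that the title as a whole peaks at 1000 cd/m². With per-scene values, it can see that the "night street" scene never exceeds 120 cd/m² and needs no tone mapping at all on a 400 cd/m² screen.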

You may have noticed that the article has not yet mentioned HLG. HLG stands for Hybrid Log-Gamma and is an HDR standard that lets content distributors, such as television broadcasters, deliver content in both SDR (Standard Dynamic Range) and HDR (High Dynamic Range) with a single stream. When the stream reaches your TV, the content is displayed in SDR or HDR, depending on what the TV supports.
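The "hybrid" in the name refers to HLG's transfer curve, defined in ITU-R BT.2100: the darker part of the signal follows a conventional gamma-style (square-root) curve, close to what SDR TVs already expect, while the brightest part switches to a logarithmic curve that packs extra highlight range into the top of the signal. This is broadly why one HLG stream can look acceptable on an SDR set and better on an HDR one. The Python sketch below implements that OETF (the conversion from normalized scene light E to signal value E') with the BT.2100 constants; the function name `hlg_oetf` is illustrative.

```python
import math

# HLG OETF constants from ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A                  # 0.28466892
C = 0.5 - A * math.log(4 * A)  # about 0.55991073

def hlg_oetf(e: float) -> float:
    """Map normalized scene light E (0..1) to the HLG signal value E' (0..1)."""
    if e < 0 or e > 1:
        raise ValueError("E must be normalized to the range 0..1")
    if e <= 1 / 12:
        return math.sqrt(3 * e)          # "gamma" part: square-root curve for darker tones
    return A * math.log(12 * e - B) + C  # "log" part: compresses the bright highlights

for e in (0.0, 1 / 12, 0.5, 1.0):
    print(f"E = {e:.4f} -> E' = {hlg_oetf(e):.4f}")
```

The two branches meet at E = 1/12, where both give E' = 0.5, so the lower half of the signal range is the gamma part and the upper half is the logarithmic part.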

What is DisplayHDR?

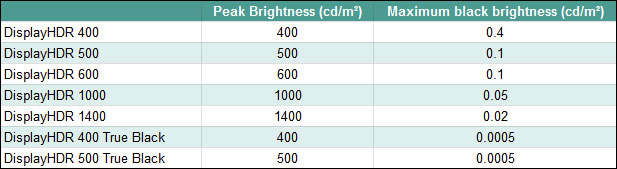

Besides the HDR formats, there is also an HDR performance certification called DisplayHDR. DisplayHDR-certified devices must meet a set of standards that ensure they can display HDR images at a certain level of quality. If you have ever shopped online or in electronics stores for a new TV or monitor, you may have seen labels such as DisplayHDR 400, DisplayHDR 600 or DisplayHDR 1000. What do they mean?

VESA (Video Electronics Standards Association) is an international association of more than 200 member companies that creates and maintains technical standards for all kinds of video displays, including TVs and computer monitors. One of the areas in which it sets such standards is HDR. Its standards for HDR displays are collectively called DisplayHDR and apply to screens that support at least HDR10. To be DisplayHDR-certified, a TV, monitor or any other device with an HDR screen must meet the following brightness requirements, along with many other specifications:

Standard for DisplayHDR certification
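The number in each DisplayHDR tier name is that tier's minimum peak brightness in cd/m². The hypothetical helper below uses only that one criterion and only the three tiers named above; actual certification also tests black level, color gamut, bit depth and more, and VESA defines further tiers, so treat it as an illustration of how the tier names read rather than a certification check.

```python
from typing import Optional

# Only the three tiers mentioned in this article; each value is the minimum
# peak brightness (cd/m²) the tier name refers to. All other criteria are ignored.
TIERS = {"DisplayHDR 400": 400, "DisplayHDR 600": 600, "DisplayHDR 1000": 1000}

def highest_tier_by_peak(peak_nits: float) -> Optional[str]:
    """Return the highest listed tier whose peak-brightness minimum is met, or None."""
    reached = [name for name, minimum in TIERS.items() if peak_nits >= minimum]
    return max(reached, key=TIERS.get) if reached else None

for peak in (350, 450, 1200):
    print(peak, "cd/m² ->", highest_tier_by_peak(peak))
```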
