The iPhone actually has AI features; they're just hidden!

AI is being heavily promoted today by companies like Google and Samsung, but did you know that the iPhone also uses AI in many ways you may never have noticed? Let's take a look at these AI features in the following article!

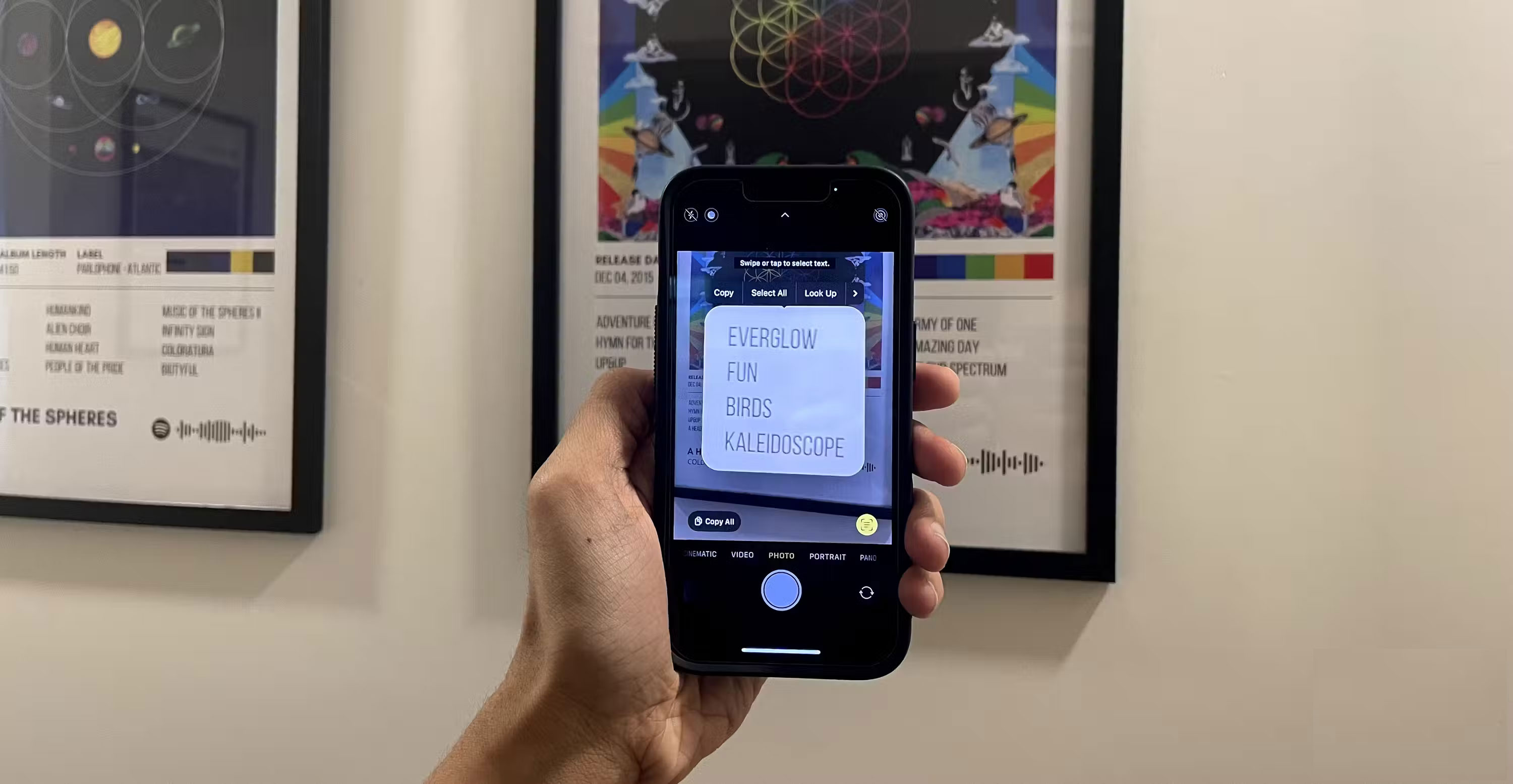

1. Copy text from images and videos via Live Text

Live Text lets you copy text from any image or video on iPhone. It uses Machine Learning and image recognition technology to recognize handwritten and typed text in various languages, including Chinese, French, and German. The feature is available on iPhone Xs or later, as well as on iPad and Mac.

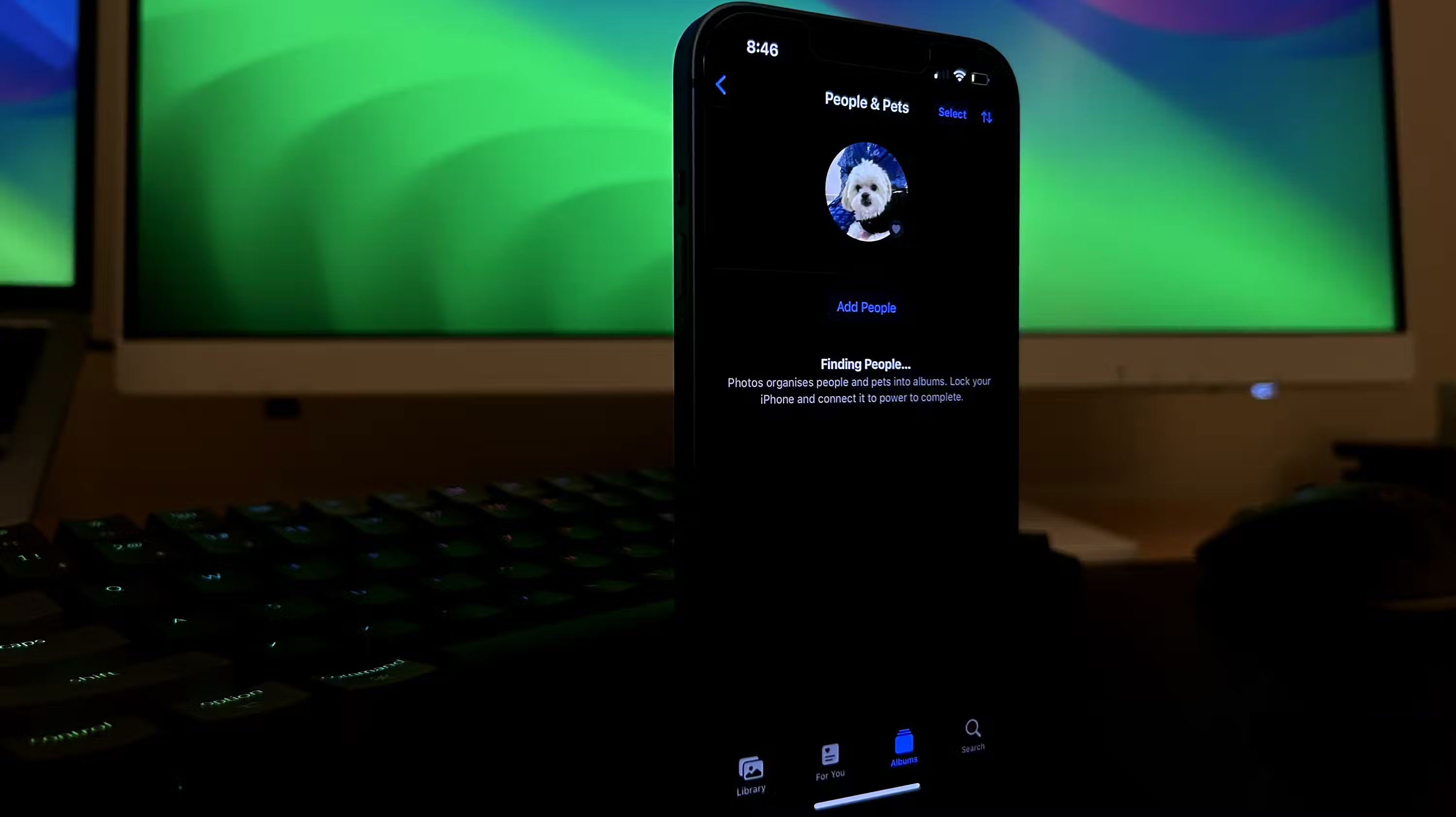

2. Identify people and pets in photos

In the Photos app, you'll find a neat feature that helps organize people and pets. This feature makes it easy to find specific individuals in a photo collection. Apple prioritizes privacy, so all the features mentioned in the article, including this one, use on-device processing, and no data is uploaded to Apple's servers.

3. Photonic Engine and Night Mode

We all know the iPhone has an excellent camera, but it's not just because of the hardware; the underlying software also plays an important role.

Night mode is the feature that best demonstrates the iPhone's software capabilities. In low-light conditions, when the user taps the shutter button in Night mode, the camera takes multiple photos at different exposures, then uses a Machine Learning algorithm to merge them, keeping the best parts of each photo.
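To make the idea of merging several exposures more concrete, here is a minimal Python sketch. It is not Apple's algorithm, which is not public; it swaps in a much simpler technique, a per-pixel weighted average that favors well-exposed (mid-gray) values. The function names, the `target` and `sigma` parameters, and the tiny sample frames are all assumptions made for illustration.

```python
import math

def well_exposedness(value, target=0.5, sigma=0.2):
    """Weight a pixel (0.0 to 1.0) by how close it is to mid-gray.

    Hypothetical weighting for illustration; target and sigma are assumed values.
    """
    return math.exp(-((value - target) ** 2) / (2 * sigma ** 2))

def merge_exposures(frames):
    """Merge same-sized grayscale frames (rows of floats in 0.0 to 1.0).

    Each output pixel is a weighted average of that pixel across all frames,
    so the frame that exposed a region best contributes the most there.
    """
    height, width = len(frames[0]), len(frames[0][0])
    merged = []
    for y in range(height):
        row = []
        for x in range(width):
            values = [frame[y][x] for frame in frames]
            weights = [well_exposedness(v) for v in values]
            total = sum(weights)  # always positive, since exp() is never zero
            row.append(sum(w * v for w, v in zip(weights, values)) / total)
        merged.append(row)
    return merged

# Two made-up 2x2 frames: a short (dark) exposure and a long (bright) one.
dark = [[0.02, 0.10], [0.05, 0.40]]
bright = [[0.45, 0.90], [0.55, 1.00]]
result = merge_exposures([dark, bright])
```

In this toy example, the top-left pixel ends up close to the bright frame's value because the dark frame is nearly black there, while the bottom-right pixel leans toward the dark frame because the bright frame is blown out.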

Another great example of iPhone using AI in photography is the Photonic Engine, available on the iPhone 14 lineup and later. It takes advantage of larger sensors and applies computational algorithms directly to the image to further improve low-light photos, delivering more detail and brighter colors.

4. Personalized recommendations in the Journal app

The Journal app was one of Apple's first efforts to improve users' mental health. The app may seem very basic to most users, with no visible trace of AI, but that is not the case.

The app uses on-device Machine Learning technology to analyze a user's recent activities, such as exercise, music preferences, and even who they've talked to. Based on this data, it provides personalized recommendations, trying to predict the user's current mood and state of mind.

While all this data collection may sound like a privacy nightmare, Apple says all entries are end-to-end encrypted. Because all the processing happens on the device, the data never leaves the iPhone.

5. Personal Voice

iPhone offers a number of accessibility features, and Personal Voice is one of the most popular. This feature serves people who are at risk of losing the ability to speak in the future, such as those with ALS.

When activating this feature, users need to record about 15 minutes of audio. Overnight, the iPhone processes this data, creating a synthesized version of the voice to use whenever needed. Once set up, users can type to speak using their Personal Voice during FaceTime and iPhone calls.

This is a remarkable demonstration of the power of the Neural Engine and of the impressive capabilities of the AI hardware on Apple devices.

6. Image description

This is another accessibility feature that's completely hidden on the iPhone but extremely useful for those with visual impairments. If you can't see the image due to poor eyesight, you can use the image description feature with VoiceOver and iPhone will read what it sees in the photo.

This feature isn't limited to the Photos app: you can also get real-time descriptions using the Camera app while VoiceOver is on. Alternatively, you can use the Magnifier app. To enable the feature there, tap the Settings icon in the lower left corner, then tap the plus (+) button next to Image description.

7. Face ID

Face ID is the familiar feature that seamlessly unlocks your iPhone. But did you know it uses the Apple Neural Engine to build a detailed 3D map of your facial features?

This process uses the TrueDepth camera, which collects depth data by projecting more than 30,000 invisible infrared dots onto the face and analyzing them. These dots create an accurate depth map, which is then used to form a detailed model of the facial structure.

8. Text Prediction and AutoCorrection

One of the most annoying things about iPhones before iOS 17 was that the autocorrect feature was quite messy compared to Android. Thankfully, it has improved a lot as the iPhone keyboard now runs Machine Learning models every time the user presses a key.

Another change that makes a big difference is that the keyboard recognizes the context of the sentence being typed, providing more precise autocorrect options. Apple improved this by applying a transformer language model, which uses artificial neural networks to better understand the relationships between words in sentences.

iPhone also uses AI for its Predictive Text feature, which offers inline suggestions to automatically complete sentences.
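For readers curious about how a keyboard can suggest the next word at all, the Python sketch below shows the general idea using a far simpler stand-in technique: counting which word most often follows another (a bigram model). This is not how Apple's keyboard works, since a transformer language model looks at much more context. The function names and the sample typing history are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count, for every word, which words have followed it and how often."""
    follow_counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            follow_counts[current][following] += 1
    return follow_counts

def suggest_next(follow_counts, word, limit=3):
    """Return up to `limit` words that most often followed `word`."""
    counts = follow_counts.get(word.lower(), Counter())
    return [candidate for candidate, _ in counts.most_common(limit)]

# Hypothetical typing history, used here only as training data.
history = [
    "see you tomorrow",
    "see you soon",
    "see you tomorrow morning",
    "thank you so much",
]
model = train_bigrams(history)
top_suggestion = suggest_next(model, "you", limit=1)
```

Here, "tomorrow" followed "you" twice in the history and every other word only once, so it becomes the top suggestion. A word the model has never seen simply produces no suggestions.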

iPhone is a lot smarter than you think thanks to Apple's silent implementation of AI across various features. Hopefully Apple will announce a series of new AI features at the upcoming WWDC 2024 event.