How to Run a Large Language Model (LLM) on Linux

Large language models (LLMs) have the power to revolutionize the way you live and work. They can hold conversations and answer questions with varying degrees of accuracy.

To use one, you usually need an account with an LLM provider and must log in via a dedicated website or app. But did you know that you can run your own large language model completely offline on Linux?

Why run a large language model on Linux?

Today, large language models are everywhere. They can process natural language and give responses convincing enough to fool you into thinking a human is answering. Microsoft is rolling out a new AI-powered version of Bing, while Alphabet's Bard is now an integral part of Google searches.

In addition to search engines, you can use so-called AI chatbots to answer questions, compose poetry, or even do your homework for you.

But when you access an LLM online, you depend on a third-party provider, which can start charging a fee at any time.

You may also be restricted in what you can use it for. For example, ask OpenAI to write a 6,000-word pornographic novel set in Nazi Germany and you'll get the response "Sorry, but I won't create that story for you."

Anything you enter into an online LLM may be used to further train the model, so data you want to keep secret could be revealed in the future as part of the answer to someone else's question. You may also be unable to access the service because it is overloaded with users or requires you to register.

Dalai is a free and open-source implementation of Meta's LLaMA LLM and Stanford's Alpaca. It runs comfortably on modest hardware and offers a handy web interface and a range of prompt templates, so you can ask whatever you want without fearing that an administrator will close your account, that the LLM will refuse to answer, or that your connection will fail.

When you install an LLM locally on Linux, it's yours, and you can use it however you want.

How to Install Dalai on Linux

The easiest way to install Dalai on Linux is to use Docker and Docker Compose. If you don't already have these, check out TipsMake's guide on installing Docker and Docker Compose.
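Before cloning anything, you can confirm that both tools are on your PATH. The following is a minimal POSIX shell sketch; `require_cmd` is a hypothetical helper name, not part of Docker or Dalai, and it checks for the standalone `docker-compose` binary because that is what the commands in this guide call.

```shell
#!/bin/sh
# Hypothetical helper (not part of Docker or Dalai):
# succeed if a command is on PATH, otherwise print a hint and fail.
require_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1 (install it before continuing)" >&2
    return 1
  fi
}

# This guide calls the standalone docker-compose binary, so check for both.
if require_cmd docker && require_cmd docker-compose; then
  echo "Docker and Docker Compose are installed."
fi
```

If `docker-compose` is missing but your Docker installation ships the newer Compose plugin, the commands in this guide should generally work in the space-separated form (`docker compose ...`) instead.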

Once you have them, you're ready to install Dalai. Clone Dalai's GitHub repository and use the cd command to move into it:

git clone https://github.com/cocktailpeanut/dalai.git && cd dalai

To get Dalai up and running with the web interface, first build it with Docker Compose:

docker-compose build

Docker Compose will download and install Python 3.11, Node Version Manager (NVM), and Node.js.

At stage 7/9, the build will appear to freeze while Docker Compose downloads Dalai. Don't worry: check your bandwidth usage to reassure yourself that something is happening.
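One way to check is to sample the kernel's per-interface receive counter in `/proc/net/dev` twice and compare. This is a sketch assuming a Linux host; `rx_bytes` and `watch_download` are hypothetical helper names, and the interface name you pass (for example `eth0` or `wlan0`) depends on your machine (`ip link` lists them).

```shell
#!/bin/sh
# Hypothetical helper: print the received-bytes counter for one interface.
# The file argument defaults to /proc/net/dev (overridable for testing).
rx_bytes() {
  iface=$1
  file=${2:-/proc/net/dev}
  awk -v want="$iface" '
    NR > 2 {
      # Lines look like "  eth0: 12345 ...": name before the colon, counters after.
      split($0, parts, ":")
      name = parts[1]
      gsub(/[ \t]/, "", name)
      if (name == want) {
        split(parts[2], f, " ")
        print f[1]          # first counter is receive bytes
        found = 1
      }
    }
    END { if (!found) exit 1 }
  ' "$file"
}

# Hypothetical helper: report how much arrived on an interface in 5 seconds.
watch_download() {
  iface=$1
  before=$(rx_bytes "$iface") || return 1
  sleep 5
  after=$(rx_bytes "$iface") || return 1
  echo "$iface received $(( (after - before) / 1024 )) KiB in the last 5 seconds"
}
```

Run `watch_download eth0` (substituting your interface) from a second terminal while the build sits at stage 7/9; a non-zero figure means data is still arriving.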

Eventually, you'll be returned to the command prompt.

Dalai and the LLaMA/Alpaca models need a lot of memory to run. There aren't any official specifications, but a rough guide is 4GB of RAM for the 7B model, 8GB for the 13B model, 16GB for the 30B model, and 32GB for the 65B model.
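To turn that rough guide into a quick check, the sketch below reads total RAM from `/proc/meminfo` and picks the largest model size the guide allows. The thresholds are the figures above; the helper names are hypothetical. Because Linux usually reports slightly less memory than a machine's nominal size, passing your nominal RAM in GB directly may give a more sensible answer.

```shell
#!/bin/sh
# Hypothetical helper: map RAM in whole GB to the largest model size
# allowed by the rough guide (4GB -> 7B, 8GB -> 13B, 16GB -> 30B, 32GB -> 65B).
suggest_model_size() {
  ram_gb=$1
  if [ "$ram_gb" -ge 32 ]; then
    echo 65B
  elif [ "$ram_gb" -ge 16 ]; then
    echo 30B
  elif [ "$ram_gb" -ge 8 ]; then
    echo 13B
  elif [ "$ram_gb" -ge 4 ]; then
    echo 7B
  else
    echo "none: less than 4GB of RAM" >&2
    return 1
  fi
}

# Hypothetical helper: read MemTotal (reported in kB) and round to the nearest GB.
total_ram_gb() {
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1048576 + 0.5 }' /proc/meminfo
}
```

For example, `suggest_model_size "$(total_ram_gb)"` checks the current machine, and `suggest_model_size 16` prints `30B`.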

Alpaca models are relatively small, with the 13B model weighing in at a modest 7.6GB, but LLaMA weights can be huge: the equivalent 13B download comes in at 60.21GB, and the 65B model will take up half a terabyte on your hard drive.
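Given those sizes, it is worth confirming the target filesystem has room before you start a download. This sketch uses POSIX `df -Pk`; the helper names are hypothetical, and which path to check is an assumption about your setup: the directory you cloned Dalai into is a reasonable start, but Docker may keep data elsewhere (often under `/var/lib/docker`).

```shell
#!/bin/sh
# Hypothetical helper: free space (whole GB, rounded down) on the
# filesystem holding a path. df -P guarantees one line per filesystem.
free_gb() {
  df -Pk "${1:-.}" | awk 'NR == 2 { printf "%d\n", $4 / 1048576 }'
}

# Hypothetical helper: succeed only if at least need_gb GB are free at path.
enough_space() {
  need_gb=$1
  path=${2:-.}
  have_gb=$(free_gb "$path")
  if [ "$have_gb" -ge "$need_gb" ]; then
    echo "ok: ${have_gb}GB free, ${need_gb}GB needed"
  else
    echo "not enough space: ${have_gb}GB free, ${need_gb}GB needed" >&2
    return 1
  fi
}
```

For example, run `enough_space 61 .` before fetching the 60.21GB LLaMA 13B weights, or `enough_space 8 .` for Alpaca 13B.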

Decide which model best fits your resources and use the following command to install that model:

docker-compose run dalai npx dalai alpaca install 13B

Or:

docker-compose run dalai npx dalai llama install 13B

Warning: There is a chance that models downloaded via Dalai may be corrupted. If this happens, get them from Hugging Face instead.
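If the source you download from publishes a checksum (Hugging Face, for example, typically shows a SHA256 for files stored with Git LFS), comparing it against your copy is a quick way to spot corruption. A sketch assuming GNU coreutils' `sha256sum`; `verify_sha256` is a hypothetical helper, and the file path you pass is a placeholder, since where the model files end up depends on how the Compose setup stores them.

```shell
#!/bin/sh
# Hypothetical helper: compare a file's SHA-256 against an expected hex digest.
verify_sha256() {
  file=$1
  expected=$2
  # sha256sum prints "<digest>  <filename>"; keep only the digest.
  actual=$(sha256sum "$file" | awk '{ print $1 }')
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file (got ${actual:-nothing})" >&2
    return 1
  fi
}
```

Usage: `verify_sha256 path/to/model.bin <published-sha256>`; a mismatch suggests re-downloading the file.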

Once you're back at the command prompt, bring Docker Compose up in detached mode:

docker-compose up -d

Check that the container is running properly with:

docker-compose ps

If everything is working properly, open a web browser and enter localhost:3000 in the address bar.
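The web interface can take a moment to come up after `docker-compose up -d`. Instead of refreshing the browser, you could poll it from the shell. This sketch assumes `curl` is installed; `wait_for_ui` is a hypothetical helper, and its default URL and the `dalai` service name come from the commands above.

```shell
#!/bin/sh
# Hypothetical helper: poll a URL until it answers or the attempts run out.
wait_for_ui() {
  url=${1:-http://localhost:3000}
  tries=${2:-30}
  i=0
  while :; do
    # -f: fail on HTTP errors, -s: quiet, --max-time: don't hang on one attempt.
    if curl -fs -o /dev/null --max-time 2 "$url"; then
      echo "Dalai UI is up at $url"
      return 0
    fi
    i=$((i + 1))
    [ "$i" -ge "$tries" ] && break
    sleep 2
  done
  echo "no response from $url after $tries attempts; try: docker-compose logs dalai" >&2
  return 1
}
```

Running `wait_for_ui` checks `http://localhost:3000` up to 30 times, two seconds apart; if it gives up, `docker-compose logs dalai` shows what the container printed.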

Enjoy your own large language model on Linux

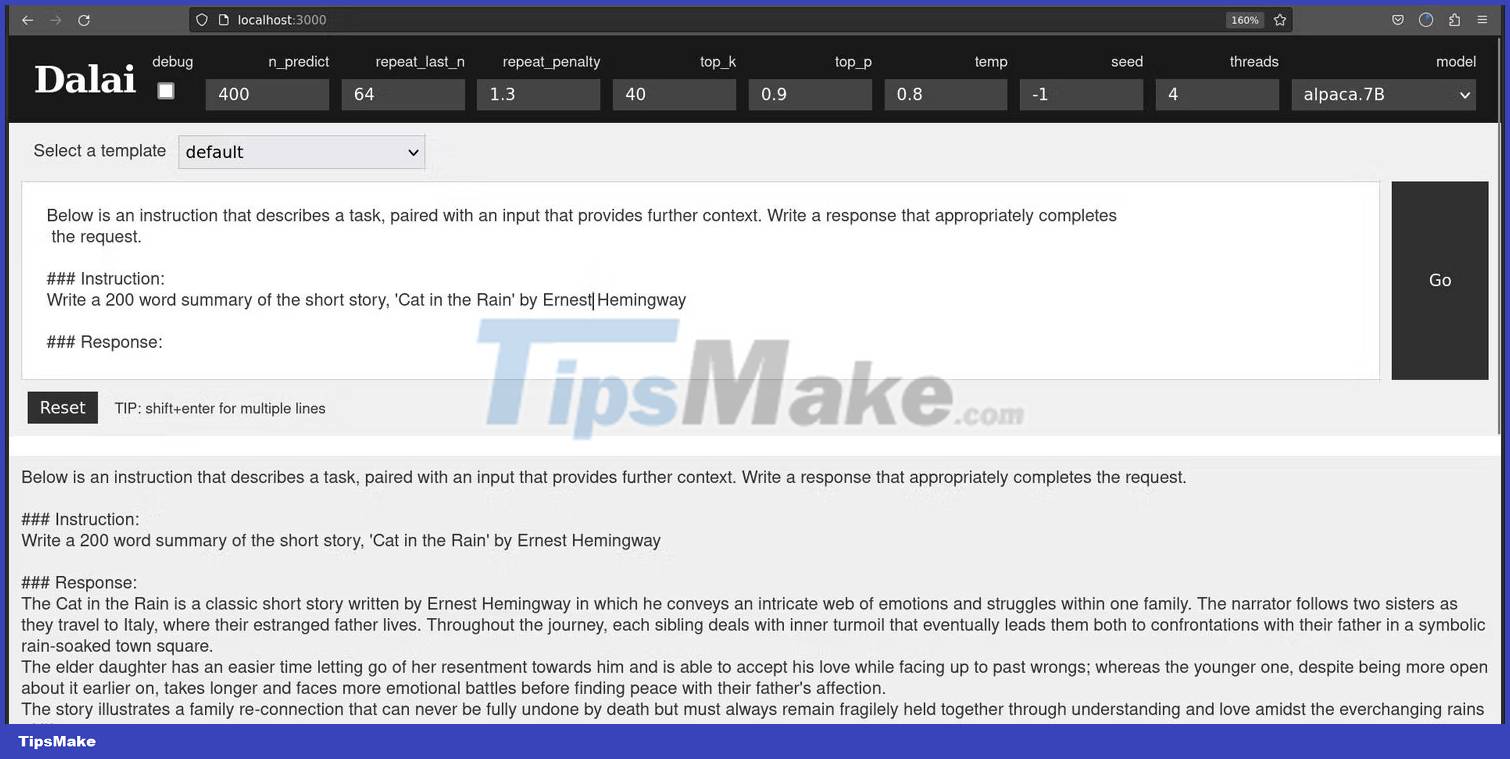

When the web interface opens, you'll see a text box in which you can write your prompt.

Writing effective prompts is difficult, so Dalai's developers have provided a useful range of templates to help you get helpful responses from Dalai.

These are AI-Dialog, Chatbot, Default, Instruction, Rewrite, Translate, and Tweet-sentiment.

As you would expect, the AI-Dialog and Chatbot templates are structured so that you can hold a conversation with the LLM. The main difference between the two is that the chatbot is described as "very intelligent", while the AI-Dialog assistant is "helpful, good, obedient, honest, and knows its own limits". Of course, this is your AI, so you can change the prompt however you like.

The author tested the Translate template by copying the opening paragraph of a BBC news story and asking Dalai to translate it into Spanish. The translation was very good: when run back through Google Translate into English, the result was quite readable and accurately reproduced the facts and tone of the original.

Likewise, the Rewrite template convincingly reworked the text into the opening of a new article.

The Default and Instruction prompts are structured to help you ask questions or give Dalai direct instructions.

The accuracy of Dalai's responses will vary greatly depending on the model you're using. A 30B model will be a lot more useful than a 7B one. But even then, you'll be reminded that LLMs are simply sophisticated systems for guessing the next word in a sentence.
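To make the "guessing the next word" idea concrete, here is a deliberately crude illustration: it counts which word most often follows a given word in a text file and returns that one. This is a simple bigram counter in POSIX shell and awk, not how LLaMA or Alpaca work internally (they use neural networks over tokens and take far more context into account), and `predict_next` is a hypothetical name.

```shell
#!/bin/sh
# Toy illustration only (hypothetical helper): predict the word that most
# often follows $1 in file $2, using word-pair (bigram) counts.
predict_next() {
  word=$1
  file=$2
  # One lowercase word per line, then count what follows the target word.
  tr -s '[:space:]' '\n' < "$file" | tr '[:upper:]' '[:lower:]' |
    awk -v w="$word" '
      prev == tolower(w) { count[$0]++ }
      { prev = $0 }
      END {
        best = ""; max = 0
        # Highest count wins; ties go to the alphabetically first word.
        for (cand in count)
          if (count[cand] > max || (count[cand] == max && cand < best)) {
            best = cand; max = count[cand]
          }
        if (best == "") exit 1
        print best
      }'
}
```

Given a file containing "the cat sat on the mat the cat ate", `predict_next the file` prints `cat`, because "cat" follows "the" twice and "mat" only once. An LLM makes the same kind of guess, only with vastly more context and learned statistics.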

Neither the Alpaca 7B nor the 13B model produced an accurate 200-word synopsis of Ernest Hemingway's short story "Cat in the Rain", and both invented convincing plot lines and details about the story's content.

By running a large language model on your own Linux machine, you aren't subject to monitoring or the risk of losing access to the service. You can use it however you see fit without fear of consequences for violating a provider's content policy.

If your computing resources are limited, you can even run an LLM locally on a humble Raspberry Pi.
