Google launches TensorFlow Lite 1.0 for mobile and embedded devices

Google today officially introduced TensorFlow Lite 1.0, a framework for developers to deploy AI models on mobile and IoT devices. Major improvements include selective registration and quantization both during and after training, which yield smaller, faster models. Quantization has compressed some AI models by up to 4 times.
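To make the "up to 4 times" figure concrete, here is a minimal sketch of 8-bit affine quantization in plain Python. It is not TensorFlow Lite's actual implementation. The function names (`quantize_int8`, `dequantize`) and the sample weights are made up for illustration. The point is simply that storing each weight as a 1-byte integer instead of a 4-byte float shrinks the weight data by a factor of 4, at the cost of a small rounding error.

```python
from array import array


def quantize_int8(weights):
    """Map floats to int8 values plus a (scale, zero_point) pair (hypothetical helper)."""
    # Widen the range to include 0.0 so that zero stays exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # One int8 step covers `scale` units of the float range; fall back to 1.0 if all weights are 0.
    scale = (w_max - w_min) / 255.0 or 1.0
    zero_point = round(-128 - w_min / scale)
    # Round each weight to the nearest step and clamp into the int8 range.
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return array("b", q), scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float values from the int8 representation."""
    return [(v - zero_point) * scale for v in q]


# Made-up example weights, stored as 32-bit floats (4 bytes each).
weights = [0.1 * i - 1.0 for i in range(32)]
float_weights = array("f", weights)

q, scale, zero_point = quantize_int8(weights)

float_size = len(float_weights) * float_weights.itemsize
int8_size = len(q) * q.itemsize
ratio = float_size / int8_size

restored = dequantize(q, scale, zero_point)
max_err = max(abs(a - b) for a, b in zip(weights, restored))

print(f"float32: {float_size} bytes, int8: {int8_size} bytes, ratio: {ratio}")
print(f"largest rounding error: {max_err:.5f} (one step is {scale:.5f})")
```

In a real model the scale and zero point are stored once per tensor (or per channel), so their overhead is negligible next to the weights themselves.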

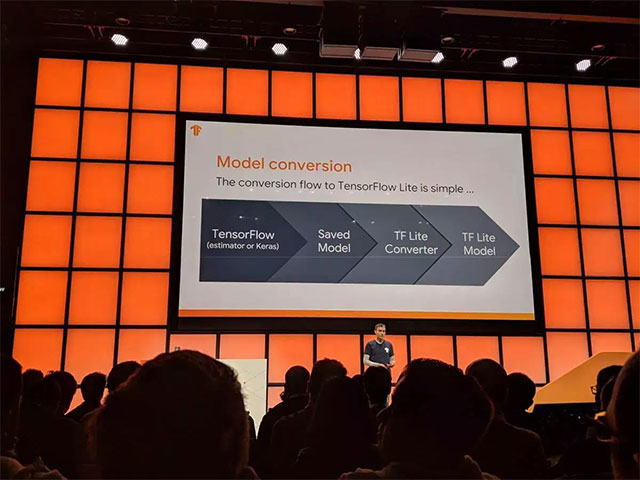

The Lite workflow starts with training an AI model in TensorFlow, which is then converted into a Lite model that can run on mobile devices. Lite was first introduced at the I/O developer conference in May 2017, and a developer preview was released later that year.

The team responsible for TensorFlow Lite at Google also presented the platform's development roadmap today. The main focus is on making AI models smaller and faster, especially for Android developers using neural networks, as well as on Keras-based connectors and additional quantization improvements.

Other planned changes include:

- Support for control flow, which is essential for models such as recurrent neural networks.

- Optimized CPU performance for Lite models, potentially involving partnerships with other companies.

- Expanded GPU operation coverage and an improved API that is ready for general use.

A TensorFlow 2.0 model converter for creating Lite models will be made available to help developers better understand what can go wrong during conversion and how to fix it. TensorFlow Lite is currently deployed on more than 2 billion devices worldwide, according to Raziel Alvarez, who spoke on stage at the TensorFlow Dev Summit. The steady progress of TensorFlow Lite has also made TensorFlow Mobile increasingly obsolete.

Over time, a series of techniques have emerged that reduce the size of AI models and optimize them for use on mobile devices, such as quantization and delegates (a layer for executing graphs on different hardware to improve inference speed).

In addition, mobile GPU acceleration for some devices was made available in a developer preview in January. It can run models 2 to 7 times faster than a floating-point CPU. Edge TPU delegates can run models up to 64 times faster than a floating-point CPU.

Going forward, Google plans to make GPU delegates generally available, expand their operation coverage, and improve the API.

Several of Google's own apps and services use TensorFlow Lite, including GBoard, Google Photos, AutoML, and Nest. All computation for CPU models when Google Assistant needs to respond to queries offline is currently handled by Lite. In addition, Lite can also run on devices like the new Raspberry Pi and Coral Dev Board.

Besides TensorFlow Lite, Google today also introduced the alpha release of TensorFlow 2.0, which aims for a simpler user experience, along with TensorFlow.js 1.0 and version 0.2 of TensorFlow for developers who write code in Apple's Swift programming language. TensorFlow Federated and TensorFlow Privacy were also released earlier today.

Lite support for Core ML, Apple's machine learning framework, was introduced in December 2017. Custom TensorFlow Lite models can also work with ML Kit, a quick way for developers to create mobile AI models, which was introduced last year for Android and iOS developers using Firebase.
