What is Apple's R1 chip? How does the R1 chip compare to the M1 and M2?

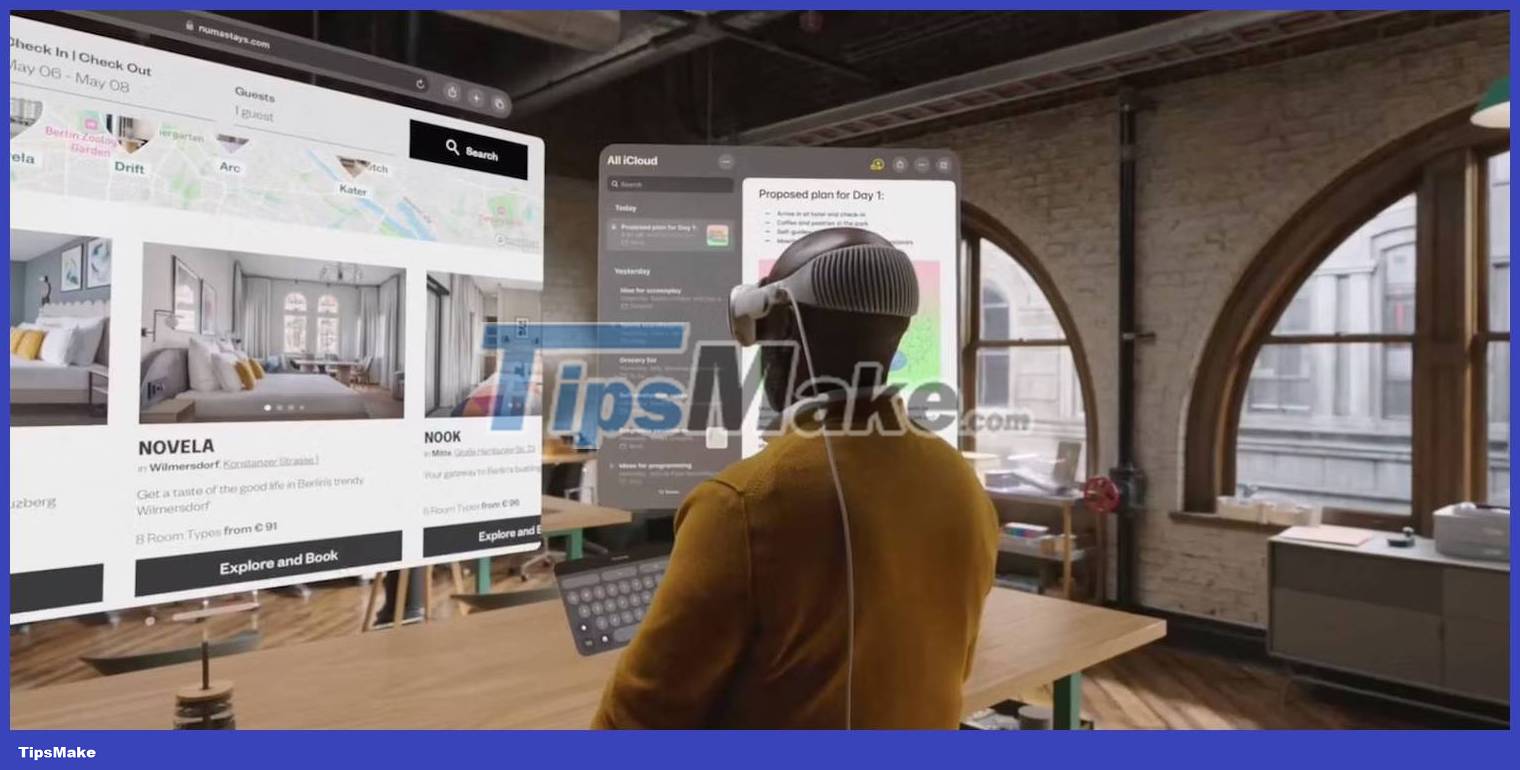

Vision Pro brings Apple's new silicon, the R1 chip, which processes real-time data from all the onboard sensors. It handles eye, hand and head tracking, the lag-free display of the user's surroundings in video passthrough mode, and other visionOS features.

R1 takes this processing burden off the main M2 processor, improving overall performance. Let's explore how the Apple R1 chip works, how it compares with the M1 and M2 chips, and which Vision Pro features it supports.

What is Apple's R1 chip? How does it work?

Apple R1 processes a continuous stream of real-time data from Vision Pro's 12 cameras, five sensors and six microphones.

Two main external cameras capture your surroundings, pushing more than a billion pixels per second to the headset's 4K display. Additionally, a pair of side cameras, along with two downward-facing cameras and two infrared illuminators, track hand movements from a variety of positions, even in low light.
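To put "more than a billion pixels per second" in perspective, the short Python sketch below divides that figure by a few refresh rates to get an average pixel count per frame. The refresh rates are illustrative assumptions, not published Vision Pro specifications, and the function name is hypothetical.

```python
# Rough arithmetic only. The refresh rates below are illustrative assumptions,
# not official Vision Pro specifications.

PIXELS_PER_SECOND = 1_000_000_000  # lower bound quoted in the article


def pixels_per_frame(pixels_per_second: int, refresh_hz: float) -> float:
    """Average number of pixels that must be delivered for each displayed frame."""
    return pixels_per_second / refresh_hz


if __name__ == "__main__":
    for hz in (90, 96, 100):  # hypothetical refresh rates for comparison
        millions = pixels_per_frame(PIXELS_PER_SECOND, hz) / 1e6
        print(f"{hz} Hz -> {millions:.1f} million pixels per frame")
```

At 90 Hz, for example, a billion pixels per second works out to roughly 11.1 million pixels for every frame.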

Outward-facing sensors also include a LiDAR Scanner and Apple's TrueDepth camera, which capture a depth map of your surroundings so that Vision Pro can accurately place digital objects in your space. On the inside, a ring of LEDs around each display and two infrared cameras track your eye movements, which form the basis of navigation in visionOS.

R1 is tasked with processing data from all of those sensors, including the inertial measurement units (IMUs), with imperceptible latency. This is essential for making the spatial experience smooth and reliable.
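Apple has not described how the R1 combines these inputs. A common general-purpose way to fuse a fast but drifting IMU with a slower, drift-free reference such as camera tracking is the complementary filter; the Python sketch below applies that technique to a single head-rotation angle. It illustrates sensor fusion in general, not R1's actual algorithm, and the class name, parameters and sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ComplementaryFilter:
    """Hypothetical one-axis fusion of an IMU gyro with a camera-based angle.

    An alpha close to 1.0 trusts the gyro for fast, short-term motion, while the
    remaining (1 - alpha) weight keeps pulling the estimate toward the camera
    reading, which cancels the drift that pure gyro integration accumulates.
    """
    alpha: float = 0.98
    angle_deg: float = 0.0

    def update(self, gyro_rate_dps: float, dt_s: float, camera_angle_deg: float) -> float:
        # Predict the new angle by integrating the gyro's angular rate.
        predicted = self.angle_deg + gyro_rate_dps * dt_s
        # Blend the prediction with the camera's absolute (drift-free) measurement.
        self.angle_deg = self.alpha * predicted + (1.0 - self.alpha) * camera_angle_deg
        return self.angle_deg


if __name__ == "__main__":
    # Head held still at 0 degrees, but the gyro has a 1 deg/s bias (assumed values).
    dt = 0.01  # 100 Hz sample period, chosen for illustration
    fused = ComplementaryFilter()
    gyro_only = 0.0
    for _ in range(1000):  # 10 seconds of samples
        gyro_only += 1.0 * dt
        fused.update(gyro_rate_dps=1.0, dt_s=dt, camera_angle_deg=0.0)
    print(f"gyro only: {gyro_only:.2f} deg, fused: {fused.angle_deg:.2f} deg")
```

In this run the gyro-only estimate drifts to about 10 degrees over ten seconds, while the fused estimate settles just under 0.5 degrees.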

Compare Apple R1 with M1 and M2

M1 and M2 are general-purpose processors optimized for Mac computers. The R1, by contrast, is a narrowly focused coprocessor designed to support smooth AR experiences. It handles its specialized tasks faster than the M1 or M2 could, which is what makes the lag-free experience possible.

Note: Apple did not specify how many CPU and GPU cores the R1 has, nor did it disclose its CPU clock speed or RAM frequency, which makes direct comparisons between the R1, M1 and M2 difficult.

The R1's main domains are eye and head tracking, hand gestures, and real-time 3D mapping with the LiDAR sensor. Offloading these compute-intensive operations allows the M2 to run visionOS's various subsystems, algorithms and applications efficiently.
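Conceptually, this division of labor resembles a producer/consumer pipeline: one worker turns raw sensor samples into ready-made poses, and the other only consumes the finished results. The Python sketch below models that idea with a thread and a queue. It is a simplified analogy, not a description of how the R1 and M2 actually communicate (Apple has not detailed that), and all names are hypothetical.

```python
import queue
import threading
from typing import List, Optional


def tracking_coprocessor(frames: int, out: queue.Queue) -> None:
    """Stand-in for a dedicated sensor chip: emits ready-to-use tracking results."""
    for i in range(frames):
        # Placeholder pose; real eye, hand and head tracking is far more involved.
        out.put({"frame": i, "head_yaw_deg": 0.5 * i})
    out.put(None)  # sentinel: no more frames


def main_processor(inp: queue.Queue) -> List[str]:
    """Stand-in for the main chip: uses finished poses to render app content."""
    rendered: List[str] = []
    while True:
        pose: Optional[dict] = inp.get()
        if pose is None:
            break
        rendered.append(f"frame {pose['frame']}: render at yaw {pose['head_yaw_deg']:.1f} deg")
    return rendered


def run_pipeline(frames: int) -> List[str]:
    """Run both stand-ins concurrently and return what the 'main chip' rendered."""
    poses: queue.Queue = queue.Queue()
    worker = threading.Thread(target=tracking_coprocessor, args=(frames, poses))
    worker.start()
    result = main_processor(poses)
    worker.join()
    return result


if __name__ == "__main__":
    for line in run_pipeline(3):
        print(line)
```

The point of the design is that the consumer never performs tracking itself; it simply waits for the next finished pose, leaving its own time free for application work.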

Key features in Vision Pro's R1 chip

R1 has the following key capabilities:

- Fast processing: Dedicated algorithms and image signal processing in the R1 are optimized for interpreting sensor, camera and microphone inputs.

- Low latency: A hardware architecture optimized for these specific workloads keeps latency very low.

- Power efficiency: R1 handles a specific set of tasks while using minimal power, thanks to an efficient memory architecture and TSMC's 5nm manufacturing process.

On the downside, the Vision Pro's dual-chip design and the R1's sophistication contribute to the headset's high price and its two-hour battery life.

What benefits does R1 bring to Vision Pro?

The R1 enables precise eye and hand tracking, which serve as Vision Pro's primary input methods. For example, to navigate visionOS, you direct your gaze to buttons and other elements.

Vision Pro relies on hand gestures to select items, scroll and so on. The sophistication and precision of its eye and hand tracking allowed Apple's engineers to build a mixed reality headset that needs no physical controllers.

The R1's precise tracking and minimal latency enable additional features, such as typing on a virtual keyboard. The R1 also provides reliable head tracking, which is crucial for building an accurate spatial picture of the user's surroundings. Again, precision is key here: you want every AR object to stay in place no matter how you tilt and turn your head.
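To keep a virtual object fixed in the room, a renderer has to re-express the object's world position relative to the current head pose on every frame; if the head angle fed into that transform is stale or imprecise, the object appears to slide. The Python sketch below is a simplified, yaw-only (top-down, 2D) illustration of that transform with hypothetical names and values, not visionOS code.

```python
import math
from typing import Tuple


def world_to_head(point_xz: Tuple[float, float], head_yaw_deg: float) -> Tuple[float, float]:
    """Return a world-fixed point's position relative to the user's head.

    Top-down 2D view: x points right, z points forward, and a positive yaw means
    the head has turned left (counterclockwise). Turning the head by +yaw is the
    same as rotating the world by -yaw, so the object shifts the other way in view.
    """
    x, z = point_xz
    theta = math.radians(-head_yaw_deg)
    return (x * math.cos(theta) - z * math.sin(theta),
            x * math.sin(theta) + z * math.cos(theta))


if __name__ == "__main__":
    anchor = (0.0, 2.0)  # virtual object 2 m straight ahead at startup (assumed)
    for yaw in (0.0, 30.0, 90.0):
        hx, hz = world_to_head(anchor, yaw)
        print(f"yaw {yaw:>4.0f} deg -> object at x={hx:+.2f} m, z={hz:+.2f} m in head space")
```

Turning left by 90 degrees moves an object that started straight ahead to the user's right (x = +2.00 m), which is exactly where a real object would appear.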

Spatial awareness also contributes to the experience. The R1 takes depth data from the LiDAR sensor and TrueDepth camera and performs real-time 3D mapping. This depth information lets the headset understand its environment, such as walls and furniture.

This, in turn, is important for AR persistence, meaning that virtual objects stay fixed in place. It also lets Vision Pro warn users before they bump into objects, reducing the risk of accidents in AR applications.
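As a simplified illustration of that kind of warning, the Python sketch below checks a set of depth readings against a distance threshold. The 0.5 m threshold, the function name and the convention that a zero reading means "no return" are all assumptions for illustration; Apple has not published how Vision Pro decides when to alert the user.

```python
from typing import Iterable

WARNING_DISTANCE_M = 0.5  # hypothetical threshold, not an Apple-published value


def should_warn(depth_samples_m: Iterable[float], threshold_m: float = WARNING_DISTANCE_M) -> bool:
    """Return True if any valid depth reading is closer than threshold_m.

    Readings of 0 or below are treated as "no return" (assumed convention)
    and ignored, so missing data never triggers a false alarm.
    """
    valid = [d for d in depth_samples_m if d > 0.0]
    return bool(valid) and min(valid) < threshold_m


if __name__ == "__main__":
    print(should_warn([2.4, 1.1, 0.0, 3.0]))  # nearest valid reading is 1.1 m -> False
    print(should_warn([2.4, 0.35, 1.8]))      # a surface 0.35 m away -> True
```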

How does the R1's sensor fusion reduce AR motion sickness?

The Vision Pro's dual-chip design offloads sensor processing from the main M2 chip, which runs applications and the visionOS operating system.

According to Apple's Vision Pro press release, the R1 streams images from the external cameras to the internal displays within 12 milliseconds, eight times faster than the blink of an eye, minimizing latency.

Latency refers to the time difference between what the camera sees and the image displayed on the headset's 4K screen. The shorter the delay, the better.
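Put simply, latency is the difference between two timestamps: when a frame is captured and when it appears on screen. The Python sketch below computes it and also works through the press release's comparison, where 12 ms being eight times faster than a blink implies a blink of roughly 96 ms. The timestamps are made-up values and the function name is hypothetical.

```python
PASSTHROUGH_LATENCY_MS = 12.0  # figure stated in Apple's press release
SPEEDUP_VS_BLINK = 8           # "eight times faster than the blink of an eye"


def latency_ms(capture_time_s: float, display_time_s: float) -> float:
    """Time between a camera capturing a frame and that frame being displayed."""
    if display_time_s < capture_time_s:
        raise ValueError("a frame cannot be displayed before it is captured")
    return (display_time_s - capture_time_s) * 1000.0


if __name__ == "__main__":
    # Hypothetical timestamps, in seconds, from a shared clock.
    print(f"measured latency: {latency_ms(5.000, 5.012):.1f} ms")
    implied_blink_ms = PASSTHROUGH_LATENCY_MS * SPEEDUP_VS_BLINK
    print(f"implied blink duration: {implied_blink_ms:.0f} ms")
```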

Motion sickness occurs when the input your brain receives from your eyes doesn't match what your inner ear senses, for example when the image lags noticeably behind your head movements. It can happen in many situations, including on amusement park rides, on boats and yachts, and while using VR equipment.

VR can make people sick because of this sensory conflict, causing motion sickness symptoms such as disorientation, nausea, dizziness, headaches, eyestrain, sweating and vomiting.

VR can also harm your eyes by causing eye strain, with symptoms including sore, itchy or watery eyes, headaches and neck pain. Some people may feel one or more of these symptoms for several hours after removing the headset.

As a rule of thumb, VR devices must refresh the screen at least 90 times per second (90 Hz) and keep latency below 20 milliseconds to avoid causing motion sickness.
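This rule of thumb translates into a simple frame-time budget: at 90 Hz, each frame stays on screen for about 11.1 ms. The Python sketch below encodes the two thresholds from this section and checks the R1's stated 12 ms latency against them. The refresh rates passed in the usage lines are assumptions for illustration, and the function names are hypothetical.

```python
MIN_REFRESH_HZ = 90.0  # rule-of-thumb minimum refresh rate
MAX_LATENCY_MS = 20.0  # rule-of-thumb maximum latency


def frame_time_ms(refresh_hz: float) -> float:
    """How long each frame stays on screen at a given refresh rate."""
    return 1000.0 / refresh_hz


def meets_rule_of_thumb(refresh_hz: float, latency_ms: float) -> bool:
    """True only if both the refresh-rate and latency guidelines are satisfied."""
    return refresh_hz >= MIN_REFRESH_HZ and latency_ms < MAX_LATENCY_MS


if __name__ == "__main__":
    print(f"frame time at 90 Hz: {frame_time_ms(90.0):.1f} ms")
    print(meets_rule_of_thumb(refresh_hz=90.0, latency_ms=12.0))  # R1's stated latency -> True
    print(meets_rule_of_thumb(refresh_hz=60.0, latency_ms=12.0))  # too few refreshes -> False
```

Note that a latency below 20 ms can still span more than one 90 Hz frame (12 ms is slightly longer than 11.1 ms); the rule of thumb treats refresh rate and latency as separate requirements.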

With a stated latency of just 12 milliseconds, the R1 keeps latency well below that threshold. Although the R1 helps minimize motion sickness, some Vision Pro testers reported symptoms after wearing the headset for more than 30 minutes.
