How to use Gemini 1.5 Flash for free

Gemini 1.5 Flash is an impressively fast and efficient model, offering multimodal capabilities and a context window of up to 1 million tokens (2 million via waitlist).

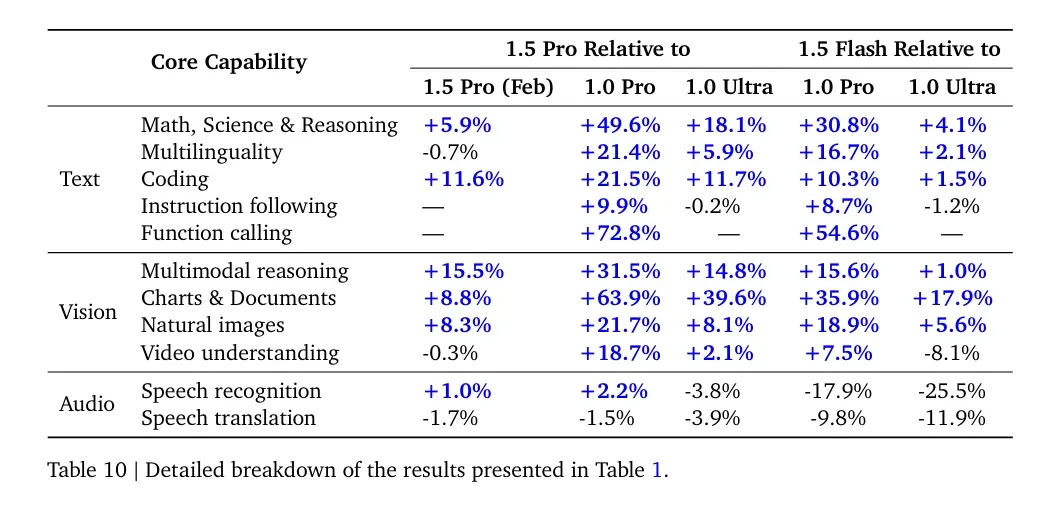

Although Gemini 1.5 Flash is small (Google hasn't revealed its size), it scores well across all modalities: text, image and audio. In the Gemini 1.5 technical report, Google showed that Gemini 1.5 Flash outperforms larger models like 1.0 Ultra and 1.0 Pro in many areas. It lags behind larger models only in speech recognition and translation.

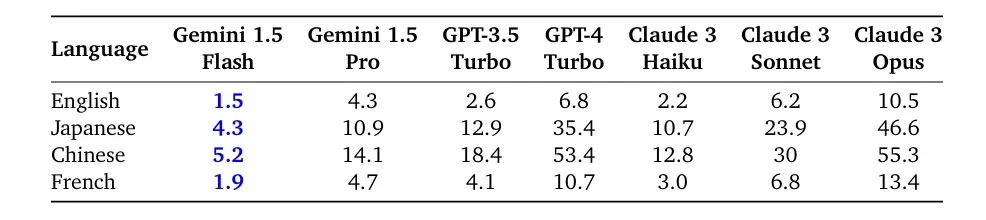

Unlike Gemini 1.5 Pro, which is a MoE (Mixture of Experts) model, Gemini 1.5 Flash is a dense model, distilled online from the larger 1.5 Pro model for improved quality. In terms of speed, the Flash model, which runs on Google's custom TPUs, outperforms all the other small models available, including Claude 3 Haiku.

Its price is also remarkably low. Gemini 1.5 Flash costs $0.35 for input and $0.53 for output per 1 million tokens for prompts of up to 128K tokens, and $0.70 and $1.05 per 1 million tokens for longer prompts. That makes it much cheaper than Llama 3 70B, Mistral Medium, GPT-3.5 Turbo and, of course, the larger models.
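To get a feel for what these prices mean per request, here is a rough estimator. It is only a sketch: it assumes the quoted figures are rates per 1 million tokens, with the lower pair applying to prompts of up to 128K tokens and the higher pair to longer prompts. The function and tier names are made up for illustration.

```python
# Prices in USD per 1 million tokens, taken from the figures quoted above.
# Assumption: the lower rates apply to prompts of up to 128K tokens and
# the higher rates to longer prompts.
PRICES = {
    "short": {"input": 0.35, "output": 0.53},
    "long": {"input": 0.70, "output": 1.05},
}
TIER_LIMIT = 128_000  # assumption: "128K" is read as 128,000 tokens


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of a single request."""
    # The tier is chosen by the size of the prompt (the input side).
    tier = "short" if input_tokens <= TIER_LIMIT else "long"
    rates = PRICES[tier]
    total = input_tokens * rates["input"] + output_tokens * rates["output"]
    return total / 1_000_000


# A 100K-token prompt and a 500K-token prompt, each with a 2K-token reply.
print(f"${estimate_cost(100_000, 2_000):.4f}")
print(f"${estimate_cost(500_000, 2_000):.4f}")
```

Under these assumptions, even a prompt that fills half of the 1 million token window costs well under a dollar.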

If you're a developer and need multimodal inference with a larger context window on the cheap, you should definitely take a look at the Flash model. Here's how you can try Gemini 1.5 Flash for free.

How to use Gemini 1.5 Flash for free

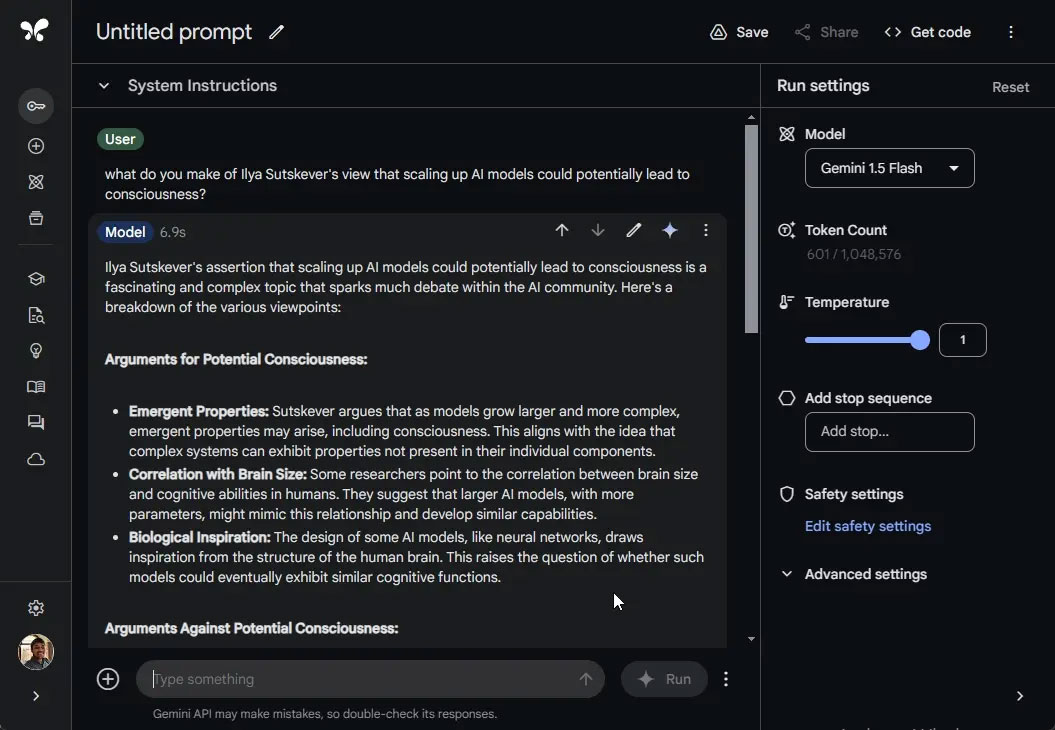

1. Go to aistudio.google.com and sign in with your Google account. There is no waiting list to use the Flash model.

2. Next, select the 'Gemini 1.5 Flash' model in the drop-down menu.

3. Now you can start chatting with the Flash model. You can also upload images, videos, audio clips, files and folders.
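Developers who prefer code to the chat window can send the same kind of prompt over HTTP. The sketch below is not an official client. It assumes you have created an API key in AI Studio, and that the public Gemini REST endpoint, the `gemini-1.5-flash` model name and the response layout shown here are still current, so check the official API reference before relying on them. The helper names `build_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumption: endpoint pattern of the public Gemini REST API; it may change.
API_URL = (
    "https://generativelanguage.googleapis.com/v1beta/"
    "models/{model}:generateContent"
)


def build_request(
    prompt: str, api_key: str, model: str = "gemini-1.5-flash"
) -> urllib.request.Request:
    """Build (but do not send) a text-only generateContent request."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        API_URL.format(model=model),
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,  # key created in AI Studio (assumed)
        },
        method="POST",
    )


def ask(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first candidate reply."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        reply = json.load(resp)
    # Assumed response layout: candidates -> content -> parts -> text.
    return reply["candidates"][0]["content"]["parts"][0]["text"]
```

Calling `ask("Summarize this article in one sentence.", api_key)` with a valid key should return the model's reply as a string. Building the request separately from sending it makes it easy to inspect the URL and JSON body before anything goes over the network.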

First impressions of Gemini 1.5 Flash

Although Gemini 1.5 Flash is not a state-of-the-art model, its advantages are breakneck speed, efficiency and low cost. In capabilities, it ranks below Gemini 1.5 Pro and other larger models from OpenAI and Anthropic. Even so, the author tried it on some of the reasoning prompts previously used to compare ChatGPT 4o and Gemini 1.5 Pro.

It answered only one of the 5 questions correctly. It may not be very strong at commonsense reasoning, but for applications that need multimodal capabilities and a large context window, it may still suit your use case. Additionally, Gemini models are good at creative tasks, which can bring value to developers and users.

Simply put, no other AI model is this fast, efficient and multimodal while also offering a large context window with near-perfect recall. Best of all, it's incredibly cheap.

What do you think of Google's latest Flash model? Share your opinion in the comments section below!