Google Panda - Algorithm for real rankings?

TipsMake.com - Google recently updated an important component of its website ranking algorithm. The change affected 12% of search results and reduced the number of visits to many sites.

Known as the Farmer or Panda update, it currently affects only Google US search results, but site owners outside the US should be prepared because it will soon be rolled out more widely. Here's how to find out whether your website has been affected and what you can do about it.

Has your business website lost 50% of its traffic from Google search (excluding ads from Google AdWords)? The cause may be Google's Panda algorithm update.

Has your website been touched by Panda?

At the time this article was written, Panda only affected results in the US. Here's how to use Google Analytics (GA) to see whether your site is affected.

If your website gets most of its search engine traffic from the US market, you probably already know whether you have been affected. This tutorial shows how to view the damage in detail and how to analyze where the problem may lie.

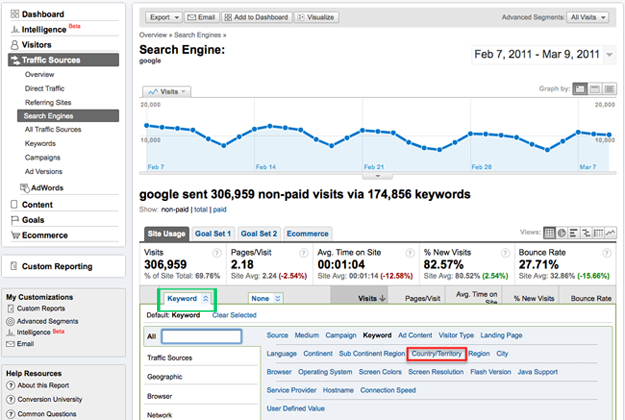

First, go to the GA dashboard. If the site is not affected, you will see a chart similar to the one in the screenshot and can rest assured that everything is fine.

However, it is worth digging deeper. Go to the Search Engines report in the Traffic Sources menu (and choose 'non-paid'):

Then click 'Google' to see only Google traffic.

Click the 'Keyword' heading at the top of the list of keywords (highlighted in blue below). A large submenu appears; click 'Country / Territory':

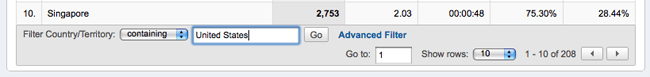

Enter 'United States' in the filter field at the bottom of the list of countries.

Press 'Go' and pray that you do not see anything like the picture below:

More than 50% of the non-AdWords traffic from Google US has been lost.
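The size of the drop can also be checked with simple arithmetic once you have visit counts for a period before and after the update. The sketch below is only an illustration: the function name `percent_change` and the visit counts are assumptions made up for the example, not figures from the site discussed here.

```python
def percent_change(before: int, after: int) -> float:
    """Return the percentage change from `before` visits to `after` visits."""
    if before <= 0:
        raise ValueError("`before` must be a positive visit count")
    return (after - before) * 100.0 / before


# Hypothetical weekly non-paid visits from Google US, before and after the update.
visits_before = 12000
visits_after = 5400

change = percent_change(visits_before, visits_after)
print(f"Change in Google US non-paid visits: {change:.1f}%")

# A loss of half the traffic or more matches the symptom described in this article.
if change <= -50.0:
    print("Traffic has more than halved; Panda may be involved.")
```

Comparing equal-length periods (for example, the same weekdays before and after the update) keeps the comparison fair.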

Use Advanced Segments to see organic traffic from Google US

Using Advanced Segments in GA lets you analyze what is happening in more depth.

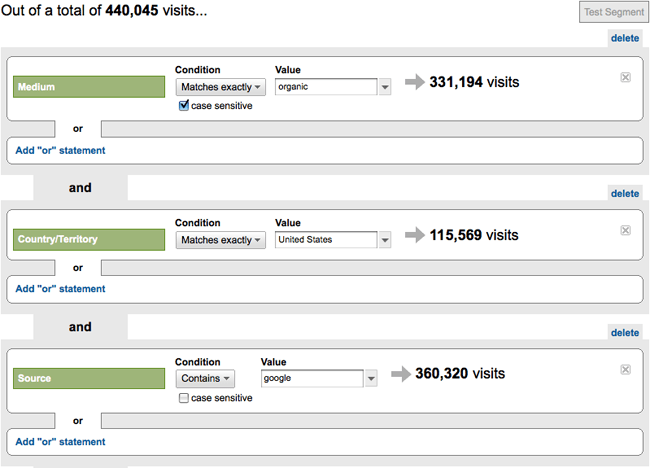

Select 'Advanced Segments' from the left menu and then select 'Create new custom segment'.

Configure the following parameters:

'Medium' Matches exactly 'organic'

AND

'Country / Territory' Matches exactly 'United States'

AND

'Source' Contains 'google'

When finished, the segment will look like this:

You can name this segment 'GoogleUS organic'.

Apply this segment to GA's reports and all the data you see will cover only visitors arriving from Google US organic search. This lets you see which pages Panda has treated best and worst.
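To make the logic of the segment concrete, here is a minimal sketch that applies the same three conditions to visit records outside GA. The record layout, the field names (`source`, `medium`, `country`, `landing_page`) and the sample rows are assumptions for illustration; they are not a GA export format.

```python
from typing import Dict, List

Row = Dict[str, str]


def in_google_us_organic(row: Row) -> bool:
    """Mirror the three segment conditions joined by AND."""
    return (
        row["medium"] == "organic"              # 'Medium' Matches exactly 'organic'
        and row["country"] == "United States"   # 'Country / Territory' Matches exactly
        and "google" in row["source"]           # 'Source' Contains 'google'
    )


# Hypothetical visit records.
visits: List[Row] = [
    {"source": "google", "medium": "organic", "country": "United States", "landing_page": "/articles/a"},
    {"source": "google", "medium": "cpc", "country": "United States", "landing_page": "/articles/a"},
    {"source": "google", "medium": "organic", "country": "United Kingdom", "landing_page": "/forum/1"},
    {"source": "bing", "medium": "organic", "country": "United States", "landing_page": "/forum/2"},
]

segment = [v for v in visits if in_google_us_organic(v)]
print(len(segment), "of", len(visits), "visits are in the segment")
```

Only the first record passes all three tests: the second is paid traffic, the third comes from outside the US, and the fourth is not from Google.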

What is Google planning to do?

Panda's goal is ambitious: to remove poor-quality pages from the top of Google's results. Or, as Matt Cutts, Google's spam expert, put it in a blog post about Panda: 'This update is designed to reduce rankings for low-quality sites: sites that add little value for users, copy content from other websites, or are simply not very useful. At the same time, it will provide better rankings for high-quality sites: sites with original content and information such as research, in-depth reports, in-depth analysis and so on.'

The last thing Google wants is for searchers to become increasingly unhappy with what they find. If that went on, they might try another search engine.

However, every major Google update causes collateral damage: pages that were not the target are affected too. Google is aware of this and has asked the owners of high-quality sites that have been hit to let it know.

The website used as an example in this article is a high-quality site that was nevertheless affected by Panda. Its main content is in-depth research articles written by experts, together with a forum where users can ask questions and receive answers (Questions & Answers).

Maybe the Q&A pages are the problem (Google may regard them as 'weak' content). However, we later found two similar sites in other markets that were affected even though they have no Q&A forum. Clearly, finding out why innocent sites get hit is not easy.

What factors make a page affected by Panda?

Google loves keeping secrets, but two employees who worked on Panda, Matt Cutts and Amit Singhal, gave us some key clues in an interview with Wired.

We have summarized them as follows:

• Conduct qualitative research (asking individuals directly, using only short questions) to find out which websites are low quality and why.

• Describe the sites identified as poor quality in terms of factors that Google can measure. This gives Google a more precise definition of low quality.

From here, let's think about some of the factors that Google could measure:

• A high proportion of duplicate content (for example, the same content published on several domains).

• A low amount of original content on the site or on individual pages.

• Many pages with a low percentage of original content.

• A particularly high number of inappropriate keywords on the page (ones that do not match the search queries).

• Page content and page title that do not match the search keywords.

• Too much unnatural language on a page, used to boost SEO.

• A high bounce rate.

• A low number of pageviews for the page or site.

• A low percentage of returning users.

• A low click-through rate from Google's results pages (for the page or the site).

• A high percentage of boilerplate content (the same on every page).

• Few or low-quality inbound links to the page or site.

• Few or no links to the page or site from social networking sites or other sites.

It probably takes several of these factors together to earn 'Panda points' (and in this game, points do not mean prizes). Panda points are added up. Cross a threshold (the Panda Line) and all the pages on your site are affected. This includes original pages, which may end up ranked below the sites that copied their content.

Google has said that 'low quality content on a part of a site can affect that site's entire ranking.'

Panda is an algorithm change, but not an ordinary one. It works like a penalty: if your site ends up on the wrong side of the Panda Line, the entire site is affected, including its quality pages.

Is a Panda Slap applied to the entire site or only at the page level?

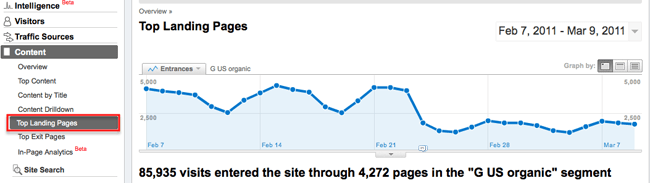

If a Panda Slap is applied site-wide, every page should lose Google organic traffic. On the test site, we used the 'GoogleUS organic' segment to see whether this is true.

Go to Content > Top Landing Pages. See below (remember, with this segment applied we are only looking at traffic from Google organic search in the US market, so there is no need to restrict the GA report beyond 'Landing pages'):

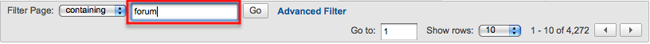

This report lists all 4,272 landing pages. To check if all pages are affected by Panda, you can filter the report:

• Individual pages. Pick a sample and look for exceptions to the drop in traffic shown above.

• Page types defined by a shared string in the URL. For example, forum pages may all contain the string /forum/ in their URL.

Use the filter at the bottom of the report to do this:

We did this for several page types on the site affected by Panda and can say that some pages were hit harder than others, and a few actually did better after Panda.

So Farmer/Panda works, at least to some extent, at the page level.
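The URL-string filter described above can be sketched outside GA as well. In this hedged example, the landing page URLs, the visit counts and the helper name `change_for_urls_containing` are all hypothetical; the point is only to show how grouping pages by a shared URL fragment reveals whether page types were hit differently.

```python
from typing import Dict, Tuple

# Hypothetical data: landing page URL -> (visits before, visits after).
landing_pages: Dict[str, Tuple[int, int]] = {
    "/articles/deep-dive": (900, 300),
    "/articles/guide": (600, 300),
    "/forum/thread-1": (200, 260),
    "/forum/thread-2": (100, 100),
}


def change_for_urls_containing(pages: Dict[str, Tuple[int, int]], fragment: str) -> float:
    """Percentage change in total visits for landing pages whose URL contains `fragment`."""
    matching = [counts for url, counts in pages.items() if fragment in url]
    before = sum(b for b, _ in matching)
    after = sum(a for _, a in matching)
    if before == 0:
        raise ValueError(f"no earlier visits for URLs containing {fragment!r}")
    return (after - before) * 100.0 / before


for fragment in ("/articles/", "/forum/"):
    print(fragment, f"{change_for_urls_containing(landing_pages, fragment):+.1f}%")
```

If the page types show clearly different changes, as in this made-up data, that points to an effect that is not uniform across the site.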

Find out what type of page has been affected on your site

If your site is affected, use filters in GA to find out which pages Panda has hit hardest.

We found that many pages with high-quality, unique, specialized articles (sometimes thousands of words long) were hit harder than the average page. So there is no simple answer. However, these pages do carry more ads than the average page.

Some forum pages saw a significant increase in traffic. These pages have long threads and quite a few ads (including pop-ups), though still fewer than other pages on the site.

On this site, we tried changing some of the ads, in particular blocking ads on the forums.

However, this brought no results, or at least it was not enough, because no change followed.

Is Panda's penalty applied at the keyword level?

To know if Panda applies at the keyword level, you can:

• Find a page that receives traffic from several different keywords.

• See whether Panda has had different effects on the traffic from each of these keywords (for the same page).

If so, we can confirm that Panda is also applied at the keyword level.
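The keyword-level check can be sketched in the same way. Everything here is an assumption for illustration: the keywords, the visit counts, the function name `keyword_changes` and the 25-point threshold are invented, and the threshold in particular is an arbitrary cut-off rather than anything Google has published.

```python
from typing import Dict, Tuple

# Hypothetical data for ONE landing page: keyword -> (visits before, visits after).
keyword_visits: Dict[str, Tuple[int, int]] = {
    "keyword a": (400, 100),
    "keyword b": (200, 50),
    "keyword c": (100, 95),
}


def keyword_changes(visits: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Percentage change in visits per keyword, skipping keywords with no earlier visits."""
    return {
        keyword: (after - before) * 100.0 / before
        for keyword, (before, after) in visits.items()
        if before > 0
    }


changes = keyword_changes(keyword_visits)
for keyword, change in changes.items():
    print(f"{keyword}: {change:+.1f}%")

# Gap, in percentage points, between the best and worst treated keyword.
spread = max(changes.values()) - min(changes.values())

# Arbitrary illustrative threshold, not a published value.
SPREAD_THRESHOLD = 25.0
if spread > SPREAD_THRESHOLD:
    print("Keywords on the same page were affected very differently.")
```

A small spread suggests the whole page was treated alike; a large one suggests some keywords were affected and others were not.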

We have seen some cases where Panda reduced a page's traffic for some keywords but had no effect for others. However, these are all exceptions.

The suggestion that Panda operates at the page and site level was reinforced when we searched Google US for some phrases (in quotes) from an in-depth article that has been indexed for 10 years and has held a prominent place in Google's search results for nearly all of that time. What we saw:

• There are 36 other versions of the article.

• Two versions rank higher than the original.

• One of these is a low-quality copy on a poor-quality site.

• The original page lost 75% of its Google US organic traffic to Panda.

• This traffic comes from over 1,000 different keywords.

What to do if you are affected by Panda

Google suggests:

'Once you know that you have been affected by this change, you should evaluate all the content on your site and try to improve overall quality across the site. Removing low-quality pages or moving them to a new domain can help the rankings of your higher-quality content.'

In more detail:

• Find and single out the most affected pages.

• Find differences between affected and unaffected pages.

• Test changes to the key factors on the affected pages, but use this method with care, because the pages that are most affected may not be the ones that got you penalized.

• Create a list of your different page types, for example forums, quality articles, low-quality articles, quality categories, low-quality categories, products, blog posts and so on. Give each type a row in a spreadsheet and start building a table.

• Add columns for relevant factors, such as 'lots of ads', 'little content', 'partly duplicated', 'fully duplicated' and so on, as well as the number of pages and the % drop in Google US organic traffic. Enter the values for each page type.

• Work out what % of the site's pages are low quality, and improve them.

• If you are pulling in feeds or copying content from other sites, replace it with quality original content, or test removing some (or even all) of those pages.

• If your site has a large number of pages with duplicate content, poor content or almost no content, improve them, remove them or block them from Google with robots.txt.

• If the site has several pages that duplicate other content on the same site, add a rel=canonical tag to the duplicate pages. This helps Google see that these pages are not an attempt to deceive.

• Fix any over-optimized pages.

• Improve anything that can make the user experience better.

• Offer users more when they first land on a page, for example images, videos, or links to your best content.

• If possible, make the language of your pages more accessible and practical.

• Promote your content on social networking sites, including Twitter and Facebook.

• If you are sure your site is clean, let Google know about it, but don't expect too much.
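The page-type spreadsheet suggested in the list above can be started as a CSV file. This is a minimal sketch under stated assumptions: the column names, the page types chosen and every value in `rows` are hypothetical placeholders to be replaced with figures from your own GA reports.

```python
import csv
import io
from typing import Dict, List, Union

Value = Union[str, int, float]

# Hypothetical column names: one row per page type, one column per factor.
FIELDS = [
    "page_type",
    "pages",
    "lots_of_ads",
    "little_content",
    "duplicate_content",
    "traffic_change_pct",
]

# Hypothetical values; replace them with your own counts and percentages.
rows: List[Dict[str, Value]] = [
    {"page_type": "forum", "pages": 1200, "lots_of_ads": "yes",
     "little_content": "yes", "duplicate_content": "no", "traffic_change_pct": 20.0},
    {"page_type": "quality articles", "pages": 300, "lots_of_ads": "yes",
     "little_content": "no", "duplicate_content": "no", "traffic_change_pct": -60.0},
    {"page_type": "low quality articles", "pages": 500, "lots_of_ads": "yes",
     "little_content": "yes", "duplicate_content": "yes", "traffic_change_pct": -70.0},
]


def to_csv(table: List[Dict[str, Value]]) -> str:
    """Serialize the table to CSV text that any spreadsheet program can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(table)
    return buffer.getvalue()


# Sort so the page types with the largest traffic loss come first.
worst_first = sorted(rows, key=lambda row: row["traffic_change_pct"])
print(to_csv(worst_first))
```

Sorting by traffic change puts the worst-hit page types at the top, which makes it easier to see which factors they have in common.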

Make these changes right away (if possible) in the hope of limiting your losses quickly. By improving your content, you can win back what you have lost. And don't forget to check again later in case your traffic has 'crashed' once more.