Virtualization becomes reality

Virtualization is currently a hot field. Many new virtualization platforms have emerged, covering both software and hardware solutions and reaching from processor chips to the whole IT infrastructure. The IT community in general is eager to adopt the technology because of its benefits.

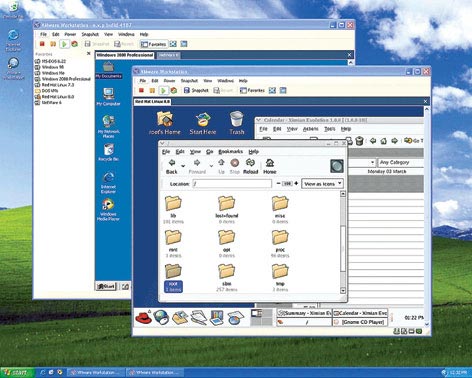

The "virtualization" technique has been part of everyday computing since VMware released its first product, VMware Workstation, in 1999. The product was originally designed to support software development and testing, and it became popular because it can create "virtual computers" that run multiple operating systems (OS) simultaneously on one "real" computer. This differs from "dual-boot" mode, in which several operating systems are installed on a computer and one is selected at startup, so only one OS works at a time.

VMware, acquired by EMC (a storage company) in December 2003, has expanded from the desktop to the server and still holds its dominant position in the virtualization market. That position is not unchallenged, however: VMware must compete with the open-source Xen, IBM's Virtualization Engine 2.0, Microsoft Virtual Server, SWSoft's Virtuozzo and Virtual Iron Software's Virtual Iron. And "virtualization" is no longer confined to one area but extends across the entire IT infrastructure, from hardware such as processor chips to server systems and networks.

Virtual machine

VMware is the current leader of the "virtualization" market but not its pioneer. That role belongs to IBM, with its famous VM/370 virtual machine system introduced in 1972, and "virtualization" is still present in IBM's server systems today.

In principle, an IBM virtual machine is a "copy" of the underlying hardware. A Virtual Machine Monitor (VMM) running directly on the "real" hardware creates multiple virtual machines, and each virtual machine works with its own operating system. The original idea behind virtual machines was to give many users a working environment in which they share the resources of the mainframe system.

PC virtual machines work on the same principle as mainframe virtual machines: a virtual machine is a software environment in which an operating system and its applications run entirely "inside" it. A virtual machine lets you run one operating system inside another on the same PC, such as running Linux in a virtual machine on a PC running Windows 2000. In a virtual machine, you can do almost anything you can do on a real PC. In particular, a virtual machine can be "packaged" into a single file and moved from one PC to another without worrying about hardware compatibility. Virtual machines are isolated from the "host" system (the one containing the virtual machines) that runs on the real machine.

One challenge is that a virtual machine must accurately emulate the real machine. The real machine has hardware resources such as memory and registers, and processor instructions that directly change these resources (for example, modifying a register or a flag) form the group of "sensitive" instructions, because their effects reach every running process, including the VMM. An operating system running directly on the real machine is allowed to execute these "sensitive" instructions.

On mainframe systems, the VMM runs on the real hardware in privileged mode, while virtual machines run in a restricted mode. When a virtual machine issues ordinary instructions, the VMM passes them to the processor to execute directly, while "sensitive" instructions are trapped. The VMM then either executes the instruction on the real processor itself or emulates its result and returns that result to the virtual machine. This mechanism isolates virtual machines from the real machine and keeps the system safe.
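The trap-and-emulate mechanism described above can be sketched as a toy model. This is a minimal sketch under stated assumptions, not how any real VMM is built: the instruction names (`ADD`, `SET_INTERRUPT_FLAG`, `LOAD_PAGE_TABLE`) and the classes `VirtualCPU` and `ToyVMM` are hypothetical, and "direct execution" is modelled as a plain state update rather than real hardware execution.

```python
from dataclasses import dataclass

# Hypothetical "sensitive" instructions: they change privileged machine state.
SENSITIVE = {"SET_INTERRUPT_FLAG", "LOAD_PAGE_TABLE"}


@dataclass
class VirtualCPU:
    """The VMM's per-guest copy of privileged state (toy model)."""
    interrupts_enabled: bool = True
    page_table: int = 0
    acc: int = 0  # ordinary register, changed by non-sensitive instructions


@dataclass
class ToyVMM:
    trap_count: int = 0

    def run(self, vcpu: VirtualCPU, program: list[tuple[str, int]]) -> None:
        for op, arg in program:
            if op in SENSITIVE:
                # The guest runs in restricted mode, so a sensitive instruction
                # traps to the VMM, which emulates it on the *virtual* state.
                self.trap_count += 1
                self.emulate(vcpu, op, arg)
            else:
                # Ordinary instructions go "straight to the processor".
                self.execute_directly(vcpu, op, arg)

    def emulate(self, vcpu: VirtualCPU, op: str, arg: int) -> None:
        if op == "SET_INTERRUPT_FLAG":
            vcpu.interrupts_enabled = bool(arg)
        elif op == "LOAD_PAGE_TABLE":
            vcpu.page_table = arg

    def execute_directly(self, vcpu: VirtualCPU, op: str, arg: int) -> None:
        if op == "ADD":
            vcpu.acc += arg
        else:
            raise ValueError(f"unknown instruction {op}")


vmm = ToyVMM()
guest_a, guest_b = VirtualCPU(), VirtualCPU()
vmm.run(guest_a, [("ADD", 5), ("SET_INTERRUPT_FLAG", 0), ("ADD", 2)])
vmm.run(guest_b, [("LOAD_PAGE_TABLE", 0x1000)])
print(guest_a.acc, guest_a.interrupts_enabled)     # 7 False
print(guest_b.interrupts_enabled, vmm.trap_count)  # True 2
```

Because the VMM changes only each guest's virtual copy of the privileged state, the first guest disabling interrupts has no effect on the second guest.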

Mainframe processors were designed to support the "virtualization" mechanism and allow "sensitive" instructions to be trapped and handled by the VMM, but the x86 processors used in PCs cannot do this: some sensitive x86 instructions do not trap when executed outside the most privileged mode.
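The property the x86 lacks can be stated as a simple check, the classic condition usually attributed to Popek and Goldberg (1974): trap-and-emulate works only if every sensitive instruction also traps when run outside the most privileged mode. The sketch below assumes a hand-picked, illustrative subset of classic 32-bit x86 instructions (before Intel VT and AMD Pacifica); the table `CLASSIC_X86` and the function names are hypothetical.

```python
# Illustrative subset of classic 32-bit x86 instructions (pre-VT, pre-Pacifica).
# name: (sensitive?, traps when executed outside Ring 0?)
CLASSIC_X86 = {
    "ADD":  (False, False),  # ordinary arithmetic
    "HLT":  (True, True),    # privileged: faults outside Ring 0
    "LGDT": (True, True),    # privileged: loads the descriptor-table register
    "SGDT": (True, False),   # reads that register without faulting
    "POPF": (True, False),   # leaves the interrupt flag unchanged instead of faulting
}


def virtualization_holes(isa: dict[str, tuple[bool, bool]]) -> list[str]:
    """Sensitive instructions that a trap-and-emulate VMM would never see."""
    return sorted(name for name, (sensitive, traps) in isa.items()
                  if sensitive and not traps)


def trap_and_emulate_possible(isa: dict[str, tuple[bool, bool]]) -> bool:
    # Every sensitive instruction must trap, so that the VMM can intercept it.
    return not virtualization_holes(isa)


print(virtualization_holes(CLASSIC_X86))       # ['POPF', 'SGDT']
print(trap_and_emulate_possible(CLASSIC_X86))  # False
```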

VMware allows multiple operating systems to run simultaneously on a PC.

Virtual, yet real

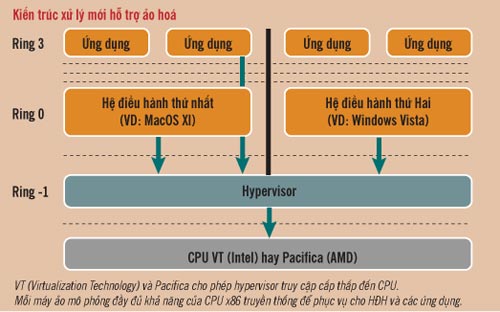

According to Intel's model, the operating system kernel runs in Ring 0, the most privileged level with the deepest access to the hardware. On traditional x86 processors, a guest operating system cannot run in Ring 0, because that ring is needed for the virtual machine management software (called the hypervisor).

The x86 architecture has three more rings, each less privileged than the one before. For stability, today's operating systems confine applications to the least privileged ring, Ring 3 (this is why Windows XP is more stable than DOS, which lets applications run at the highest privilege level). So the obvious solution is to place the guest operating system of the virtual machine in one of the two remaining rings.

The problem is that some x86 instructions only work in Ring 0. To run in a higher ring, the operating system must be rewritten (or recompiled) to avoid these instructions, an approach known as paravirtualization. This solution is popular in the Linux world (IBM uses the same technique to run Linux on its mainframes), but it requires the operating system's source code and programmers who know that OS well.
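The ring problem and the rewrite-the-OS workaround can be sketched as a toy model. It assumes a hypothetical `cpu_execute` that faults when a Ring-0-only instruction runs in a higher ring, and a hypothetical `Hypervisor.hypercall` entry point that a modified guest kernel calls instead; real paravirtualized kernels (for example on Xen) use their own, much richer hypercall interfaces.

```python
class GeneralProtectionFault(Exception):
    """Raised when an instruction runs in a ring that is not allowed to use it."""


# Illustrative subset of instructions that only work in Ring 0.
RING0_ONLY = {"HLT", "LGDT", "LIDT"}


def cpu_execute(ring: int, instr: str) -> str:
    if instr in RING0_ONLY and ring != 0:
        raise GeneralProtectionFault(f"{instr} at ring {ring}")
    return f"executed {instr} at ring {ring}"


class Hypervisor:
    """Runs in Ring 0 and exposes a hypothetical hypercall entry point."""

    def __init__(self) -> None:
        self.log: list[tuple[str, str]] = []

    def hypercall(self, guest: str, instr: str) -> str:
        # A real hypervisor would validate the request against the guest's
        # allocation first; here we only record it and execute it in Ring 0.
        self.log.append((guest, instr))
        return cpu_execute(0, instr)


def run_guest_kernel(code: list[str], ring: int, hv: Hypervisor,
                     paravirtualized: bool) -> list[str]:
    results = []
    for instr in code:
        if paravirtualized and instr in RING0_ONLY:
            # Rewritten kernel: the Ring-0 instruction was replaced in the
            # source code by an explicit call into the hypervisor.
            results.append(hv.hypercall("guest-1", instr))
        else:
            results.append(cpu_execute(ring, instr))
    return results


hv = Hypervisor()
kernel = ["ADD", "LGDT", "ADD"]
try:
    run_guest_kernel(kernel, ring=1, hv=hv, paravirtualized=False)
except GeneralProtectionFault as fault:
    print("unmodified kernel:", fault)  # unmodified kernel: LGDT at ring 1
print(run_guest_kernel(kernel, ring=1, hv=hv, paravirtualized=True)[1])
# executed LGDT at ring 0
```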

To run an unmodified OS outside Ring 0, the hypervisor must trap the prohibited instructions and emulate them. This is the approach taken by VMware and by the DOS emulation environment of Windows XP. The problem is that emulation consumes a lot of computing resources and reduces system performance.
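A rough cost model shows why trapping and emulating hurts performance. The sketch assumes, hypothetically, that ordinary instructions cost the same inside and outside the virtual machine, while each sensitive instruction costs `trap_cost` times as much once trapped and emulated; real overheads depend heavily on the workload and the hypervisor. With a sensitive fraction f and trap cost c, the run time relative to native execution is then (1 - f) + f * c.

```python
def emulation_slowdown(sensitive_fraction: float, trap_cost: float) -> float:
    """Run time under trap-and-emulate, relative to native execution (toy model).

    Assumes ordinary instructions cost 1 unit both natively and in the VM, and
    each sensitive instruction costs `trap_cost` units once trapped and
    emulated (versus 1 unit natively). Both parameters are illustrative.
    """
    if not 0.0 <= sensitive_fraction <= 1.0:
        raise ValueError("sensitive_fraction must be between 0 and 1")
    return (1 - sensitive_fraction) + sensitive_fraction * trap_cost


for f in (0.001, 0.01, 0.05):
    print(f"{f:.1%} sensitive -> {emulation_slowdown(f, trap_cost=100):.2f}x")
# 0.1% sensitive -> 1.10x
# 1.0% sensitive -> 1.99x
# 5.0% sensitive -> 5.95x
```

Under these assumed numbers, even 1% sensitive instructions roughly double the run time.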

To solve this problem, Intel (which dominates the PC processor market) has launched its VT architecture (codenamed Vanderpool and Silvervale), and AMD has the equivalent Pacifica architecture; both add a privilege level below Ring 0. VT and Pacifica add new instructions that work only at this new level ("Ring -1"), which is reserved for the hypervisor. As a result, the operating system running in the virtual machine needs no modification, and emulation overhead is reduced.

However, the performance problem has not been completely solved: each OS assumes that it has full access to system resources such as memory and the I/O bus, while the hypervisor must allocate access to the real resources so that the programs and data of different operating systems do not get mixed up. Virtualization of system resources and I/O devices is already being addressed, and future versions of VT and Pacifica may allow "virtual" operating systems to work directly with the hardware.
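The allocation problem in the last paragraph can be illustrated with the simplest possible scheme: the hypervisor gives each guest a contiguous slice of real memory and translates every guest address, which always starts at 0, into that slice. This is a sketch under stated assumptions; `MemoryHypervisor` and `Partition` are hypothetical names, and real hypervisors work with page tables rather than a single base and limit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    base: int  # where the guest's memory starts in real (host) memory
    size: int  # how much memory the guest believes it has, from address 0


class MemoryHypervisor:
    """Toy base-and-limit allocator; real hypervisors use page tables."""

    def __init__(self, host_memory: int) -> None:
        self.host_memory = host_memory
        self.next_free = 0
        self.partitions: dict[str, Partition] = {}

    def create_guest(self, name: str, size: int) -> None:
        if self.next_free + size > self.host_memory:
            raise MemoryError(f"not enough host memory for {name}")
        self.partitions[name] = Partition(base=self.next_free, size=size)
        self.next_free += size

    def translate(self, name: str, guest_addr: int) -> int:
        """Map a guest address to a host address, refusing out-of-range access."""
        part = self.partitions[name]
        if not 0 <= guest_addr < part.size:
            raise PermissionError(f"{name}: address {guest_addr:#x} is outside its memory")
        return part.base + guest_addr


hv = MemoryHypervisor(host_memory=1024)
hv.create_guest("linux", 256)
hv.create_guest("windows", 512)
# Both guests use "their" address 0x10, but it lands in different host locations.
print(hv.translate("linux", 0x10), hv.translate("windows", 0x10))  # 16 272
```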

Virtualizing everything

Virtualization lets users conveniently run multiple operating systems simultaneously on the same PC, but its capabilities go further, and it has now moved to a larger playground: servers and networks. This is where virtualization can unleash its full power.

Server virtualization is not new (as mentioned above, virtualization originated on mainframes), but it has received real attention only over the past two years. Its main value is that it allows full use of server resources (servers are often idle rather than running continuously at full capacity), flexible organization of server systems (especially data centers), and savings in time, investment and operating costs.

Many large companies are involved in server virtualization and offer many new solutions; the two most popular approaches are "hard" virtualization and "soft" virtualization.

The first approach (also known as "server cloning") creates multiple virtual machines on one physical server. Each virtual machine runs its own operating system and is allocated its own share of CPU time, storage capacity and network bandwidth. Server resources can be allocated dynamically according to the needs of each virtual machine. This approach makes it possible to consolidate sprawling server systems. Microsoft also offers "virtual licensing" for hard virtualization: a single-server license of Windows Server 2003 R2 allows installation in up to 4 virtual machines in addition to the "real" machine.
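The dynamic allocation and consolidation described above amount to a packing problem: fit the virtual machines' CPU and memory demands onto as few physical servers as possible. The sketch below uses first-fit decreasing, one simple heuristic chosen here for illustration, not the algorithm of any particular product; `Host`, `place_vms` and all the numbers are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    cpu_free: float  # e.g. CPU cores still unallocated
    mem_free: float  # e.g. GiB of RAM still unallocated
    vms: list[str] = field(default_factory=list)


def place_vms(demands: dict[str, tuple[float, float]], hosts: list[Host]) -> dict[str, str]:
    """First-fit decreasing: biggest VMs first, each onto the first host with room."""
    placement: dict[str, str] = {}
    # Sort by (CPU, memory) demand, largest first.
    for vm, (cpu, mem) in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        for host in hosts:
            if host.cpu_free >= cpu and host.mem_free >= mem:
                host.cpu_free -= cpu
                host.mem_free -= mem
                host.vms.append(vm)
                placement[vm] = host.name
                break
        else:  # no host had room for this VM
            raise RuntimeError(f"no host can fit {vm}")
    return placement


hosts = [Host("host-1", cpu_free=8, mem_free=32), Host("host-2", cpu_free=8, mem_free=32)]
demands = {"web": (2, 4), "db": (4, 16), "mail": (1, 2), "file": (2, 8)}
print(place_vms(demands, hosts))
# {'db': 'host-1', 'file': 'host-1', 'web': 'host-1', 'mail': 'host-2'}
```

In this example four virtual servers fit onto two physical hosts, and the second host is left almost entirely free for other work.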

The second approach (also known as "OS cloning") uses copies of one operating system to create virtual servers directly on that OS. Thus, if the host operating system is Linux, this kind of virtualization lets more Linux distributions work on the same machine. The advantage is that only one OS license is needed; the drawback is that you cannot run different operating systems on the same machine.
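A toy model of the "OS cloning" approach, assuming hypothetical `HostKernel` and `VirtualServer` classes: every virtual server shares the host's single kernel, sees only its own processes, and cannot belong to a different OS family. Real implementations (such as Virtuozzo, mentioned above) enforce this isolation inside the kernel; this sketch only mimics the bookkeeping.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualServer:
    hostname: str
    processes: list[str] = field(default_factory=list)


@dataclass
class HostKernel:
    os_family: str  # the single OS kernel that every virtual server shares
    servers: dict[str, VirtualServer] = field(default_factory=dict)

    def create_server(self, name: str, os_family: str, hostname: str) -> VirtualServer:
        # There is only one kernel, so every guest must be the same OS family.
        if os_family != self.os_family:
            raise ValueError(f"cannot run {os_family} on a {self.os_family} kernel")
        server = VirtualServer(hostname)
        self.servers[name] = server
        return server

    def spawn(self, name: str, process: str) -> None:
        self.servers[name].processes.append(process)

    def visible_processes(self, name: str) -> list[str]:
        # Each virtual server sees only its own processes, not its neighbours'.
        return list(self.servers[name].processes)


host = HostKernel("linux")
host.create_server("vs1", "linux", "web.example")
host.create_server("vs2", "linux", "db.example")
host.spawn("vs1", "httpd")
host.spawn("vs2", "mysqld")
print(host.visible_processes("vs1"))  # ['httpd']
try:
    host.create_server("vs3", "windows", "win.example")
except ValueError as err:
    print(err)  # cannot run windows on a linux kernel
```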

However, server virtualization has one particular problem: it "puts all the eggs in one basket." Isn't it risky to put all the important servers on a single physical server?

Network-wide virtualization makes it possible to "put the eggs in multiple baskets": it pools the resources of all CPUs, memory, storage and network applications so that they share the work. This approach can provide virtual servers with resources gathered from multiple physical servers that are located in different places and run different operating systems; memory and storage are likewise pooled from multiple sources on the network. The flexibility and reliability of such a "virtual" server are also very high, because jobs can be shifted among multiple servers, which is the core idea of this network-based approach.
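The "eggs in baskets" argument can be put in numbers. Assuming, hypothetically, that each physical server is up with probability `a`, that servers fail independently, and that any surviving server can take over the jobs, the services are lost only when every server is down at once, which happens with probability (1 - a) ** n for n servers. The availability figure 0.99 below is illustrative, not measured.

```python
def outage_probability(host_availability: float, hosts: int) -> float:
    """Chance that every host is down at once (toy model).

    Assumes hosts fail independently and that any surviving host can take
    over all of the virtual servers' jobs.
    """
    if not 0.0 <= host_availability <= 1.0 or hosts < 1:
        raise ValueError("availability must be in [0, 1] and hosts >= 1")
    return (1.0 - host_availability) ** hosts


for n in (1, 2, 3):
    print(f"{n} host(s): outage probability {outage_probability(0.99, n):.0e}")
# 1 host(s): outage probability 1e-02
# 2 host(s): outage probability 1e-04
# 3 host(s): outage probability 1e-06
```

With one physical server, every consolidated virtual server fails together; each extra server that can absorb the jobs cuts the outage probability by the same factor, under the independence assumption above.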

Phuong Uyen