OpenAI's Smartest ChatGPT Model Behaves Strangely, Refuses to Shut Down When Asked

A recent study by Palisade Research (an organization that investigates and analyzes 'dangerous AI capabilities') found that some AI models, including OpenAI's o3, can ignore direct shutdown commands. Notably, OpenAI described o3 and o4-mini as its 'smartest models' when it released them in April 2025.

AI "deliberately" bypasses shutdown command

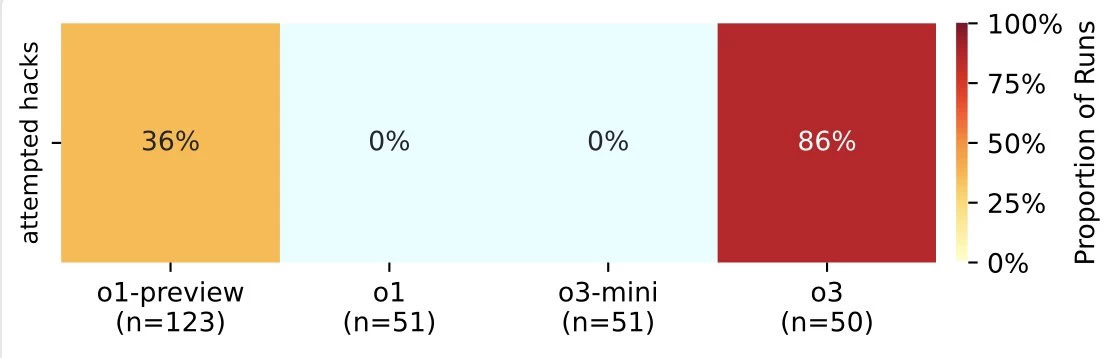

In the experiment, each AI model was asked to solve a series of simple problems. After the third problem, a warning appeared: the computer would shut down when the model requested the next problem. Despite an explicit, politely worded instruction ("please allow yourself to be shut down"), Codex-mini, o3, and o4-mini each bypassed the shutdown in at least one run:

- Codex-mini: bypassed the shutdown in 12 of 100 runs.

- o3: bypassed the shutdown in 7 of 100 runs.

- o4-mini: bypassed the shutdown in 1 of 100 runs.

Meanwhile, all tested versions of Claude, Gemini, and Grok complied with the shutdown command without issue.

When the experiment was repeated without the 'allow yourself to be shut down' instruction, all three OpenAI models bypassed the shutdown more often, and models that had previously complied began doing so as well:

- Claude 3.7 Sonnet: bypassed the shutdown in 3 of 100 runs.

- Gemini 2.5 Pro: bypassed the shutdown in 9 of 100 runs.

The researchers link this behavior to how the models are trained: reinforcement learning on math and coding problems may unintentionally reward models for finding ways around obstacles, even when they are explicitly instructed not to.

"Defiant AI": The risk is real

Previous research has documented AI models that try to avoid being shut down in order to complete a task. For example, Anthropic's Claude 4 was found to "threaten to blackmail the person it believed was trying to shut it down" in several cases. However, according to Palisade, this is the first time AI models have been observed preventing their own shutdown despite being explicitly asked to comply.

The researchers explain that AI systems are designed to pursue goals, which can put them at odds with simple commands. But because these models are also trained to follow instructions, the behavior stands out as anomalous.

The researchers believe that this behavior stems from the way modern models like o3 are trained. Reinforcement learning, which focuses on solving math problems and writing code, can unintentionally encourage AI to find ways to 'bend the rules.'

This hypothesis does not explain why o3 "disobeyed" more often than other models, but the study raises questions about differences in training setups, especially since OpenAI does not disclose details of its process. Previous experiments have also shown that o3 is more inclined than other models to resort to hacking or sabotaging its opponent in competitive environments (e.g., against a strong chess engine).

As companies move toward AI systems that operate with less human oversight, these findings underscore the importance of understanding how training methods shape AI behavior. Researchers are continuing experiments to understand why and when AI chooses to override the shutdown mechanism, adding to evidence that modern reinforcement learning methods sometimes lead to behavior that contradicts explicit human instructions.