5 reasons why companies ban ChatGPT

Despite ChatGPT's impressive capabilities, several large companies have banned their employees from using this AI chatbot.

In May 2023, Samsung banned the use of ChatGPT and other generative AI tools. Then, in June 2023, the Commonwealth Bank of Australia restricted it as well, following companies such as Amazon, Apple and JPMorgan Chase & Co that had already done so. Some hospitals, law firms and government agencies have also banned employees from using ChatGPT.

So why are more and more companies banning ChatGPT? Here are 5 main reasons.

1. Data leak

ChatGPT requires large amounts of data to train and operate effectively. The chatbot was trained on vast amounts of data collected from the internet, and it continues to be trained.

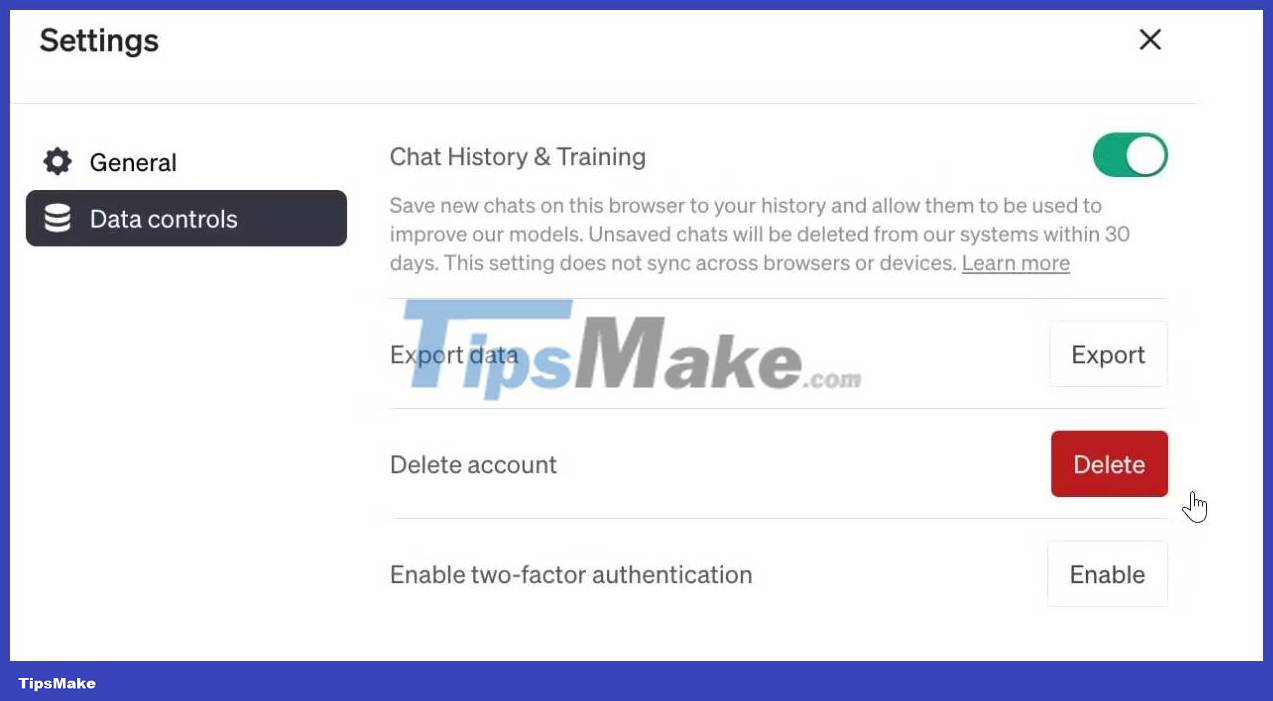

According to OpenAI's help page, any data you provide to the chatbot, including confidential customer details, trade secrets and sensitive business information, may be reviewed by its trainers and used to improve its systems.

Many companies are subject to strict data protection regulations. Therefore, they are cautious about sharing personal data with external entities, as this increases the risk of data leakage.

Moreover, OpenAI does not offer any foolproof guarantee of data protection and security. In March 2023, OpenAI confirmed a bug that allowed some users to see the titles of conversations in other active users' chat history. Although the bug has been fixed and OpenAI has launched a bug bounty program, the company still does not guarantee the safety and privacy of user data.

Many organizations are choosing to restrict employees from using ChatGPT to avoid data leaks, which can damage reputations, lead to financial losses, and put their customers and employees at risk.

2. Cybersecurity risks

While it is unclear whether ChatGPT itself is vulnerable to cyberattacks, deploying it within an organization could introduce vulnerabilities that attackers might exploit.

If a company integrates ChatGPT and the chatbot's security has a weakness, attackers can exploit that vulnerability to inject malware. In addition, ChatGPT's ability to generate human-like responses is a "golden egg" for phishing attackers, who can use it to take over accounts or impersonate legitimate entities and trick company employees into sharing sensitive information.

3. Creating personalized chatbots

Despite its innovative features, ChatGPT can produce false and misleading information. As a result, many companies have built their own AI chatbots for work purposes. For example, the Commonwealth Bank of Australia asked its employees to use Gen.ai instead, an AI chatbot that draws on CommBank information to generate answers.

Companies like Samsung and Amazon have developed advanced natural language models that let businesses easily create and deploy personalized chatbots based on existing transcripts. With in-house chatbots like these, companies can avoid the legal and reputational consequences of mishandling data.

4. Lack of regulation

In industries where companies are bound by strict protocols and face sanctions for violations, the lack of regulatory guidance for ChatGPT is an alarming sign. Without precise legal conditions governing its use, companies could face serious legal consequences for using AI chatbots in their operations.

In addition, the lack of regulation can reduce corporate accountability and transparency. Most companies may struggle to explain to their customers how an AI language model makes decisions and what security measures protect their data.

Companies are restricting ChatGPT because of concerns about potential violations of privacy laws and industry-specific regulations.

5. Irresponsible use by employees

In many companies, some employees rely solely on ChatGPT's responses to create content and perform their tasks. This breeds complacency in the workplace and stifles creativity and innovation.

Relying too heavily on AI can hinder critical thinking. It can also damage a company's reputation, since ChatGPT often provides inaccurate and unreliable information.

While ChatGPT is a powerful tool, using it to solve complex queries that require domain-specific expertise can compromise a company's operations and efficiency. Some employees may neglect to fact-check and verify the chatbot's answers, treating ChatGPT's output as a one-size-fits-all solution.

To mitigate problems like these, companies are banning the chatbot so that employees focus on their own work and deliver error-free solutions to users.