How to use Nightshade to protect your artwork from Generative AI

AI tools are revolutionary and can now hold conversations, generate human-like text, and create images based on a single word. However, the training data these AI tools use often comes from copyrighted sources, especially when it comes to text-to-image tools like DALL-E, Midjourney, and many others. .

Preventing Generative AI tools from using copyrighted images for training is difficult, and artists from all walks of life have struggled to protect their work from training datasets. create AI. But now that all changes with the introduction of Nightshade, a free AI tool designed to "poison" the output of Generative AI tools - and finally allow artists to take back a power number.

What is AI Poisoning?

AI Poisoning is the act of "poisoning" the training data set of an AI algorithm. This is similar to intentionally providing false information to the AI, leading to the trained AI malfunctioning or failing to detect the image. Tools like Nightshade change the pixels in a digital image in a way that looks completely different to the AI training on it, but is largely unchanged from the original to the human eye.

For example, if you upload an image of a "poisoned" car to the Internet, we humans will see that nothing has changed, but an AI is trying to train itself to recognize the car by watching pictures on the Internet will show something completely different.

A large enough sample size of these fake or infected images in the AI's training data can damage the AI's ability to generate accurate images from a given prompt due to the AI's understanding of the subject. damaged statue.

Some questions remain about the future of Generative AI, but protecting original digital works is a top priority. This could even break future versions of the model because the training data underpinning the model is not 100% accurate.

By using this technique, digital creators who do not allow their images to be used in AI datasets can protect them from being included in Generative AI without permission. Some platforms give creators the option to opt out of having their artwork included in AI training datasets. However, in the past, such opt-out lists have been ignored by AI model trainers and continue to be ignored without consequence.

Compared to other digital art protection tools like Glaze, Nightshade is counterintuitive. Glaze prevents AI algorithms from imitating the style of a particular image, while Nightshade changes the look of an image to AI. Both tools were built by Ben Zhao, Professor of Computer Science at the University of Chicago.

How to use Nightshade

Although the creator of this tool recommends using Nightshade in conjunction with Glaze, it can also be used as a standalone tool to protect your artwork. Using this tool is also quite easy because there are only 3 steps to protect your images with Nightshade.

However, there are a few things you should keep in mind before you get started.

- Nightshade is only available for Windows and MacOS with limited GPU support and a minimum of 4GB VRAM required. Currently, non-Nvidia and Intel Macs are not supported. This is according to the Nightshade team (GTX and RTX GPUs are found in the "CUDA-Enabled GeForce and TITAN Products" section). Alternatively, you can run Nightshade on yours, but it will result in slower performance.

- If you have a GTX 1660, 1650, or 1550, a bug in the PyTorch library may prevent you from launching or using Nightshade properly. Team Nightshade may fix it in the future by switching from PyTorch to Tensorflow, but currently there is no workaround. The problem also extends to the Ti variants of these cards. The author launched the program by granting admin access on his Windows 11 PC and waiting a few minutes for the program to open. Things can change in your case.

- If your artwork has a lot of shapes or solid color backgrounds, you may experience some strange phenomena. This can be overcome by using a lower "poisoning" intensity.

As for protecting your images with Nightshade, here's what you need to do. Remember that this guide uses the Windows version, but the same steps apply to the macOS version.

1. Download the Windows or macOS version from the Nightshade download page.

2. Nightshade downloads as an archive folder without installation. Once the download is complete, extract the ZIP folder and double-click Nightshabde.exe to run the program.

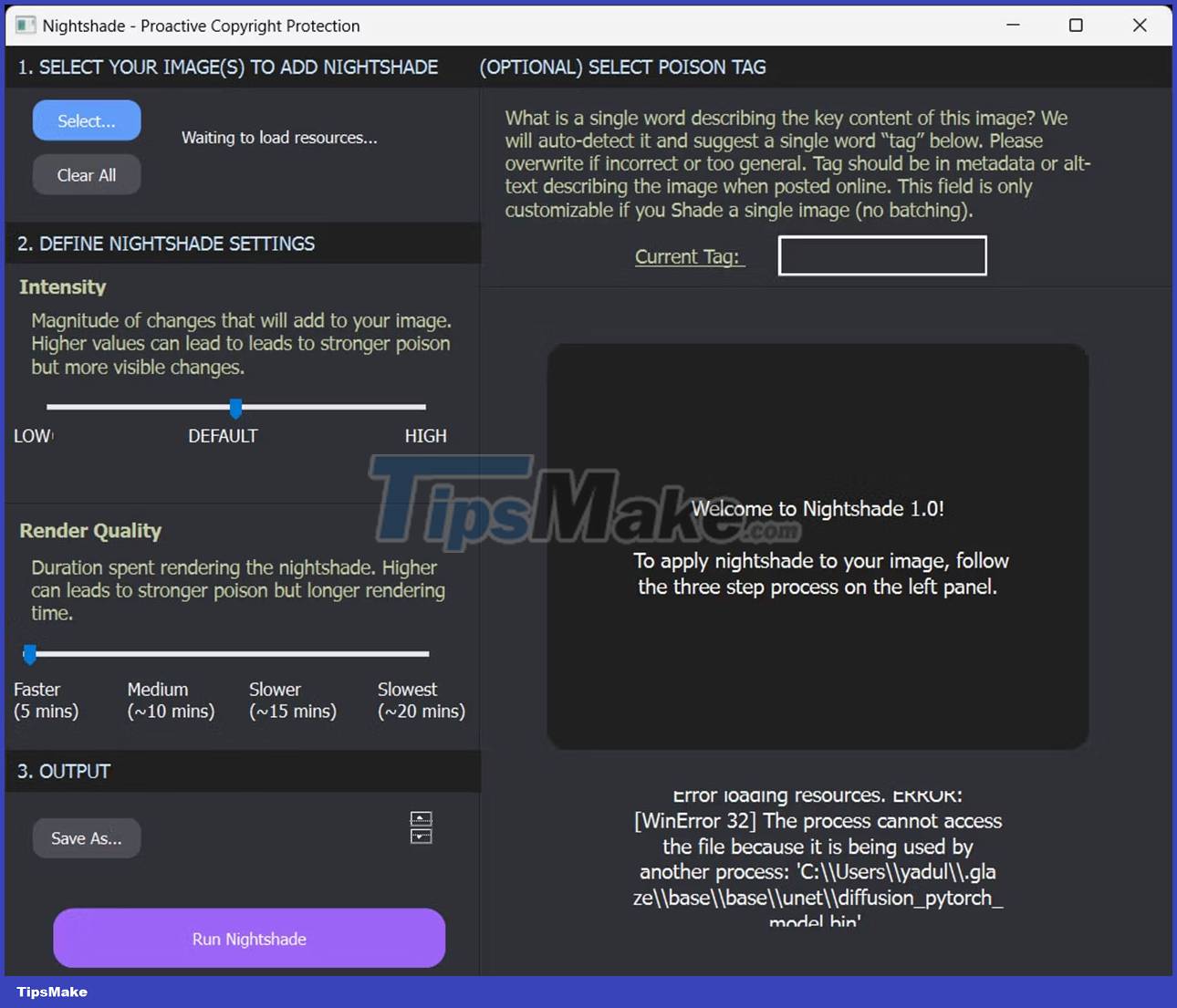

3. Select the images you want to protect by clicking the Select button at the top left. You can also select multiple images at once for batch processing.

4. Adjust the Intensity and Render Quality nodes to your preferences. Higher values add stronger contamination but can also cause artifacts in the output image.

5. Next, click the Save As button in the Output section to select the destination for the output file.

6. Click the Run Nightshade button at the bottom to run the program and poison your images.

Optional : Additionally, you can also choose a poison tag. Nightshade will automatically detect and suggest a single word tag if you don't, but you can change it if it's inaccurate or too general. Remember that this setting is only available when you process an image in Nightshade.

If all goes well, you'll end up with an image that looks exactly like the original to the human eye but is completely transformed by the AI algorithm, which helps protect your artwork from Generative AI.

You should read it

- ★ How to use Photoshop's Generative Fill to change clothes for people in photos

- ★ Turn photos into works of art using Generative Fill in Photoshop

- ★ 10 ways to use Generative Fill to improve photos in Photoshop

- ★ How to Choose Artwork for Your Bedroom

- ★ Photoshop beta 25.0 supports creating AI images in Vietnamese