How is AI impacting mobile photography?

If you are wondering what the future of mobile phones will look like and where the next big improvements will come from, the main answer is AI. You have surely come across marketing labels such as 'AI camera' or 'camera with artificial intelligence'. Set the marketing hype aside, though, and AI has genuinely made incredible progress in photography over the past few years, on mobile devices in particular. AI has only been applied to photography for a short time, and it is likely to do many more interesting things in the future. Let's look at how AI has affected, is affecting and will affect photography.

AI and outstanding benefits

As noted, there are still plenty of gimmicks surrounding this technology. Even so, the recent progress in smartphone photography is remarkable, and it has taken place primarily at the level of software and algorithms rather than sensors or lenses, largely thanks to AI. So what role does AI play in photography? In short, it makes the camera smarter: a previously 'dumb' camera can now capture a scene and also analyze what it is looking at.

Google Photos gave a clear demonstration of how powerful the combination of AI and photography could be when the app launched in 2015. The American giant had already used machine learning to classify images on Google+ for years, but the launch of an app with AI built in made the benefits far more visible. Most strikingly, libraries of thousands of untagged images were analyzed, sorted and turned into searchable databases extremely quickly. As a simple example, suppose you have a gallery containing hundreds of photos of many different cats. Sorting them by hand would be tedious, but with AI the photos are analyzed and classified automatically, with a very low error rate.

Google developed this idea after acquiring DNNresearch in 2013, setting up a deep neural network trained to analyze and classify labeled data. This approach is called supervised learning. It involves training the system on millions of different images so that it can look for pixel-level visual clues that identify the category a photo belongs to. Over time, the algorithm becomes better at recognizing objects in a photo because it has 'absorbed' the patterns in the photographs it was trained on. For example, it can recognize a panda from the shape and color of its coat, or learn how the features in a picture tend to relate to one another in order to determine the species. With more training, people can search for more abstract terms, which may share no common visual indicators but are still immediately clear to a human.
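To make the idea of supervised learning concrete, here is a minimal sketch in Python. It is not a deep neural network and not Google's method: it is a much simpler nearest-centroid classifier, used here only as a stand-in to show the "train on labeled examples, then predict" pattern. The two features (fraction of dark pixels, fraction of white pixels) and all the numbers are hypothetical.

```python
from collections import defaultdict
from math import dist


def train(examples):
    """Learn one average feature vector (centroid) per label.

    examples: list of (feature_vector, label) pairs labeled by a human.
    """
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total]
            for label, total in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the new example."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical features: (fraction of dark pixels, fraction of white pixels)
labeled = [
    ((0.45, 0.50), "panda"),
    ((0.40, 0.55), "panda"),
    ((0.10, 0.05), "tabby cat"),
    ((0.15, 0.10), "tabby cat"),
]
model = train(labeled)
print(predict(model, (0.42, 0.52)))  # an unseen photo with panda-like features
```

A real system learns its own features from raw pixels rather than relying on two hand-picked numbers, but the division of labor is the same: the expensive step is `train`, and `predict` is cheap.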

Training an algorithm like this takes a lot of time, effort and processing power. Once the data center has done its work, however, the trained algorithm can run on mobile devices, which are far less powerful, without much trouble. The hardest work has already been done, and we are the ones enjoying the fruits. When your photos are uploaded to the cloud, Google can use its AI algorithms to analyze and label each image by category and organize your entire library automatically. About a year after Google Photos was released, Apple announced a photo search feature based on a neural network trained in a similar way. As part of the company's commitment to privacy, the actual classification is performed on each device's own processor without sending the data anywhere. This process usually takes a day or two and runs in the background after the device is set up.

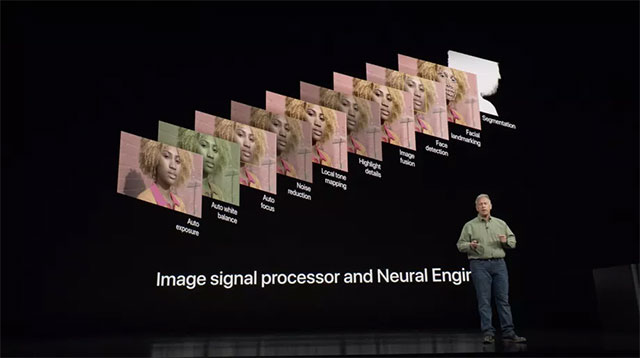

Smart photo management software is one thing, but AI and machine learning are arguably having an even bigger impact on how photos are captured in the first place. Yes, lenses can be made a little faster and sensors a little larger, but we are running into physical space constraints when cramming a complex optical system into mobile devices that increasingly emphasize being thin and light. Even so, it is no longer surprising for a phone camera to outperform many dedicated cameras (in some situations, of course), at least before post-processing. Traditional cameras have the advantage in lenses and sensors, but they cannot compete with phones on another type of hardware that is just as essential to photography: the system-on-chip, which contains the latest CPU, an image signal processor and a neural processing unit (NPU).

This is the hardware behind a new approach called computational photography, a broad term that covers everything from the simulated depth of field in a phone's portrait mode (background blur) to the algorithms that lift image quality to the remarkable level of the Google Pixel. Not every element of computational photography involves AI, but AI is undoubtedly an important component of it.

Apple uses this technology to drive portrait mode on the iPhone's dual camera system. The iPhone's image signal processor uses machine learning techniques to recognize a person with one camera, while the second camera is responsible for reconstructing depth, which helps isolate the subject identified as a person and blur the background. The ability to identify people through machine learning was not new when the feature was first introduced in 2016, since photo classification tools had been doing it for a long time. But managing it in real time, at the speed a smartphone camera requires, was a big breakthrough.
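The depth-based blur described above can be sketched in a few lines of Python. This is only an illustration of the general idea, not Apple's implementation: it works on a single row of grayscale pixels, uses a simple box blur, and the depth values, threshold and function name `portrait_blur` are all hypothetical.

```python
def portrait_blur(row, depth, subject_max_depth, radius=2):
    """Blur pixels farther away than subject_max_depth; keep nearer ones sharp.

    row:   pixel brightness values for one image row
    depth: estimated distance for each pixel (same length as row)
    """
    out = []
    for i, (value, d) in enumerate(zip(row, depth)):
        if d <= subject_max_depth:
            out.append(value)  # subject pixel: left untouched
        else:
            # Background pixel: replace with the average of its neighbors.
            # Simplification: the window may include subject pixels at the edge.
            lo, hi = max(0, i - radius), min(len(row), i + radius + 1)
            window = row[lo:hi]
            out.append(sum(window) / len(window))
    return out


# Hypothetical data: a busy background (depth 5.0) around a subject (depth 1.0)
row = [10, 200, 10, 200, 90, 95, 90, 200, 10, 200]
depth = [5.0, 5.0, 5.0, 5.0, 1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
result = portrait_blur(row, depth, subject_max_depth=2.0)
print(result)
```

The hard part on a real phone is not the blur itself but producing a reliable depth map and subject outline fast enough for a live preview, which is where the machine learning comes in.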

Clearly, Google is still the leader in this area, and the impressive results produced by all three generations of the Google Pixel are the most convincing evidence.

HDR+, the default shooting mode, uses a complex algorithm to merge multiple underexposed frames into one, improving the overall brightness and quality of the image. But AI can now do more. The advantage of machine learning is that the system keeps getting better over time as it is trained on more data. Google trained its AI on a huge dataset of labeled photos, as it did for Google Photos, and that process is still ongoing. The Pixel 2 in particular set such a dramatically higher bar for image quality that some reporters said using it for their work was even more enjoyable than using a DSLR.
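Why merging several frames helps can be shown with a small simulation. The sketch below is not Google's HDR+ algorithm, which also has to align frames and handle motion; it uses plain per-pixel averaging as a stand-in, and the scene, noise level and burst size are made-up values.

```python
import random


def merge_frames(frames):
    """Average a burst of frames pixel by pixel (all frames have equal length)."""
    n = len(frames)
    return [sum(pixels) / n for pixels in zip(*frames)]


def mean_abs_error(frame, reference):
    """Average absolute difference between a frame and the true scene."""
    return sum(abs(a - b) for a, b in zip(frame, reference)) / len(reference)


random.seed(0)
# Hypothetical dim scene: 64 pixels with low brightness values
scene = [random.uniform(10, 60) for _ in range(64)]
# A burst of 8 frames, each corrupted by independent sensor noise
burst = [[p + random.gauss(0, 10) for p in scene] for _ in range(8)]

merged = merge_frames(burst)
print("single frame error:", mean_abs_error(burst[0], scene))
print("merged frame error:", mean_abs_error(merged, scene))
```

Because the noise in each frame is independent, it partly cancels out in the average, so the merged frame ends up closer to the true scene than any single frame, and it can then be brightened with less visible noise.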

Night Sight is clear proof of the important role of software in photography

With the launch of Night Sight a few months ago, Google once again confirmed the importance of software algorithms in mobile photography. This Pixel feature combines long exposures and uses machine learning algorithms to calculate white balance and color more accurately, with impressive results. It is available on all Pixel generations, even those without optical stabilization. However, it works best on the Pixel 3, because the algorithms were designed with the latest hardware in mind. In short, Google has shown the world how software can change photography, and in mobile photography software is arguably even more important than hardware.

However, there is still room for hardware to make a difference, especially when it is supported by AI. Honor's new View 20 smartphone, along with parent company Huawei's Nova 4, was among the first to use Sony's IMX586 image sensor, which is larger than most competing sensors and, at 48 megapixels, offers a resolution never before seen on a phone. But this also means the manufacturer is cramming a lot of very small pixels into a small space, which can cause image quality problems. In tests with the View 20, however, Honor's 'AI Ultra Clarity' mode proved good at making the most of the sensor's resolution, decoding the sensor's unusual color filter to unlock extra detail. The result is very large, detailed photos taken with a mobile phone.

Typically, the image signal processor plays a very important role in a phone's camera performance, but the NPU looks set to take on a bigger role as advances in computational photography are applied more widely. Huawei was the first company to announce a system-on-chip with dedicated AI hardware, the Kirin 970, followed by Apple with the A11 Bionic. Qualcomm, the world's largest supplier of mobile processors, has also made machine learning a major focus of its products. In addition, Google has developed its own chip, the Pixel Visual Core, to support AI-related imaging tasks.

Photography is an important application, and the trend across the smartphone world is to improve imaging capabilities

AI chips will become increasingly important for keeping machine learning efficient and practical on mobile devices, because running it on the device itself places very high demands on processing power. Remember that the kind of algorithm that powers Google Photos was trained on large, powerful computers with high-performance GPUs and tensor cores before being applied to your photo library. In general, performing machine learning calculations on a mobile device in real time is still a cutting-edge capability.

Google has shown some impressive work that can reduce the processing burden of running machine learning on mobile devices, while neural engines are getting faster year by year. Although computational photography is still at an early stage, it has already delivered real benefits for mobile camera systems. In fact, of all the possibilities and applications raised by the wave of AI hype over the past few years, photography is arguably where AI is most practically useful today. The camera is an essential feature of any phone, and AI is the best tool available for improving it, at least for the moment.
