What is Apple's Neural Engine? How does it work?

Apple's Neural Engine (ANE) powers advanced on-device features such as natural language processing and image analysis without relying on the cloud or consuming excessive power.

Let's explore how ANE works and how it has evolved, which intelligent features it powers on Apple platforms, and how developers can use it in third-party applications.

What is Apple Neural Engine (ANE)?

Apple Neural Engine is the marketing name for a cluster of highly specialized compute cores optimized for running deep neural networks power-efficiently on Apple devices. It accelerates machine learning (ML) and artificial intelligence (AI) algorithms, offering large speed, memory, and power advantages over running the same workloads on the main CPU or GPU.

ANE is a big part of why the latest iPhones, iPads, Macs, and Apple TVs respond quickly and don't get hot during heavy AI and ML computations. Unfortunately, not all Apple devices have ANE: the Apple Watch, Intel-based Macs, and devices released before 2017 all lack it.

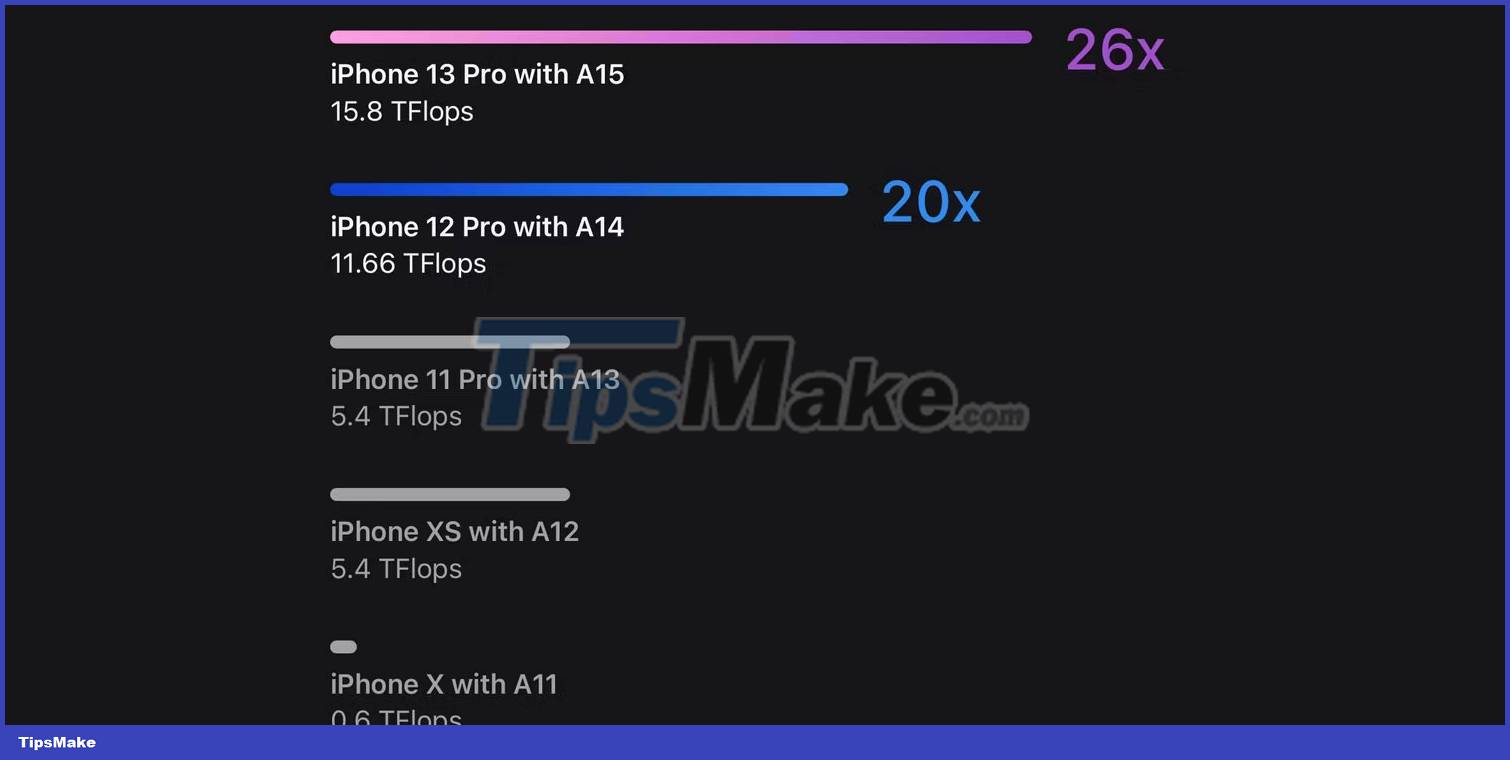

The first ANE, which debuted in Apple's A11 chip on the iPhone X in 2017, was powerful enough to support Face ID and Animoji. The ANE in the A15 Bionic chip is 26 times faster than that first version. Today, ANE enables features like offline Siri, and developers can use it to run pre-trained ML models, freeing up the CPU and GPU for other tasks.

How does Apple's Neural Engine work?

ANE provides optimized arithmetic and control logic for compute-heavy operations such as multiply-accumulate, which is used extensively in ML and AI workloads like image classification, media analysis, and machine translation.
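To make "multiply-accumulate" concrete, here is a minimal plain-Python sketch of a fully connected neural-network layer, where each output is built from one multiply-accumulate (MAC) step per input. It only illustrates the arithmetic that hardware like ANE executes in bulk; it says nothing about how ANE itself is programmed, and the function names `mac_dot` and `dense_layer` are hypothetical.

```python
def mac_dot(weights, inputs, bias=0.0):
    """Return bias + sum(w * x), computed one multiply-accumulate at a time."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    acc = bias  # running sum, like a hardware accumulator
    for w, x in zip(weights, inputs):
        acc += w * x  # one multiply-accumulate (MAC) step
    return acc


def dense_layer(weight_rows, inputs, biases):
    """Fully connected layer: one MAC-based dot product per output neuron."""
    return [mac_dot(row, inputs, b) for row, b in zip(weight_rows, biases)]


if __name__ == "__main__":
    W = [[1.0, 2.0, 3.0],
         [0.5, -1.0, 0.0]]
    x = [1.0, 1.0, 2.0]
    b = [0.0, 1.0]
    print(dense_layer(W, x, b))  # [9.0, 0.5]
```

A layer with m outputs and n inputs needs m × n MAC steps (here 2 × 3 = 6), which is why dedicated MAC hardware pays off for networks with millions of weights.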

According to an Apple patent entitled "Multi-Mode Planar Engine for Neural Processor", the ANE consists of several neural engine cores and one or more multi-mode planar circuits.

The design is optimized for parallel computing, where many operations must be performed concurrently, such as the matrix multiplications in a neural network, which can add up to trillions of operations.
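Matrix multiplication parallelizes well because every output cell is an independent chain of multiply-accumulates. The sketch below, in plain Python with hypothetical function names, submits each cell as a separate task to a thread pool. It demonstrates that independence only: CPython threads do not speed up CPU-bound arithmetic like this, whereas a dedicated accelerator such as ANE can run many such chains in hardware at once.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_cell(a, b, i, j):
    """Compute output cell C[i][j] as its own chain of multiply-accumulates."""
    acc = 0.0
    for k in range(len(b)):
        acc += a[i][k] * b[k][j]
    return acc


def parallel_matmul(a, b, workers=4):
    """Compute C = A x B, submitting every output cell as an independent task."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions of A and B do not match")
    rows, cols = len(a), len(b[0])
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # No cell depends on any other, so tasks may finish in any order.
        futures = {(i, j): pool.submit(matmul_cell, a, b, i, j)
                   for i in range(rows) for j in range(cols)}
    # Exiting the with-block waits for every task to complete.
    return [[futures[(i, j)].result() for j in range(cols)] for i in range(rows)]


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[5, 6], [7, 8]]
    print(parallel_matmul(A, B))  # [[19.0, 22.0], [43.0, 50.0]]
```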

ANE is built to speed up inference, the step in which a trained predictive model makes predictions on new data. In addition, ANE has its own cache and supports only certain data types, which helps maximize performance.
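The article does not say which data types ANE supports. As an assumption for illustration only, the sketch below uses 16-bit floating point (float16), a format commonly used for neural-network inference, to show the trade-off a narrow data type makes: each value takes half the memory of float32, at the cost of precision and range. It relies only on Python's standard `struct` module, whose `e` format code packs IEEE 754 half-precision values; the helper names are hypothetical.

```python
import struct


def to_float16(value):
    """Round a Python float to the nearest IEEE 754 half-precision (float16) value."""
    # The "e" format code packs binary16; it raises OverflowError if out of range.
    return struct.unpack("<e", struct.pack("<e", value))[0]


def bytes_per_value(fmt):
    """Storage size in bytes of one value with the given struct format code."""
    return struct.calcsize("<" + fmt)


if __name__ == "__main__":
    print(to_float16(0.1))  # 0.0999755859375 (float16 keeps about 3 significant digits)
    print(bytes_per_value("e"), bytes_per_value("f"))  # 2 4
    try:
        to_float16(70000.0)  # larger than float16's maximum finite value, 65504
    except OverflowError:
        print("70000.0 does not fit in float16")
```

Narrower types let more values fit in on-chip memory and caches, which is one plausible reason a specialized accelerator restricts the data types it accepts.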

AI features powered by ANE

Here are some ANE-powered on-device features you may already be familiar with.

- Natural language processing: Faster, more reliable speech recognition for dictation and Siri; improved natural language learning in the Translate app and across the system; instant translation of text in Photos, Camera, and other iPhone apps.

- Image recognition: Find objects in images, such as landmarks, pets, plants, books, and flowers, using the Photos app or Spotlight search; get additional information about recognized objects using Visual Look Up in places like Safari, Mail, and Messages.

- Augmented reality: People Occlusion and motion tracking in AR apps.

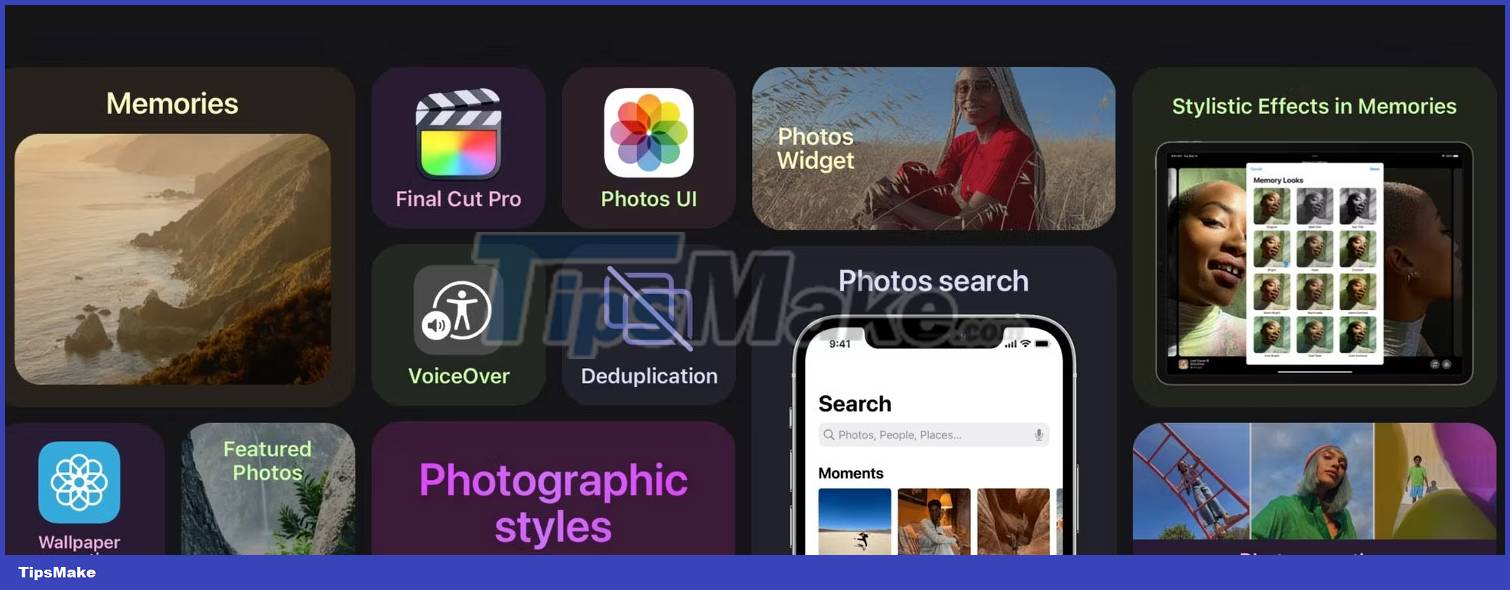

- Video analysis: Detect faces and objects in videos in apps like Final Cut Pro.

- Camera effects: Automatic cropping with Center Stage; background blur during FaceTime video calls.

- Games: Realistic effects in 3D video games.

- Live Text: Optical character recognition (OCR) in Camera and Photos, letting you easily copy handwriting or text, such as Wi-Fi passwords or addresses, from images.

- Computational photography: Deep Fusion analyzes pixels for better noise reduction, greater dynamic range, and improved auto exposure and white balance, leveraging Smart HDR where appropriate; shallow depth-of-field photography, including Night mode portraits; adjustable background blur with Depth Control.

- Other features: ANE also powers Photographic Styles in the Camera app, style effects and curated memories in Photos, personalized recommendations such as background suggestions, VoiceOver image captions, duplicate image detection in Photos, and more.

Some of the features mentioned above, such as image recognition, also work without ANE, but they run much slower and drain your device's battery faster.

How can developers use ANE in apps?

Many third-party apps use ANE to deliver features that would otherwise be infeasible. For example, the image editor Pixelmator Pro offers tools like ML Super Resolution and ML Enhance, and in djay Pro, ANE separates beats, instruments, and vocals from a recording.

However, third-party developers do not have low-level access to ANE. Instead, all ANE calls must go through Core ML, Apple's software framework for machine learning. With Core ML, developers can build, train, and run ML models directly on the device; the model then makes predictions based on new input data.

"Once a model is on a user's device, you can use Core ML to retrain or refine the model on the device, with the user's own data," according to Apple's Core ML overview.

To accelerate ML and AI algorithms, Core ML leverages not only ANE but also the CPU and GPU. This lets Core ML run a model even on devices without ANE. When ANE is present, however, Core ML can run models much faster and with less battery drain.
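Apps can also constrain which processors Core ML may use through the `computeUnits` property of `MLModelConfiguration`, whose options include `.cpuOnly`, `.cpuAndGPU`, `.cpuAndNeuralEngine` (on newer OS versions), and `.all`. Core ML's actual per-layer scheduling is internal and is not modeled here. The toy Python model below, built around a hypothetical `usable_processors` helper, only captures the fallback behavior described above: the CPU is always in the allowed set, so a model still runs on a device without ANE.

```python
from enum import Enum


class ComputeUnits(Enum):
    """Toy mirror of Core ML's MLComputeUnits option names (illustration only)."""
    CPU_ONLY = frozenset({"cpu"})
    CPU_AND_GPU = frozenset({"cpu", "gpu"})
    CPU_AND_NEURAL_ENGINE = frozenset({"cpu", "ane"})
    ALL = frozenset({"cpu", "gpu", "ane"})


def usable_processors(config, device_has_gpu=True, device_has_ane=True):
    """Hypothetical helper: processors a model may use for this config and device.

    The CPU is always present, so every option still leaves somewhere to run;
    without ANE the model simply loses ANE's speed and power advantages.
    """
    present = {"cpu"}
    if device_has_gpu:
        present.add("gpu")
    if device_has_ane:
        present.add("ane")
    return set(config.value) & present


if __name__ == "__main__":
    # A device without ANE (such as an Intel-based Mac) can still run the model.
    print(sorted(usable_processors(ComputeUnits.ALL, device_has_ane=False)))  # ['cpu', 'gpu']
    # Restricting to CPU + ANE on such a device leaves only the CPU.
    print(sorted(usable_processors(ComputeUnits.CPU_AND_NEURAL_ENGINE, device_has_ane=False)))  # ['cpu']
```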