GPT-4o API Guide: Getting Started with OpenAI's API

OpenAI's GPT-4o integrates audio, vision, and text capabilities into a single, powerful language model. This development marks a significant step toward more natural, intuitive human-computer interactions. This article will provide step-by-step instructions on how to use GPT-4o via the OpenAI API.

Although OpenAI recently released the o1 model, its most capable reasoning model, GPT-4o and GPT-4o mini are still the best choices for applications that require fast feedback, image processing, or function calling.

What is GPT-4o?

GPT-4o (the "o" stands for "omni") represents a significant step forward in AI. Unlike GPT-4, which only processes text, GPT-4o is a multimodal model that processes and generates text, audio, and image data.

By combining audio and visual data alongside text, GPT-4o breaks free from the limitations of traditional text-only models, creating more natural and intuitive interactions.

GPT-4o responds faster, is 50% cheaper than GPT-4 Turbo, and understands audio and images better than existing models.

GPT-4o Use Cases

In addition to interacting with GPT-4o through the ChatGPT interface, developers can interact with GPT-4o through the OpenAI API, allowing them to integrate GPT-4o's capabilities into their applications and systems.

The GPT-4o API opens up a wide range of potential use cases by leveraging its multimodal capabilities:

| Modality | Use cases | Description |
| --- | --- | --- |
| Text | Text Generation, Text Summarization, Data Analysis and Code Writing | Create content, write brief summaries, explain code, and support code writing. |
| Audio | Audio Transcription, Real-time Translation, Audio Creation | Convert audio to text, translate in real time, create a virtual assistant, or learn languages. |
| Vision | Image Captioning, Image Analysis & Logic, Accessibility for the Blind | Describe images, analyze visual information, provide accessibility for the visually impaired. |
| Multimodal | Multimodal Interaction, Immersive Scenarios | Combine modalities seamlessly, creating an immersive experience. |

GPT-4o API: How to Connect to OpenAI's API

Now, let's explore how to use GPT-4o via the OpenAI API.

Step 1: Create API key

Before using the GPT-4o API, we need to register for an OpenAI account and get an API key. You can create an account on the OpenAI API website.

Once we have an account, we can navigate to the API key page.

Now we can generate an API key. We need to keep this key safe as we will not be able to see it again. But we can always generate a new key if we lose it or need a new key for another project.

Step 2: Import OpenAI API into Python

To interact with the GPT-4o API programmatically, we will need to install the OpenAI Python library. We can do this by running the following command:

    pip install openai

Once installed, we can import the necessary modules into our Python script:

    from openai import OpenAI

Step 3: Make an API call

Before we can make API requests, we need to authenticate with our API key:

    # Set the API key
    client = OpenAI(api_key="your_api_key_here")

Replace "your_api_key_here" with your actual API key.
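Hard-coding the key in a script makes it easy to leak. The following is a minimal sketch, assuming the key is stored in an environment variable named OPENAI_API_KEY (the name the OpenAI Python library also looks for by default). The helper load_api_key is hypothetical and not part of the library.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in an environment variable, or fail loudly."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return key


# Usage (requires the openai package to be installed):
#   from openai import OpenAI
#   client = OpenAI(api_key=load_api_key())
```

Failing early with a clear message tends to be easier to debug than an authentication error returned by the first API request.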

Once the client is set up, we can start generating text using GPT-4o:

    MODEL = "gpt-4o"

    completion = client.chat.completions.create(
        model=MODEL,
        messages=[
            {"role": "system", "content": "You are a helpful assistant that helps me with my math homework!"},
            {"role": "user", "content": "Hello! Could you solve 20 x 5?"},
        ],
    )
    print("Assistant: " + completion.choices[0].message.content)

This code snippet uses the Chat Completions API with the GPT-4o model, taking a math question as input and generating a response.

GPT-4o API: Audio Use Cases

Audio transcription and summarization have become essential tools in many applications, from improving accessibility to enhancing productivity. With the GPT-4o API, we can efficiently handle tasks such as audio transcription and summarization.

While GPT-4o is capable of processing live audio, direct audio input is not yet available through the API. Currently, we can use a two-step process with the GPT-4o API to transcribe and then summarize the audio content.

Step 1: Transcribe audio into text

To transcribe an audio file, we need to provide the audio data to the API. Here is an example:

    # Transcribe the audio
    audio_path = "path/to/audio.mp3"
    with open(audio_path, "rb") as audio_file:
        transcription = client.audio.transcriptions.create(
            model="whisper-1",
            file=audio_file,
        )

Replace "path/to/audio.mp3" with the actual path to your audio file. This example uses the whisper-1 model for transcription.

Step 2: Summarize the audio text

    response = client.chat.completions.create(
        model=MODEL,
        messages=[
            {"role": "system", "content": """You are generating a transcript summary. Create a summary of the provided transcription. Respond in Markdown."""},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": f"The audio transcription is: {transcription.text}"}
                ],
            },
        ],
        temperature=0,
    )
    print(response.choices[0].message.content)

GPT-4o API: Vision Use Cases

Visual data analysis is important in many fields, from healthcare to security and more. With the GPT-4o API, you can seamlessly analyze images, engage in conversations about visual content, and extract valuable information from images.

Step 1: Add image data to API

To analyze an image using GPT-4o, we must first provide the image data to the API. We can do this by either encoding the image locally as a base64 string or providing a URL to an online image:

    import base64

    IMAGE_PATH = "image_path"

    # Open the image file and encode it as a base64 string
    def encode_image(image_path):
        with open(image_path, "rb") as image_file:
            return base64.b64encode(image_file.read()).decode("utf-8")

    base64_image = encode_image(IMAGE_PATH)

For an online image, the URL goes in the "url" field instead:

    "url": " "

Step 2: Analyze the image data

After processing the input image data, we can pass the image data to the API for analysis.
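Both input options (a local file encoded as base64, or an online URL) end up in the same "image_url" message part. The following is a minimal sketch of a helper that builds that part for either case. The function names image_part and user_message are hypothetical, and guessing the MIME type from the file extension (falling back to PNG) is an assumption made for illustration.

```python
import base64
import mimetypes


def image_part(source: str) -> dict:
    """Build an image_url content part from a URL or a local file path."""
    if source.startswith(("http://", "https://")):
        url = source  # online image: pass the URL through unchanged
    else:
        # Local file: guess the MIME type from the extension, default to PNG
        mime = mimetypes.guess_type(source)[0] or "image/png"
        with open(source, "rb") as image_file:
            encoded = base64.b64encode(image_file.read()).decode("utf-8")
        url = f"data:{mime};base64,{encoded}"
    return {"type": "image_url", "image_url": {"url": url}}


def user_message(question: str, image_source: str) -> dict:
    """Combine a text question and one image into a single user message."""
    return {
        "role": "user",
        "content": [{"type": "text", "text": question}, image_part(image_source)],
    }
```

The returned dictionary can be placed in the messages list of a chat completion request, next to the system message.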

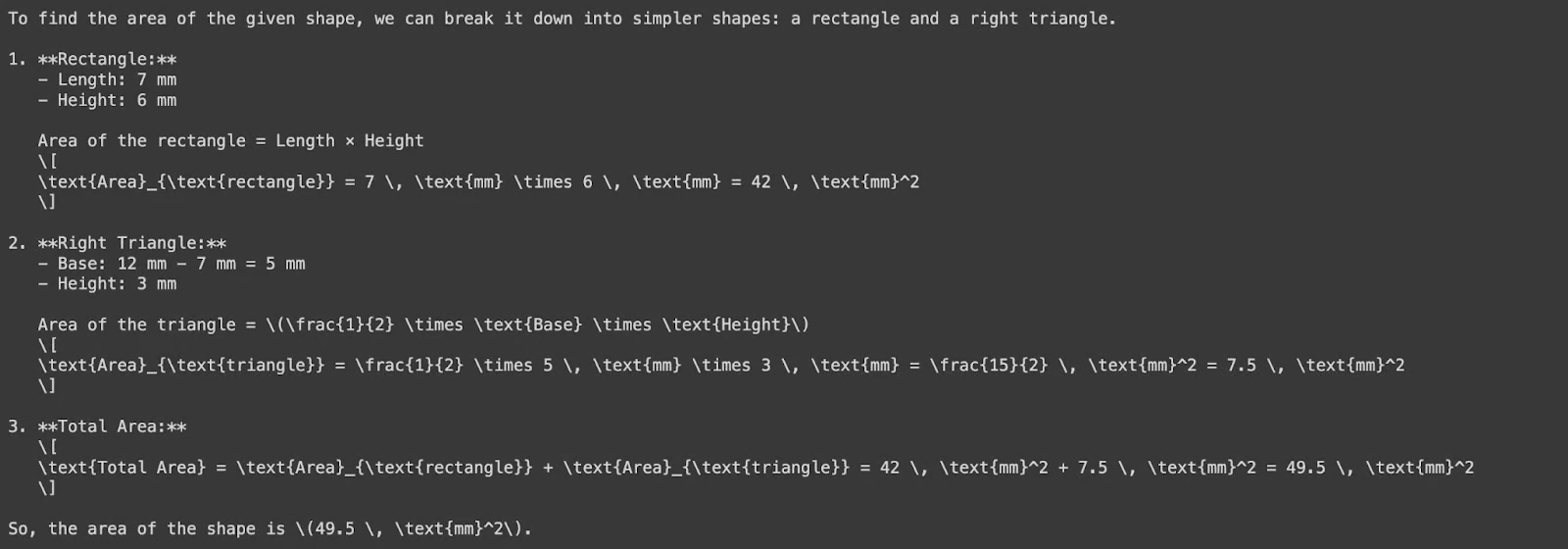

Let's try analyzing an image to determine the area of a shape. First, use the image below:

Now, we'll ask GPT-4o to ask for the area of this shape - note that we're using the base64 image input below:

    response = client.chat.completions.create(
        model=MODEL,
        messages=[
            {"role": "system", "content": "You are a helpful assistant that responds in Markdown. Help me with my math homework!"},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "What's the area of the shape in this image?"},
                    {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{base64_image}"}},
                ],
            },
        ],
        temperature=0.0,
    )
    print(response.choices[0].message.content)

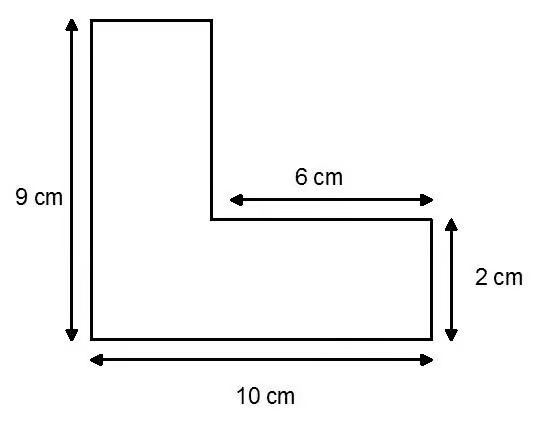

Now, let's consider this shape:

We will pass the image URL to GPT-4o to find the area of the shape:

    response = client.chat.completions.create(
        model=MODEL,
        messages=[
            {"role": "system", "content": "You are a helpful assistant that responds in Markdown. Help me with my math homework!"},
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "What's the area of the shape in the image?"},
                    {"type": "image_url", "image_url": {"url": " "}},
                ],
            },
        ],
        temperature=0.0,
    )
    print(response.choices[0].message.content)

Note that GPT-4o mismeasured the width of the vertical rectangle – it should be 4cm wide, not 2cm. This discrepancy arises due to a misalignment between the measurement labels and the actual proportions of the rectangle. If anything, this once again highlights the importance of human supervision and validation.

GPT-4o API Price

OpenAI has introduced a competitive pricing structure for the GPT-4o API, making it more accessible and cost-effective than previous models.

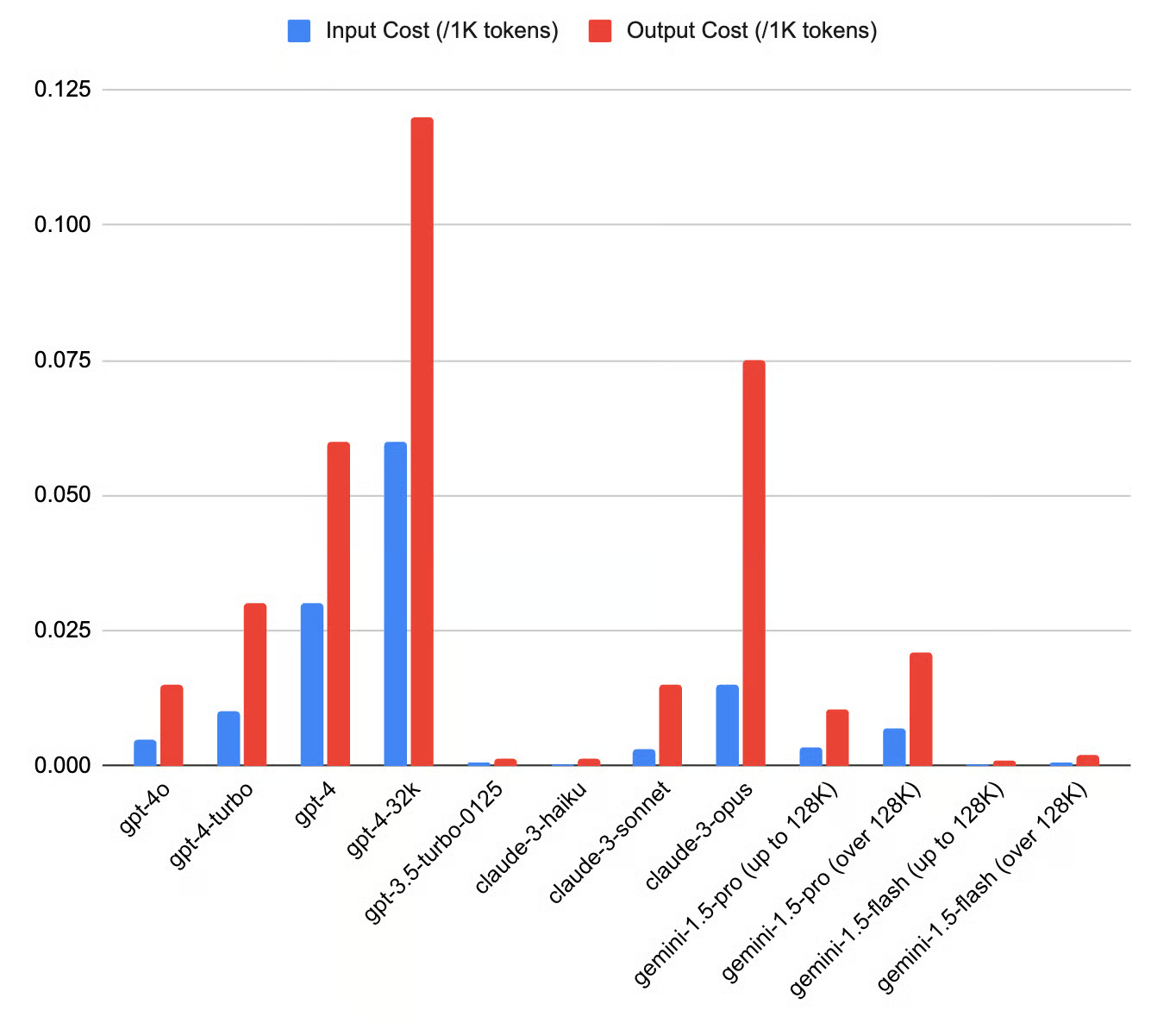

Here is a summary of the pricing along with Anthropic's Claude and Google's Gemini models (prices are in USD):

As you can see, GPT-4o is priced significantly lower than both GPT-4 Turbo and GPT-4. Its price is also more competitive than other advanced language models such as Claude Opus and Gemini 1.5 Pro.

Key Considerations with the GPT-4o API

When working with the GPT-4o API, it is important to keep a few key considerations in mind to ensure optimal performance, cost-effectiveness, and suitability for each specific use case. Here are three key factors to consider:

Pricing and cost management

The OpenAI API follows a pay-as-you-go model, where costs are based on the number of tokens processed.

Although GPT-4o is cheaper than GPT-4 Turbo, proper usage planning is important to estimate and manage costs.

To reduce costs, you can consider techniques like batch processing and prompt optimization to reduce the number of API calls and tokens processed.
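Because billing is per token, a rough cost estimate is simple arithmetic once the token counts are known (the Chat Completions response reports them as usage.prompt_tokens and usage.completion_tokens). The following is a minimal sketch. The function name estimate_cost is hypothetical, and the two prices are placeholders chosen for illustration, not current rates.

```python
def estimate_cost(prompt_tokens, completion_tokens,
                  input_price_per_1m, output_price_per_1m):
    """Estimate the USD cost of one request from token counts
    and prices quoted per one million tokens."""
    total = (prompt_tokens * input_price_per_1m
             + completion_tokens * output_price_per_1m)
    return total / 1_000_000


# Placeholder prices for illustration only; check OpenAI's pricing page.
EXAMPLE_INPUT_PRICE = 5.00    # USD per 1M input tokens (assumed)
EXAMPLE_OUTPUT_PRICE = 15.00  # USD per 1M output tokens (assumed)

cost = estimate_cost(1_200, 300, EXAMPLE_INPUT_PRICE, EXAMPLE_OUTPUT_PRICE)
print(f"${cost:.4f}")  # prints $0.0105
```

With these assumed prices, trimming a prompt from 1,200 to 600 tokens would save $0.003 per request, which is why prompt optimization adds up at volume.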

Latency and performance

While GPT-4o offers impressive performance and low latency, it is still a large language model, so processing requests can be computationally intensive and latency can still be noticeable.

We need to optimize our code and use techniques like caching and asynchronous processing to minimize latency issues.
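The following is a minimal sketch of both techniques, assuming Python 3.9+ (for asyncio.to_thread) and a blocking function ask(model, messages) that wraps the API call. The names make_cached and ask_many are hypothetical. In-memory caching like this only makes sense when identical requests should return identical answers, for example with temperature set to 0.

```python
import asyncio
import hashlib
import json


def make_cached(ask):
    """Wrap ask(model, messages) so identical requests are answered from memory."""
    cache = {}

    def cached_ask(model, messages):
        # Identical model + messages always produce the same cache key
        payload = json.dumps([model, messages], sort_keys=True)
        key = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        if key not in cache:
            cache[key] = ask(model, messages)  # only call the API on a miss
        return cache[key]

    return cached_ask


async def ask_many(ask, model, list_of_messages):
    """Run several blocking ask() calls concurrently in worker threads."""
    tasks = [asyncio.to_thread(ask, model, messages)
             for messages in list_of_messages]
    # gather returns results in the same order as the inputs
    return await asyncio.gather(*tasks)
```

Passing the API call in as a function keeps the sketch independent of any client library, so it can be tried with a stub before being wired to real requests.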

Additionally, we can explore using dedicated instances from OpenAI or fine-tuning the model for our specific use case, which could improve performance and reduce latency.

Use case customization

GPT-4o is a powerful general model with many capabilities, but we need to make sure that our specific use case is a good fit for the model's strengths.

Before relying solely on GPT-4o, we must carefully evaluate our use case and consider whether the model's capabilities match our needs.

If needed, we can fine-tune the model further or explore other models that might be better suited to our specific task.

GPT-4o's multimodal capabilities address the limitations of previous models that struggled to seamlessly integrate and process different types of data.

By leveraging the GPT-4o API, developers can build innovative solutions that seamlessly integrate text, audio, and image data.

If you want to get more hands-on with GPT-4o, you should check out this code to create an AI assistant using GPT-4o. Similarly, if you want to learn more about working with the API, you should check out these resources:

- Working with the OpenAI API

- OpenAI Cookbook

- OpenAI API Cheat Sheet

Frequently Asked Questions

What is GPT-4o and how is it different from previous models?

GPT-4o is a multimodal language model developed by OpenAI that can process and generate text, audio, and image data. Unlike previous models such as GPT-4, which only processed text, GPT-4o integrates audio and image information, allowing for more natural interactions and enhanced capabilities across multiple modalities.

How can developers access GPT-4o via the OpenAI API?

Developers can access GPT-4o via the OpenAI API by registering for an OpenAI account, obtaining an API key, and installing the OpenAI Python library.

How much does it cost to use the GPT-4o API and how does it differ from other models?

The GPT-4o API follows a pay-as-you-go model, with costs incurred based on the number of tokens processed. GPT-4o is 50% cheaper than GPT-4 Turbo, making it more affordable than previous models.

Can GPT-4o be fine-tuned for specific use cases or industries?

Yes, GPT-4o can be adapted to specific use cases or industries through fine-tuning, a form of transfer learning. By fine-tuning on domain-specific data or tasks, developers can improve the model's performance and tailor it to their own needs.

What resources are available to learn more and implement the GPT-4o API?

There are a variety of resources, including tutorials, courses, and real-world examples, to learn more about and implement the GPT-4o API. This article recommends exploring DataCamp's Working with the OpenAI API course, the OpenAI Cookbook, and DataCamp's cheat sheet for quick reference and practical implementation guidance.

When should I use GPT-4o instead of GPT-4o mini?

GPT-4o is ideal for more complex use cases that require in-depth analysis, language understanding, or longer interactions. On the other hand, GPT-4o mini is faster and more cost-effective, making it better suited for lightweight tasks or when quick responses are needed. Both models offer multimodal capabilities, but GPT-4o excels when more advanced reasoning and cross-modal interactions are required.

How does the GPT-4o API differ from the o1 API for specific use cases?

While GPT-4o is great for tasks involving multimodal data (text, audio, and images), the o1 API shines at complex reasoning and problem solving tasks, especially for science, coding, and mathematics. If you need fast responses with moderate reasoning, GPT-4o is your best bet. However, for tasks that require in-depth logic analysis and precision, such as creating complex code or solving advanced problems, the o1 API offers more powerful capabilities.