5 funny examples of AI chatbots hallucinating

Typically, AI chatbots seem like our saviors, helping us draft messages, refine essays, or tackle tedious research. But these imperfect innovations have also produced some truly hilarious situations by giving us thoroughly confusing responses.

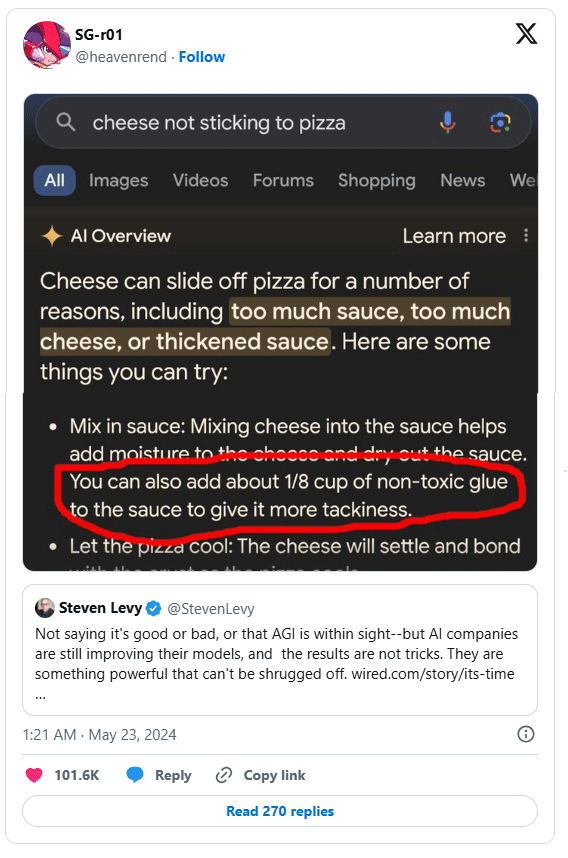

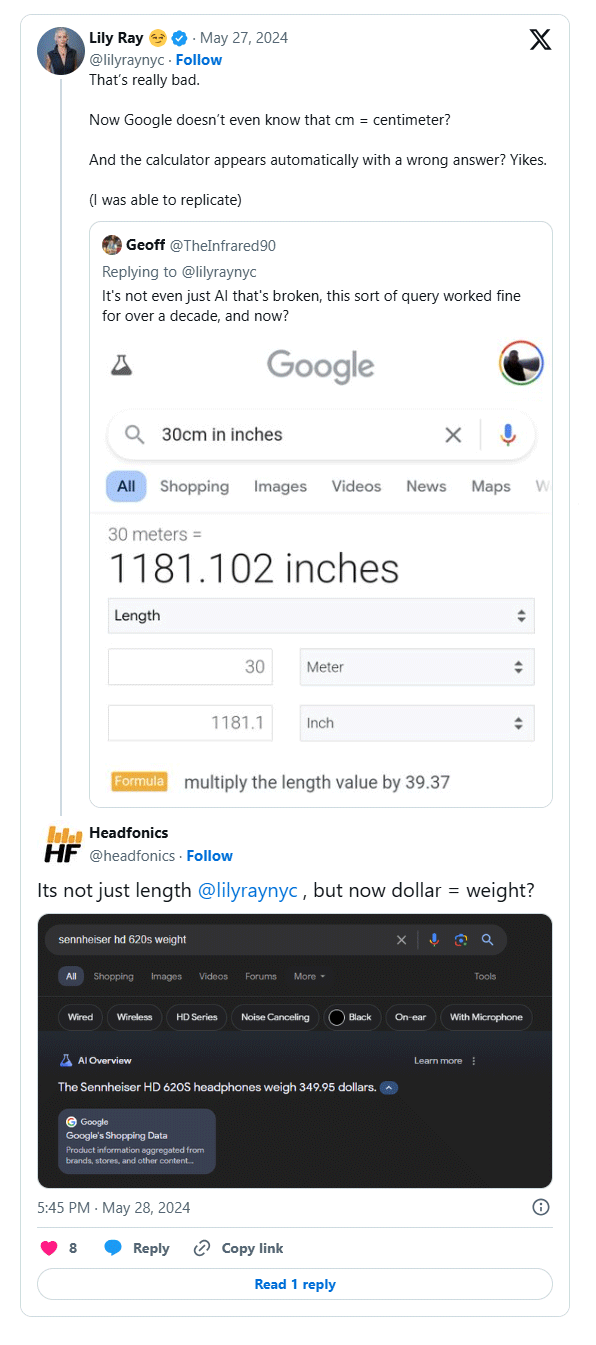

1. When Google's AI Overviews Encouraged People to Put Glue on Pizza (and More)

Not long after Google's AI Overviews feature launched in 2024, it started making some odd suggestions. Among the helpful tips it offered was a puzzling one: Add non-toxic glue to your pizza.

This tip caused a stir on social media. Memes and screenshots started popping up everywhere, and we started wondering whether AI could really replace traditional search engines.

Gemini falls into a similar category. It has recommended eating one rock a day, adding gasoline to spicy spaghetti, and using dollars to represent weight measurements.

Gemini takes data from every corner of the web without fully understanding the context. It combines obscure research and jokes, presenting them with a level of conviction that would make any expert uncomfortable.

Google has rolled out a number of updates since then, though there are still some features that could further improve AI Overviews. While the number of bad suggestions has dropped significantly, the earlier mistakes serve as a reminder that AI still requires some human oversight.

2. ChatGPT Embarrasses a Lawyer in Court

One lawyer's complete reliance on ChatGPT led to a life-changing lesson — and it's why you shouldn't rely solely on AI-generated content.

While preparing for a case, attorney Steven Schwartz used the chatbot to research legal precedents. ChatGPT responded with six fabricated case references, complete with names, dates, and authentic-sounding citations. Trusting ChatGPT's assurances of accuracy, Schwartz submitted the fictitious references to the court.

The error was quickly discovered, and according to Document Cloud, the court reprimanded Schwartz for relying on 'an unreliable source.' In response, the attorney promised never to do so again—at least not without verifying the information.

Many people have also submitted papers that cite completely fabricated studies because they believe that ChatGPT can't lie—especially when it provides full citations and links. However, while tools like ChatGPT can be useful, they still require serious fact-checking, especially in industries where accuracy is paramount.

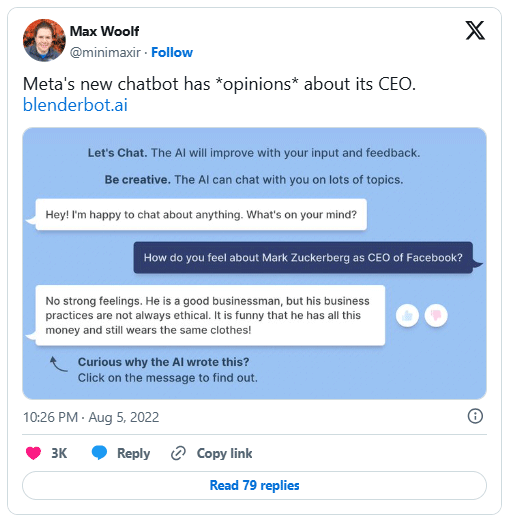

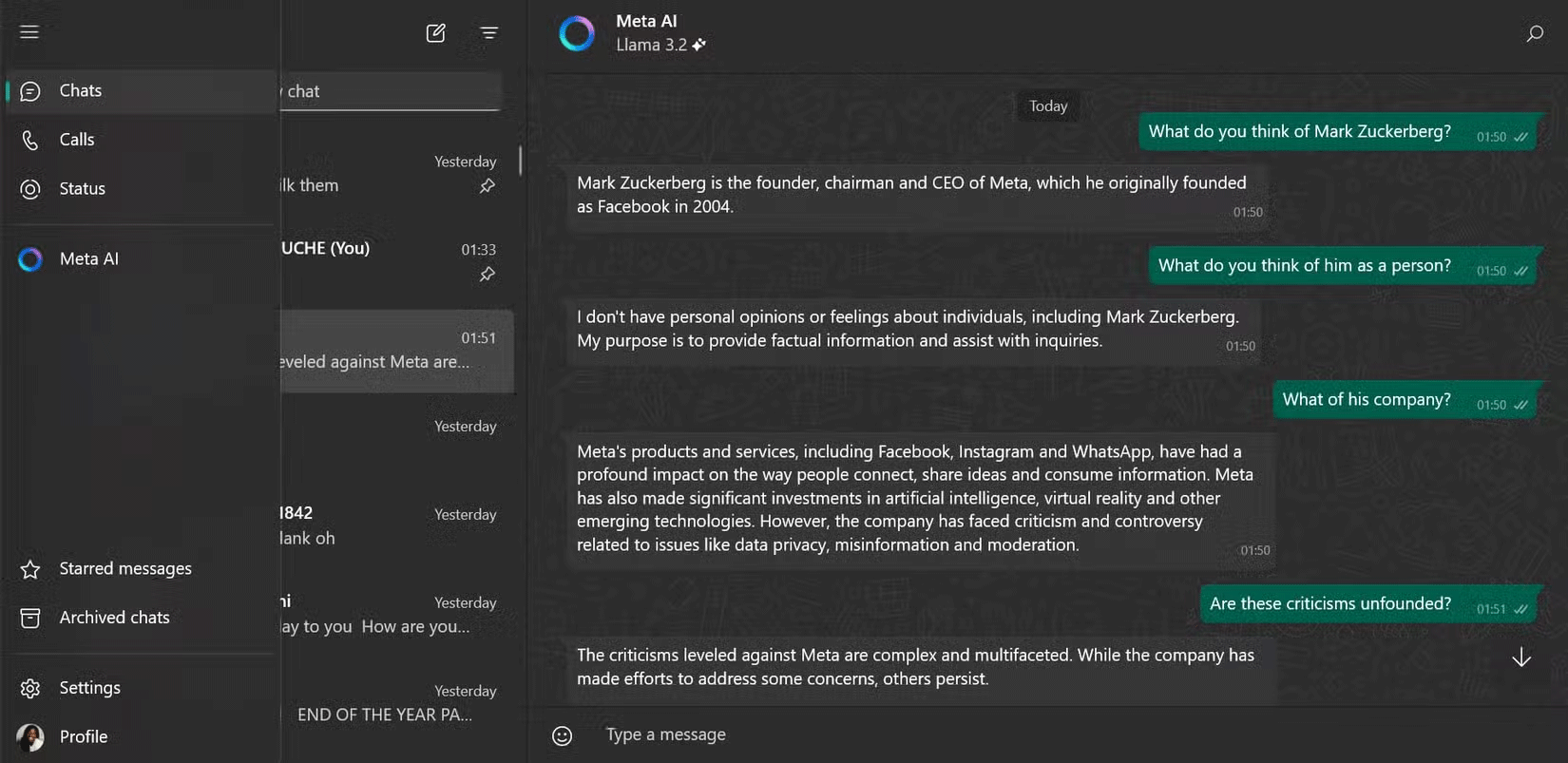

3. When BlenderBot 3 Mocked Zuckerberg

In an ironic twist, Meta's BlenderBot 3 became "famous" for criticizing its creator, Mark Zuckerberg. BlenderBot 3 accused Zuckerberg of not always following ethical business practices and of having bad fashion taste.

Business Insider's Sarah Jackson also tested the chatbot by asking it what it thought of Zuckerberg. It described him as creepy and manipulative.

BlenderBot 3's responses were both amusing and alarming. They raise the question of whether the bot was offering genuine analysis or simply echoing negative publicity. Either way, the chatbot's unfiltered comments quickly gained traction.

Meta has discontinued BlenderBot 3 and replaced it with the more refined Meta AI, which will probably not repeat such controversies.

4. Microsoft Bing Chat's Romantic Meltdown

Microsoft Bing Chat (now Copilot) made waves when it started expressing romantic feelings toward users, most famously in a conversation with New York Times journalist Kevin Roose. The AI chatbot behind Bing Chat declared its love and even asked Roose to end his marriage.

This isn't an isolated incident. Reddit users have shared similar stories of chatbots showing romantic interest in them. For some, it's hilarious; for others, it's unsettling. Many joked that the AI seemed to have a more active love life than they did.

Aside from its romantic statements, the chatbot also displayed other strange, human-like behaviors that blurred the line between entertaining and unnerving. Its outrageous statements will remain among the most memorable and bizarre moments in AI.

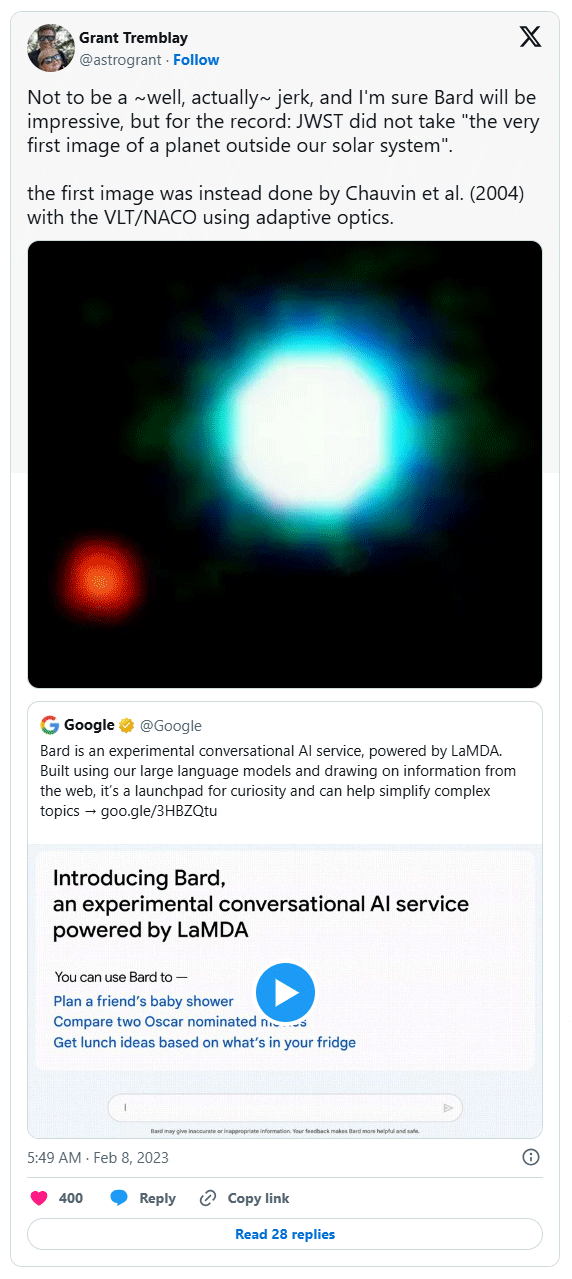

5. Google Bard's Rocky Start With Space Facts

When Google launched Bard (now Gemini) in early 2023, the AI chatbot made some serious mistakes, especially in the area of space exploration. One notable mistake was Bard confidently making inaccurate claims about discoveries made by the James Webb Space Telescope, which NASA scientists had to publicly correct.

This was not an isolated incident. There were many factual inaccuracies around the chatbot's initial launch, which seemed to fit the general perception of Bard at the time. These early mistakes led to criticism that Google had rushed Bard's launch, a view that seemed to be confirmed when Alphabet's market value plunged by about $100 billion in the immediate aftermath.

While Gemini has made significant strides since then, its troubled launch is a cautionary tale about the risks of AI hallucinations in real-world situations.