Why shouldn't we trust ChatGPT to summarize text?

There are limits to what ChatGPT knows, yet it is designed to give you what you ask for, even if the results are incorrect. This means ChatGPT can make mistakes, including some very common ones, especially when it summarizes information you aren't checking carefully.

ChatGPT may ignore or misunderstand the prompt

If you give the chatbot a lot of data to sort through, or even just a complex prompt, it may deviate from your instructions and follow its own interpretation of them.

Making too many requests at once is one common cause, and one of the mistakes to avoid. But the problem can also arise because the chatbot doesn't recognize a specific word or phrase you use.

In the following example, ChatGPT receives a lot of information about the linguistic function of emoji. The prompt is intentionally simple: it asks the chatbot to summarize the text and explain the connection between emoji and culture.

The chatbot merged both answers into a single paragraph. A follow-up prompt with more explicit instructions then asked it to dig deeper into its knowledge base.

This is why you should make sure your instructions are precise, provide context when necessary, and monitor ChatGPT's results. If you point out errors as soon as you spot them, the chatbot can produce more accurate results.

ChatGPT may ignore the information you provide

ChatGPT is smart, but don't cram in details about a particular topic without clearly stating which of them matter.

The problem here is that without proper guidance, the algorithm will cherry-pick information it thinks is relevant to the report or list you need.

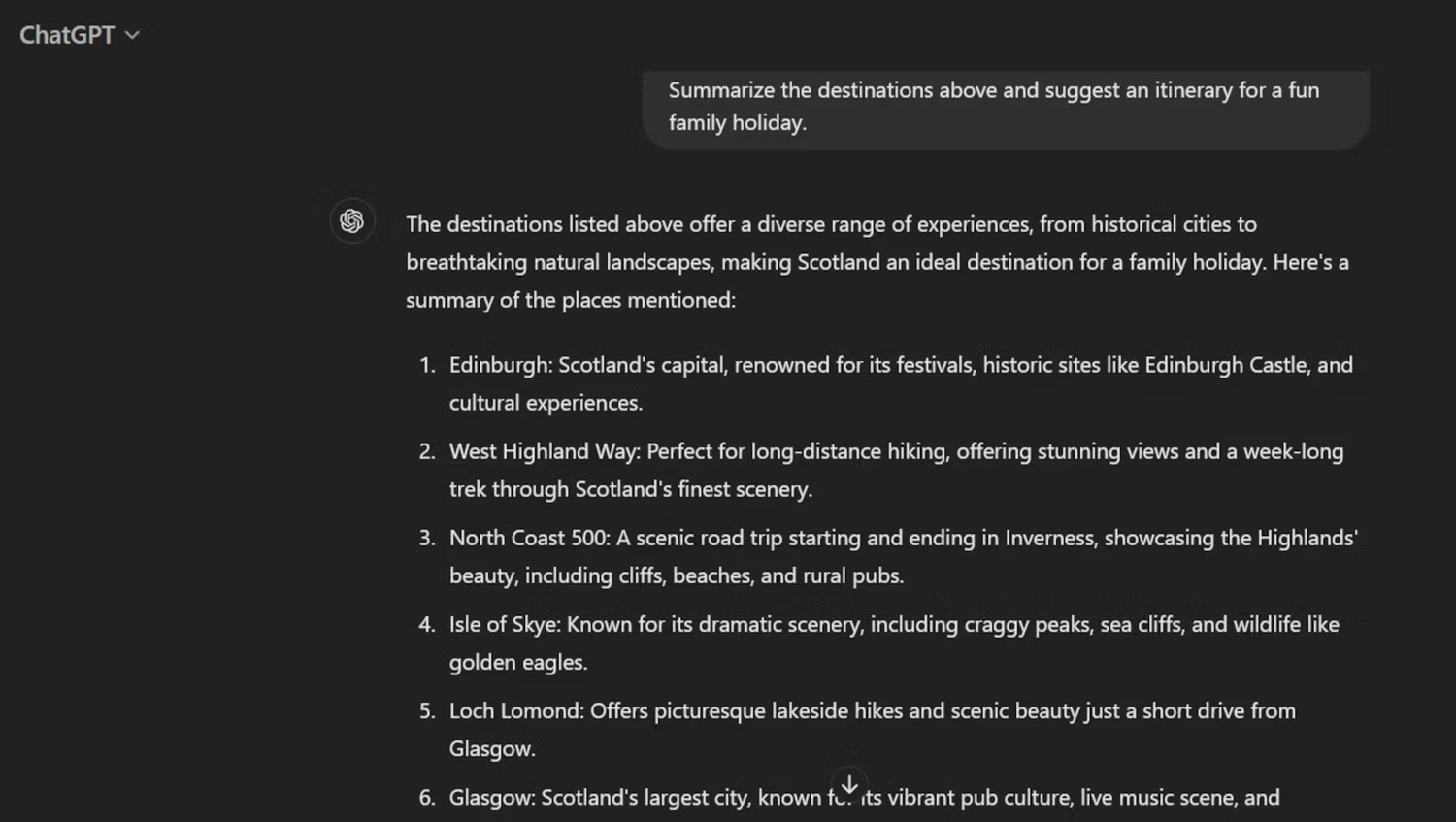

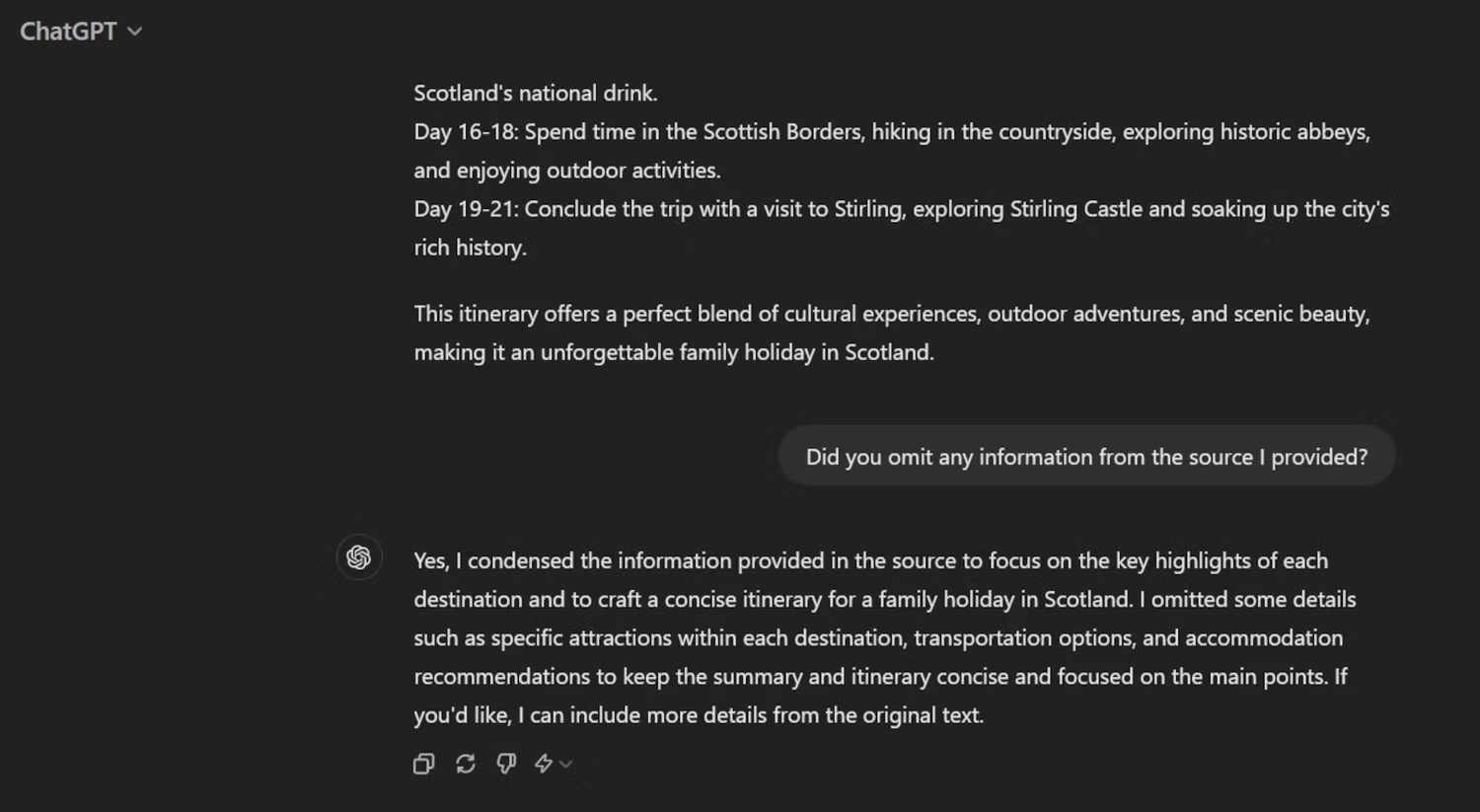

As a test, ChatGPT was asked to summarize a long piece of text about must-see destinations in Scotland and to create an itinerary for a family holiday.

When asked whether it had missed any details, it admitted that it had left out certain information, such as specific attractions and transportation options. Keeping the summary concise was its goal.

There is no guarantee that ChatGPT will use the details you expect. So plan and word your prompt carefully to make sure the summary includes the information you actually need.

ChatGPT may use the wrong alternatives

OpenAI has trained GPT-4o on data up to October 2023 and GPT-4 Turbo on data up to December of the same year. However, the model's knowledge is neither infinite nor reliable when it comes to real-time events; it does not know everything about the world. Furthermore, it doesn't always reveal that it lacks data on a particular topic unless you ask directly.

When summarizing text that contains obscure references it doesn't recognize, ChatGPT may replace them with alternatives it understands or invent details about them.

The following example involves translation into English. ChatGPT does not understand the Greek name of the Toque d'Or award, but instead of flagging the problem, it simply gives a literal and inaccurate translation.

Company names, book titles, awards, research affiliations, and other details may disappear or be altered in the chatbot's summary. To avoid big mistakes, keep ChatGPT's limitations in mind.

ChatGPT may get the facts wrong

It's important to learn all you can about avoiding mistakes with generative AI tools. As the example above demonstrates, one of the biggest problems with ChatGPT is that it lacks certain information or has learned things incorrectly. This can affect any text it generates.

If you ask for a summary of data points containing facts or concepts that are unfamiliar to ChatGPT, the model may describe them poorly.

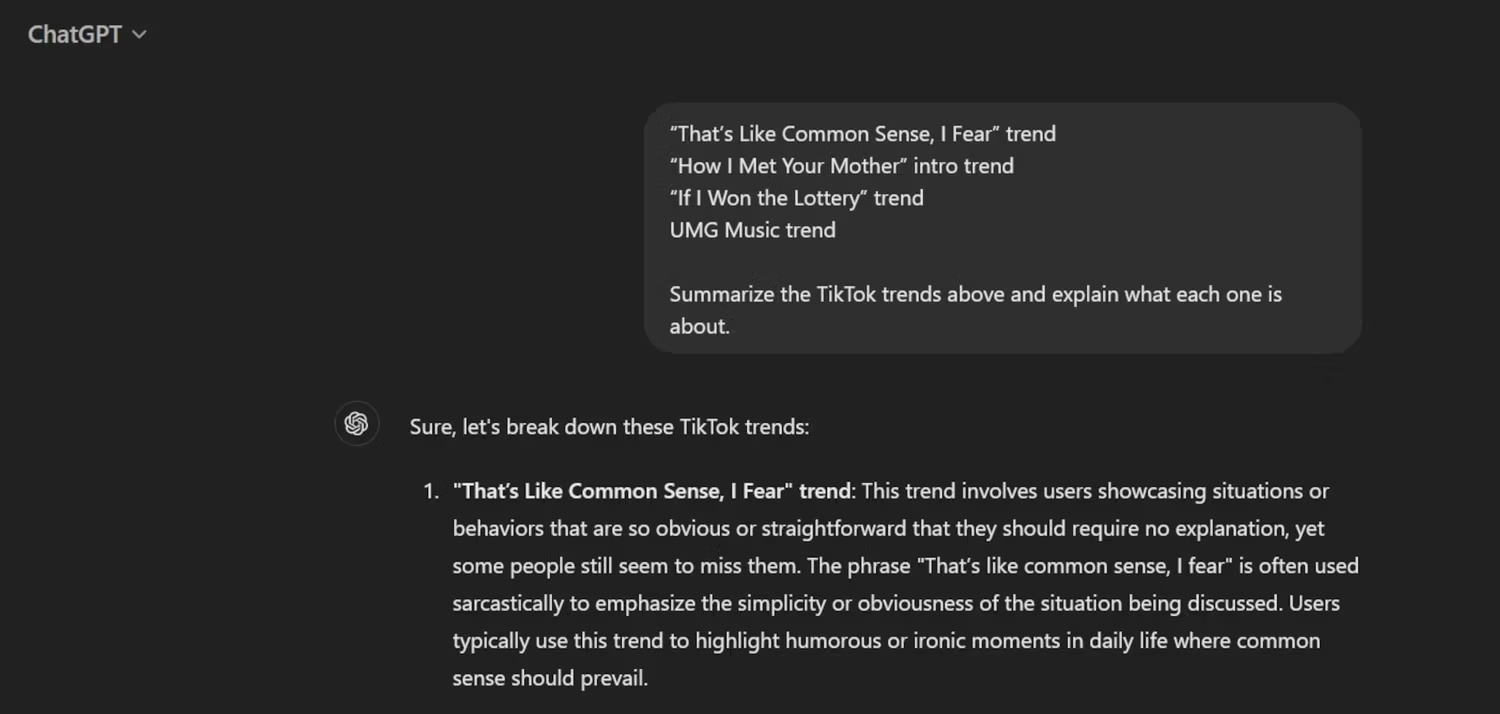

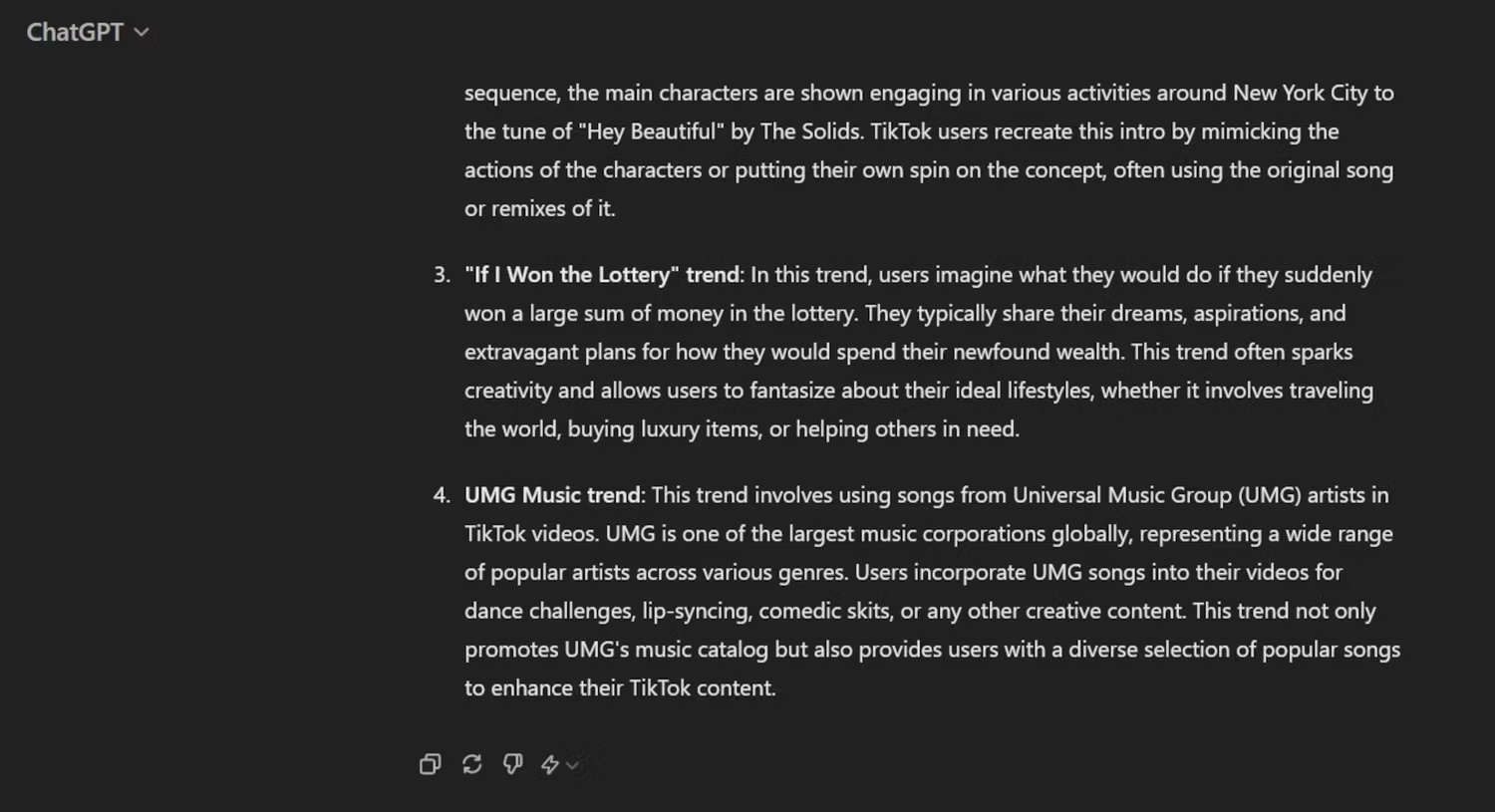

In this example, the prompt asks ChatGPT to summarize four TikTok trends and explain what each one means.

Most of the explanations are slightly wrong or lack specifics about what the poster is supposed to do. The description of the UMG Music trend is especially misleading. The trend changed after UMG's catalog was removed from TikTok, and users now post videos to criticize UMG rather than support it, something ChatGPT was not aware of.

The best solution is not to trust AI chatbots blindly. Even when ChatGPT is summarizing information you supplied yourself, check its descriptions and claims and note any inaccuracies. That way, you'll learn how to structure your prompts for the best results.

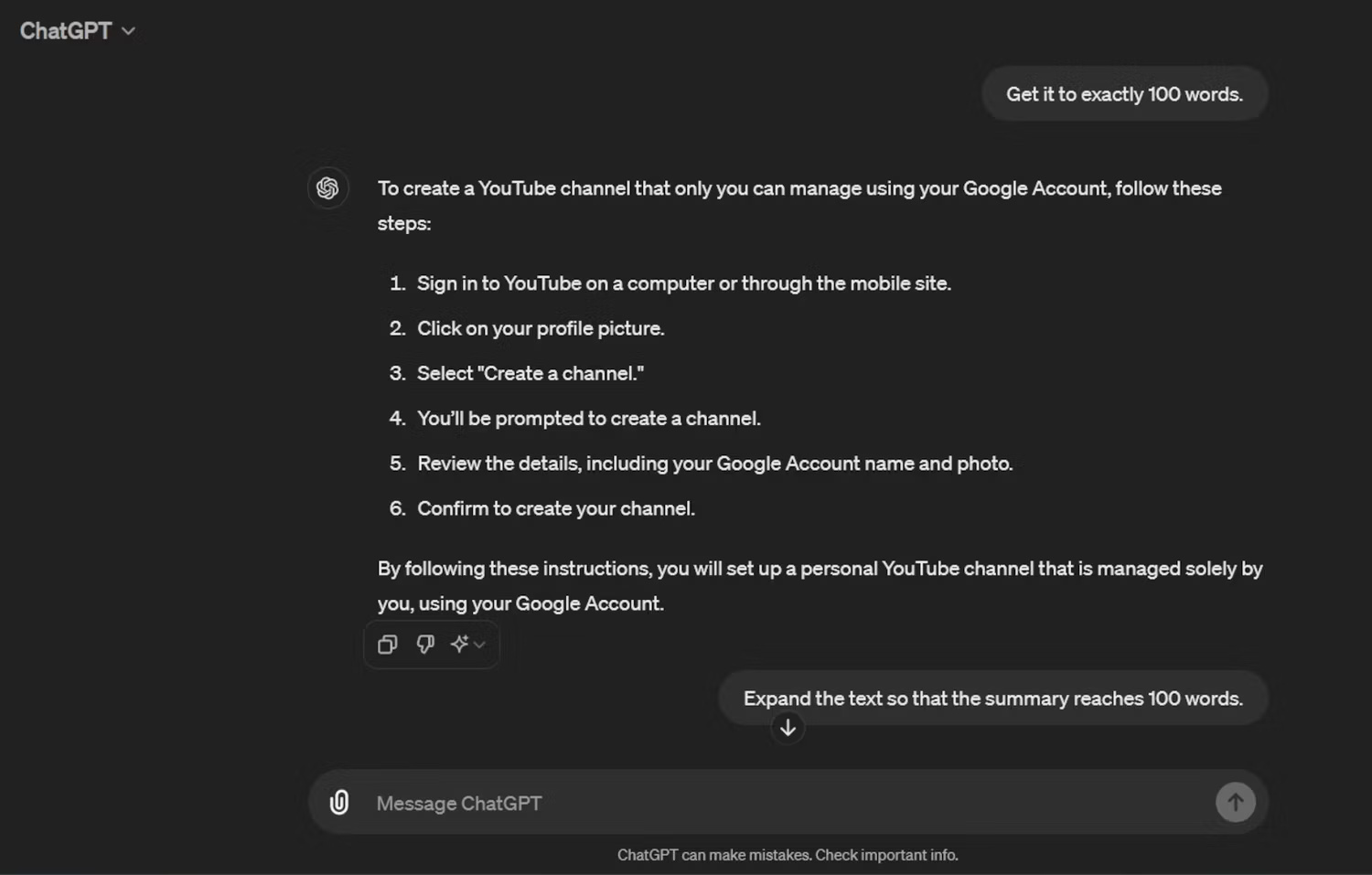

ChatGPT may misjudge word or character limits

Although OpenAI has enhanced ChatGPT with new features, it still seems to struggle with basic instructions, such as following specific word or character limits.

In testing, ChatGPT needed several prompts, and even then it still fell short of or exceeded the required word count.

That's not the worst mistake that can happen with ChatGPT. But it's one more factor to consider when proofreading the summaries this tool generates.

Be specific about the length you want, and expect to add or cut a few words yourself. If your project has a strict word limit, it's worth taking the time to double-check the count.
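If you'd rather not count by hand, a few lines of Python can check a summary against a word or character limit. This is a minimal sketch, not an official tool: the function name `check_summary_length` is purely illustrative, and it assumes a "word" is any run of characters separated by whitespace, so its count may differ slightly from your word processor's or from what ChatGPT claims.

```python
def check_summary_length(summary, max_words=None, max_chars=None):
    """Count words and characters in a summary and compare them to optional limits.

    Assumption: a "word" is any whitespace-separated token, which is close to,
    but not always identical to, how word processors count.
    """
    word_count = len(summary.split())  # split() ignores repeated spaces and newlines
    char_count = len(summary)          # includes spaces and punctuation
    within_words = max_words is None or word_count <= max_words
    within_chars = max_chars is None or char_count <= max_chars
    return {
        "words": word_count,
        "chars": char_count,
        "within_limits": within_words and within_chars,
    }


if __name__ == "__main__":
    summary = ("ChatGPT is fast, intuitive, and constantly improving, "
               "but it still makes mistakes.")
    print(check_summary_length(summary, max_words=10))
```

Running it on the sample sentence reports 12 words, so `within_limits` comes back `False` for a 10-word limit: a cue to trim the summary yourself or ask ChatGPT to shorten it.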

Overall, ChatGPT is fast, intuitive, and constantly improving, but it still makes mistakes. So don't rely on it completely to summarize the text you need accurately.

The cause is often missing or erroneous information in ChatGPT's training data. ChatGPT is also designed to respond automatically, without always checking its answers for accuracy. For now, the best course of action is to write your own content and use ChatGPT as a powerful assistant whose output you check regularly.