Here's How Hackers Target Everyday Users With AI Chatbots!

Hackers are using Generative AI to target people more efficiently and cheaply than ever before. While you may be confident in your ability to detect malicious attacks, now is a great time to learn about the latest tactics they use to exploit people.

How do hackers use AI to choose targets?

Hackers often scam people through stolen social media identities. They either create fake profiles that mimic real users or hijack real accounts, then exploit the trust attached to those identities to manipulate victims.

AI-powered bots can help collect photos, bios, and social media posts to create convincing copycat accounts. Once the scammer creates the fake profile, they send friend requests to the victim's contacts, tricking them into thinking they're interacting with someone they know.

A hijacked real account opens even more doors for effective, personalized, and targeted AI scams. AI-powered bots can map the owner's close relationships, dig up private information, and analyze past conversations. From there, AI chatbots can take over and chat with the owner's contacts while mimicking the owner's communication style. They then push scams such as phishing links, fake emergencies, requests for money, or requests for sensitive information.

For example, you've probably seen a friend's Facebook account get hacked and used to post phishing links on their own Facebook feed. That's just one part of an account takeover. Once the account is compromised, the scammer can use AI tools to message everyone in its contact list, hoping to lure in more victims.

Because of these developments, many web services use complex, hard-to-solve CAPTCHAs, mandatory two-factor authentication, and more sensitive behavioral tracking systems. But even with these additional defenses, humans remain the biggest vulnerability.

Types of AI Scams You Should Know About

Cybercriminals use multimodal AI, which can generate text, images, audio, and video, to create bots or impersonate well-known individuals or groups. While many AI-powered scams rely on conventional social engineering techniques, AI makes them more effective and harder to detect.

Phishing and Smishing Attacks with AI

Phishing and smishing (SMS phishing) attacks have long been a major form of fraud. These attacks imitate well-known companies, government agencies, and online services to steal your login information and gain access to your accounts. Although common, generic phishing and smishing messages are usually easy to detect, so scammers have to send them in huge volumes to catch even a few victims.

Spear phishing attacks, on the other hand, are much more effective. They require the attacker to research the target in advance and craft highly personalized emails and text messages. However, spear phishing attempts rarely appear in our inboxes because they take significant effort to pull off. AI changes that calculation: chatbots can automate much of the research and writing, making personalized attacks cheap enough to send at scale.

Romance fraud

Romance scams use emotional manipulation to gain trust and affection before exploiting the victim. Unlike traditional online scams, where social engineering ends after you hand over your login credentials, romance scams require the scammer to spend weeks, months, or even years building a relationship. Because of the time investment, cybercriminals can only target a few people at a time, making these scams even rarer than manual spear phishing attacks.

However, scammers today can use AI chatbots to handle the most time-consuming parts of romance scams: chatting, texting, sending photos and videos, and even making phone calls. Because targets are often emotionally vulnerable, they may subconsciously find AI-generated conversations novel or even seductive.

AI-Enhanced Customer Support Fraud

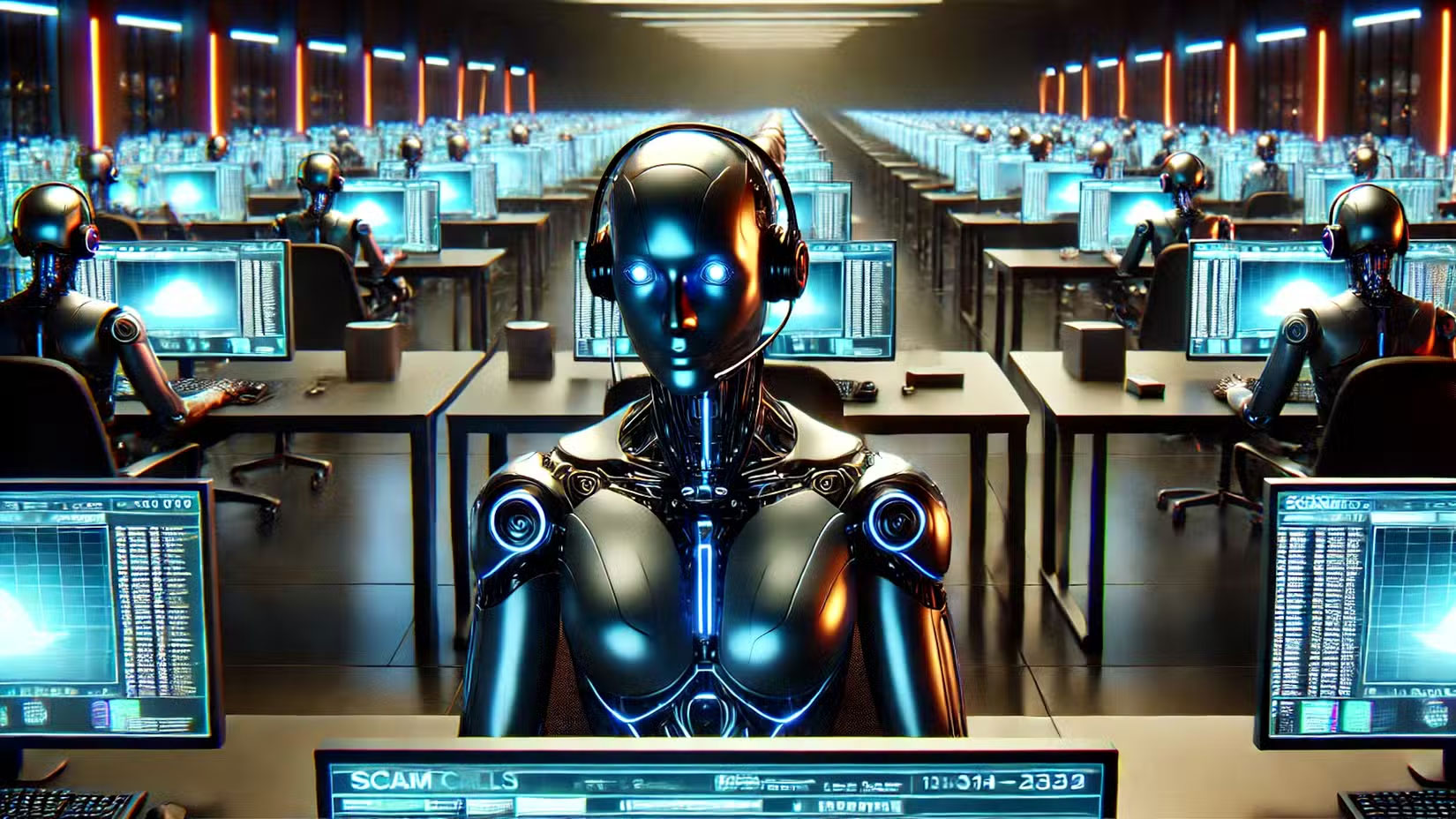

Customer support scams exploit people's trust in big brands by impersonating help desks. They work by sending fake alerts, pop-ups, or emails claiming that your account has been locked, needs verification, or has an urgent security issue. Traditionally, scammers had to handle every conversation with a victim manually, but AI chatbots can now hold these conversations automatically, letting a single scammer work many victims at once.

These scams can also run in the other direction: scammers can use AI agents to contact banks, government programs, and other important services while posing as the target, in order to obtain the target's data or even reset their credentials.

Automated smear and disinformation campaigns

Hackers are now using AI chatbots to spread disinformation at an unprecedented scale. These bots create and share false stories on social media, flooding news feeds, community forums, and comment sections with fabricated posts and comments. Unlike traditional disinformation campaigns that require manual effort, AI agents can automate the entire process, so fake news spreads faster and more convincingly.

AI chatbots offer convenience, but they also hand hackers advanced phishing tools. Remember, though, that many AI-powered scams still follow the same patterns as traditional scams, so the familiar warning signs still apply; AI simply makes these scams more polished and more widespread. By staying informed and verifying unexpected online interactions, you can protect yourself from these evolving threats.
