AI models use aerial and ground data to navigate areas that are difficult to observe

Can artificial intelligence models navigate through areas (streets) on which they have not been trained, or for which they have not been given enough training data? That is the question scientists on the DeepMind artificial intelligence team set out to answer. After years of incubation, they report success in a study called 'Cross-View Policy Learning for Street Navigation', recently published on Arxiv.org.

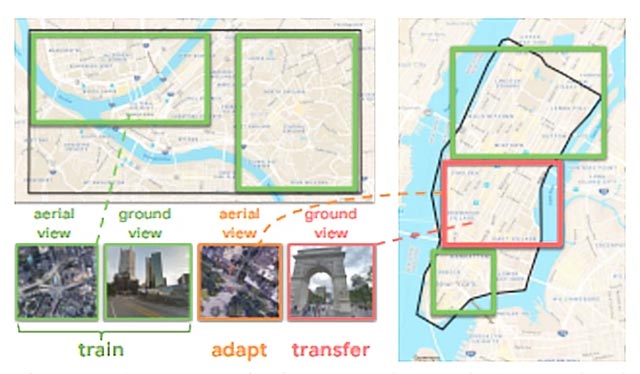

In this study, DeepMind scientists describe training an AI policy on a wide range of data (mostly top-down aerial images) and then transferring it to different areas of a city, so that the model can cope with places it has observed less thoroughly. The researchers believe that such an approach will result in better generalization.

In essence, this study is inspired by how quickly people can adapt to the layout and basic structure of a new city by studying its map repeatedly.

'The ability to navigate from visual observations in unfamiliar environments is a core component of research into how AI models learn to navigate. The ability of AI models to navigate streets in the absence of training data is still relatively limited, and relying on simulation models is not a viable long-term solution. Our core idea is to pair the ground view with the aerial view and learn a general policy that allows switching between views', a representative of the research team said.

More specifically, the researchers first collect aerial maps of the area they plan to navigate, paired with street-level observations at the corresponding geographic coordinates. Next, they carry out a three-part transfer task: training on data from the source area, adapting using aerial observation data, and finally transferring to the target area using observations from the ground.

The team's machine learning system contains three separate modules, including:

- A convolutional module, responsible for visual perception.

- A long short-term memory (LSTM) module, responsible for capturing location-specific features.

- A policy recurrent neural module, which produces a distribution over actions.
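To make the last module's role concrete, here is a minimal sketch of how a policy output can be read as a probability distribution over actions and sampled from. It is not DeepMind's implementation: the action names, the `softmax` and `sample_action` helpers, and the example scores are all assumptions made for illustration, and the real modules are neural networks rather than hand-written scores.

```python
import math
import random

# Hypothetical action names (assumption); the article only says there are five actions.
ACTIONS = ["forward", "left_22.5", "right_22.5", "left_67.5", "right_67.5"]


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(logits, rng=random):
    """Sample one action name according to the softmax probabilities."""
    probs = softmax(logits)
    return rng.choices(ACTIONS, weights=probs, k=1)[0]


if __name__ == "__main__":
    scores = [2.0, 1.0, 0.0, 0.0, -1.0]  # made-up scores, one per action
    print(softmax(scores))
    print(sample_action(scores))
```

Sampling from the distribution, rather than always taking the highest-scoring action, is a common way for a learning agent to keep exploring.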

This machine learning model has been deployed in StreetAir, a multi-view outdoor street environment built on top of StreetLearn. (StreetLearn is an interactive, first-person collection of panoramic photos extracted from Google Street View and Google Maps.)

In StreetAir and StreetLearn, aerial images covering parts of New York City (Downtown NYC and Midtown NYC) and Pittsburgh (the Allegheny area and the Carnegie Mellon University campus) are arranged so that, for each latitude and longitude coordinate, the environment returns an 84 x 84 aerial image, the same size as the image from the ground.
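The coordinate-to-image lookup described above could be sketched roughly as follows. This is only an illustration under stated assumptions: the large aerial map is a plain list of pixel rows, coordinates map linearly onto pixels (reasonable only for small areas), and the helper names `latlng_to_pixel` and `crop_centered` are hypothetical, not part of StreetAir or StreetLearn.

```python
def latlng_to_pixel(lat, lng, bounds, width, height):
    """Linearly map (lat, lng) to (row, col) in an image covering `bounds`.

    bounds = (lat_min, lat_max, lng_min, lng_max); row 0 is the northern edge.
    """
    lat_min, lat_max, lng_min, lng_max = bounds
    col = round((lng - lng_min) / (lng_max - lng_min) * (width - 1))
    row = round((lat_max - lat) / (lat_max - lat_min) * (height - 1))
    return row, col


def crop_centered(image, row, col, size=84):
    """Return a size x size crop centered near (row, col), clamped to the image.

    Assumes the image is at least size x size pixels.
    """
    height, width = len(image), len(image[0])
    top = min(max(row - size // 2, 0), height - size)
    left = min(max(col - size // 2, 0), width - size)
    return [r[left:left + size] for r in image[top:top + size]]


if __name__ == "__main__":
    # Toy "aerial map": each pixel just stores its own (row, col) position.
    aerial = [[(r, c) for c in range(300)] for r in range(200)]
    bounds = (0.0, 2.0, 0.0, 3.0)  # made-up coordinate box
    row, col = latlng_to_pixel(1.0, 1.5, bounds, width=300, height=200)
    view = crop_centered(aerial, row, col)
    print(len(view), len(view[0]))
```

Clamping the crop to the map's edges keeps every returned view at the fixed 84 x 84 size, at the cost of the view no longer being exactly centered near the borders.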

After training, the AI system is tasked with learning to localize itself and navigate a graph of Street View panoramas, given the latitude and longitude coordinates of the destination.

The panoramic images cover areas 2 to 5 km on a side and are spaced about 10 m apart. On each turn, the agents (the AI-controlled vehicles) may perform one of five actions: move forward, turn left or right by 22.5 degrees, or turn left or right by 67.5 degrees.
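The five-action scheme can be illustrated with a small sketch. It makes several simplifying assumptions that are not from the study: the agent moves on a flat plane instead of a graph of panoramas, a forward move always covers 10 m, and the constant names and the `step` function are hypothetical.

```python
import math

# Hypothetical identifiers (assumption) for the five actions listed above.
FORWARD, LEFT_SMALL, RIGHT_SMALL, LEFT_LARGE, RIGHT_LARGE = range(5)

# Turn angles in degrees; negative means a left (counter-clockwise) turn.
TURN_DEGREES = {
    LEFT_SMALL: -22.5,
    RIGHT_SMALL: 22.5,
    LEFT_LARGE: -67.5,
    RIGHT_LARGE: 67.5,
}


def step(state, action, step_m=10.0):
    """Apply one action to state = (x_m, y_m, heading_deg).

    Heading 0 points north (+y) and increases clockwise.
    """
    x, y, heading = state
    if action == FORWARD:
        rad = math.radians(heading)
        return (x + step_m * math.sin(rad), y + step_m * math.cos(rad), heading)
    return (x, y, (heading + TURN_DEGREES[action]) % 360.0)


if __name__ == "__main__":
    state = (0.0, 0.0, 0.0)
    for action in (FORWARD, RIGHT_SMALL, RIGHT_LARGE, FORWARD):
        state = step(state, action)
    print(state)
```

Note that one small turn plus one large turn adds up to 90 degrees, so the two angle sizes combine neatly at right-angle intersections.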

When they come within 100-200 meters of the target location, the agents receive 'rewards', which encourages them to identify and pass through intersections quickly and accurately.
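A distance-based reward of this kind could look roughly like the sketch below. The article gives only the 100-200 meter range, so the shape used here (full reward inside 100 m, fading linearly to zero at 200 m), the reward scale, and the function names are all assumptions for illustration, not the study's actual reward function.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1, lng1, lat2, lng2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lng2 - lng1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def goal_reward(distance_m, inner_m=100.0, outer_m=200.0, max_reward=1.0):
    """Assumed shaping: full reward within inner_m, linear fade to 0 at outer_m."""
    if distance_m <= inner_m:
        return max_reward
    if distance_m >= outer_m:
        return 0.0
    return max_reward * (outer_m - distance_m) / (outer_m - inner_m)


if __name__ == "__main__":
    # Two made-up points 0.001 degrees of latitude apart.
    d = haversine_m(40.7500, -73.9900, 40.7510, -73.9900)
    print(round(d, 1), goal_reward(d))
```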

In the experiments, agents that exploited aerial imagery to adapt to the new environment achieved a reward of 190 at 100 million steps and 280 at 200 million steps, both significantly higher than agents using only ground observation data (50 at 100 million steps and 200 at 200 million steps). According to the researchers, this result shows that their approach significantly improves the agents' ability to acquire knowledge about multiple areas of the target city efficiently.
