Learn about Edge Computing: computing at the edge of the network

Recently, the concept of Edge Computing has left many people wondering what kind of technology it is. Edge computing is computing at the "edge" (boundary) of the network, so what does it have to do with cloud computing, and what is it in essence?

Edge computing has become one of the biggest trends in IoT in recent years. Major cloud providers such as AWS, Rackspace, Google and Microsoft are increasingly interested in edge solutions to reduce the huge costs of centralized computing. Telecommunications companies such as AT&T see the technology as a natural complement to their 5G initiatives. Device makers such as Apple, Samsung and LG are also preparing for this change by making their devices edge-ready. This trend is set to keep growing.

The edge

The "edge" refers to computing infrastructure located close to where data originates. It is an IT architecture in which data is processed at the periphery of the network, as close as possible to the original data source.

What is edge computing?

To understand edge computing, consider this: when a very large number of devices are connected to the Internet, the extra traffic makes running a central data center very expensive. Edge computing was developed as a new approach in which data analysis takes place close to the sources of information rather than at a central location. The key criterion is that data is used near its origin, called the "edge". Wikipedia gives a fairly accurate and comprehensive definition:

"Edge computing is a distributed computing model in which computation is largely or completely performed on distributed device nodes known as smart devices or edge devices, instead of primarily taking place in a centralized cloud environment. The 'edge' refers to the geographic distribution of computing nodes in the network, such as Internet of Things devices, which sit at the 'edge' of an enterprise, metropolitan or other network. The motivation is to bring server resources, data analysis and artificial intelligence closer to data collection sources and cyber-physical systems such as smart sensors and actuators. Edge computing is seen as important for realizing physical computing, smart cities, ubiquitous computing, multimedia applications such as augmented reality and cloud gaming, and the Internet of Things."

Simply put, edge computing optimizes a cloud computing system by performing data processing at the edge of the network, as close as possible to the data source.

In IoT, edge data can come from the temperature sensor in a thermostat, a machine actuator or a Bluetooth beacon. Applications on a smartphone also qualify as edge applications when they process and forward information in real time.

Why is edge computing necessary?

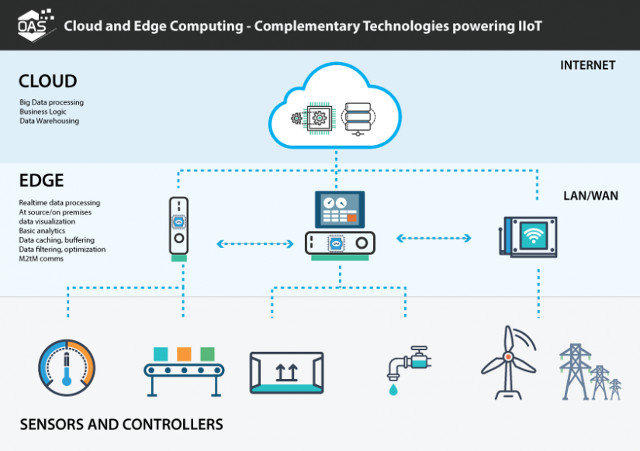

In IoT, many applications are time-sensitive: even a small delay can lead to very bad outcomes. Transferring large amounts of data is also expensive and taxes network resources. Edge computing lets you process data close to its source and send only the relevant data over the network to an intermediate processor.
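
A minimal Python sketch of "process close to the source, send only what is relevant": the device reduces each window of raw samples to a small summary and forwards only the summary. The window size, the `Summary` fields and the `summarize_windows` helper are hypothetical choices for illustration, not part of any particular IoT platform.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, List


@dataclass(frozen=True)
class Summary:
    """Compact record sent upstream in place of the raw samples it covers."""
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_windows(samples: Iterable[float], window: int) -> List[Summary]:
    """Reduce raw samples to one Summary per full window (hypothetical helper)."""
    if window <= 0:
        raise ValueError("window must be positive")
    summaries: List[Summary] = []
    buffer: List[float] = []
    for value in samples:
        buffer.append(value)
        if len(buffer) == window:
            summaries.append(Summary(len(buffer), min(buffer), max(buffer), mean(buffer)))
            buffer.clear()
    # A trailing partial window stays on the device until more samples arrive.
    return summaries


raw = [20.0, 21.0, 22.0, 23.0, 30.0, 31.0, 32.0, 33.0, 40.0]
summaries = summarize_windows(raw, window=4)
print(len(raw), "raw samples ->", len(summaries), "summaries")  # 9 raw samples -> 2 summaries
print(summaries[0])  # Summary(count=4, minimum=20.0, maximum=23.0, average=21.5)
```

Nine raw readings become two summaries here; the ninth reading waits for its window to fill. A real device would also need a policy for flushing partial windows, which this sketch leaves out.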

Think about self-driving cars: if data had to travel back and forth to the cloud for every decision, an accident could happen. Or consider oil rigs in poorly connected locations, where the cost of sending data upstream to the cloud is huge. It is better to deploy a small-form-factor device at the edge that manages interactions with the cloud.

For a more practical example, a smart refrigerator does not need to constantly send its internal temperature to a cloud analytics dashboard. Instead, it can be configured to send data only when the temperature changes beyond a certain threshold, or when the dashboard requests it. Similarly, an IoT security camera only needs to send footage back to your device when it detects motion or when you explicitly request it.
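
The refrigerator's "send only on significant change" rule is a threshold (deadband) filter. Below is a minimal Python sketch under stated assumptions: the `ThresholdReporter` class, the 0.5-degree threshold and the in-memory `sent` list standing in for the uplink are all hypothetical; `on_request` models the "send when the dashboard asks" case.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ThresholdReporter:
    """Forward a reading upstream only when it moves far enough from the last value sent."""
    threshold: float                     # hypothetical deadband, e.g. 0.5 degrees C
    last_sent: Optional[float] = None
    latest: Optional[float] = None
    sent: List[float] = field(default_factory=list)  # stand-in for the uplink to the cloud

    def observe(self, reading: float) -> bool:
        self.latest = reading
        # Always send the first reading so the dashboard has a baseline.
        if self.last_sent is None or abs(reading - self.last_sent) >= self.threshold:
            self.sent.append(reading)
            self.last_sent = reading
            return True
        return False  # change too small: handled locally, nothing transmitted

    def on_request(self) -> Optional[float]:
        """Answer an explicit request from the dashboard with the latest local reading."""
        return self.latest


reporter = ThresholdReporter(threshold=0.5)
for r in [4.0, 4.1, 4.2, 4.6, 4.7, 5.3, 5.2]:
    reporter.observe(r)
print(reporter.sent)  # [4.0, 4.6, 5.3]
```

Seven readings produce three transmissions; the small fluctuations never leave the device, yet the dashboard can still pull the current value on demand.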

The edge computing approach reduces the latency, lag, image freezing and other side effects of transporting data from a remote cloud. Because it contrasts so sharply with cloud computing, some even regard edge applications as "cloud killers". By keeping data analysis decentralized, edge computing also helps address regulatory compliance and privacy concerns.

In summary, edge computing lets you decide where computation happens in order to optimize processing:

- Time-sensitive data can be processed at its origin by a local processor (a device with its own computing power).

- Intermediate servers located geographically close to the source can process data (this assumes some intermediate delay is acceptable; real-time decisions should still be made as close to the origin as possible).

- Cloud servers can handle less time-sensitive data or store data long term. In IoT, this typically shows up as dashboards.

- Edge application services significantly reduce the amount of data that must be moved, the resulting traffic, and the distance the data travels. This lowers transmission costs, reduces latency and improves quality of service.

- Edge computing removes many bottlenecks and potential points of failure by reducing dependence on the core computing environment. Security can also improve, because encrypted data is inspected as it passes through firewalls and other protection points near the source, where viruses, compromised data and attackers can be caught early.

- Edge deployments scale well because CPU processing capacity can be added where and when it is needed, saving the cost of transferring data in real time.
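
The three-tier split above (device, nearby server, cloud) can be sketched as a placement rule keyed on each task's deadline. This is a minimal Python illustration, not a standard scheduler: the `Tier` names, the `place` function and the latency figures in `ASSUMED_LATENCY_MS` are hypothetical assumptions, and real values depend entirely on the network.

```python
from enum import Enum


class Tier(Enum):
    DEVICE = "local processor on the device"
    EDGE_SERVER = "nearby intermediate server"
    CLOUD = "central cloud"


# Hypothetical round-trip latencies in milliseconds; real figures depend on the network.
ASSUMED_LATENCY_MS = {Tier.DEVICE: 1, Tier.EDGE_SERVER: 20, Tier.CLOUD: 150}


def place(deadline_ms: float, long_term_storage: bool = False) -> Tier:
    """Pick the most central tier whose assumed latency still meets the deadline."""
    if long_term_storage:
        return Tier.CLOUD  # long-term data and dashboards live in the cloud
    for tier in (Tier.CLOUD, Tier.EDGE_SERVER):
        if ASSUMED_LATENCY_MS[tier] <= deadline_ms:
            return tier
    return Tier.DEVICE  # tighter deadlines are handled at the origin


for deadline in (5, 50, 1000):
    print(f"{deadline} ms -> {place(deadline).name}")
# 5 ms -> DEVICE
# 50 ms -> EDGE_SERVER
# 1000 ms -> CLOUD
```

Trying the cloud first mirrors the list: central resources for work that can wait, the intermediate server for moderate delays, and the device itself for everything tighter.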

Applications of edge computing

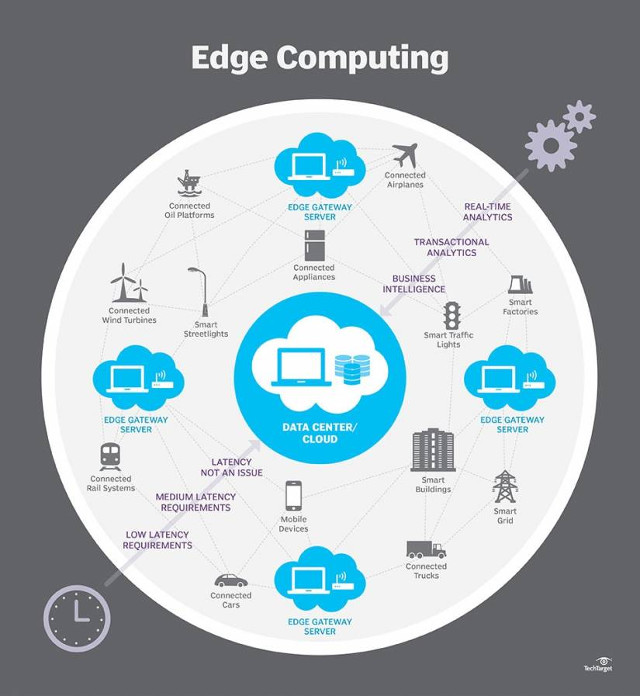

Edge computing is a breakthrough technology that, together with 5G data rates, could become a world-changing tool in IoT. It has a natural role in smart cities, health care, storage solutions, food processing and other industrial-scale applications.

Specifically, in the Industrial IoT (IIoT), edge computing is applied in wind turbines, magnetic resonance scanners, industrial controllers such as SCADA systems, industrial automation machines, smart grid systems and intelligent traffic light systems. In consumer IoT, examples include vehicles, mobile devices, traffic lights and household appliances.

Edge computing also has great influence in the consumer sector. Many leading companies in this field are shifting from a cloud-focused business model toward the edge. The Samsung ARTIK platform is becoming popular in commercial consumer devices, and Google offers Cloud IoT Edge, which includes fully managed edge services.

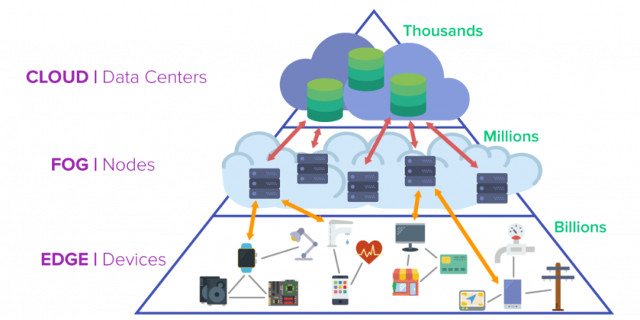

The difference between edge computing and fog computing

Between the edge and the cloud sits the fog layer, which acts as a bridge connecting edge devices to the cloud data center.

Fog computing pushes intelligence down to the local area network level of the network architecture, processing data in a fog node or IoT gateway.

Edge computing pushes the intelligence, processing power and communication capabilities of an edge gateway or appliance directly into devices such as PACs (programmable automation controllers).

A common question is what exactly distinguishes edge computing from fog computing. The two terms are often used interchangeably, but there are a few small differences between them.

Edge computing takes place on the device itself and has a limited set of functions, acting as a path between the device and the cloud. Fog computing takes place at a LAN node that is fully independent of the cloud. In other words, fog computing always uses edge computing, but edge computing does not necessarily use fog computing.

In addition to analyzing data, fog nodes also handle local storage and connectivity tasks, for example acting as a local base station in a 5G network.
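
The local storage and connectivity role of a fog node can be sketched as a store-and-forward buffer. This is a minimal Python illustration under assumptions: the `FogBuffer` class, its bounded capacity and the simulated `fake_uplink` function are hypothetical, and a real gateway would persist the buffer to disk and retry on a schedule.

```python
from collections import deque
from typing import Callable, Deque, List


class FogBuffer:
    """Hypothetical fog-node buffer: stores readings locally, forwards them when the cloud link is up."""

    def __init__(self, capacity: int, send: Callable[[List[float]], bool]) -> None:
        # `send` stands in for the uplink; it returns True if the cloud accepted the batch.
        self._queue: Deque[float] = deque(maxlen=capacity)  # oldest readings drop when full
        self._send = send

    def store(self, reading: float) -> None:
        self._queue.append(reading)

    def flush(self) -> int:
        """Try to forward everything buffered; keep it locally if the upload fails."""
        if not self._queue:
            return 0
        batch = list(self._queue)
        if self._send(batch):
            self._queue.clear()
            return len(batch)
        return 0

    def __len__(self) -> int:
        return len(self._queue)


link_up = False
uploaded: List[List[float]] = []


def fake_uplink(batch: List[float]) -> bool:
    # Simulated cloud connection for the example.
    if link_up:
        uploaded.append(batch)
    return link_up


node = FogBuffer(capacity=3, send=fake_uplink)
for r in [1.0, 2.0, 3.0, 4.0]:
    node.store(r)
print(node.flush(), len(node))  # 0 3  (link down: nothing sent, newest 3 readings kept)
link_up = True
print(node.flush(), len(node), uploaded)  # 3 0 [[2.0, 3.0, 4.0]]
```

The bounded `deque` keeps memory use fixed on a small gateway at the cost of dropping the oldest readings during a long outage; that trade-off is a design assumption of this sketch.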

Device relationship management (DRM)

For managing edge equipment, DRM refers to monitoring and maintaining complex, intelligent, interconnected devices over the Internet. DRM is designed specifically to communicate with the microprocessors and local software in IoT devices.

Device Relationship Management (DRM) is enterprise software that enables the monitoring, management and servicing of intelligent devices over the Internet.
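
The monitoring half of DRM often comes down to tracking when each device last checked in. A minimal Python sketch, assuming a simple heartbeat scheme: the `DeviceRegistry` class, the device IDs and the 60-second timeout are hypothetical, and timestamps are passed in explicitly so the example is deterministic (a real system would read a clock such as `time.monotonic()`).

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DeviceRegistry:
    """Hypothetical DRM-style registry that tracks when each device last reported in."""
    timeout_s: float
    last_seen: Dict[str, float] = field(default_factory=dict)

    def heartbeat(self, device_id: str, now: float) -> None:
        # Record that the device checked in at time `now` (seconds).
        self.last_seen[device_id] = now

    def offline(self, now: float) -> List[str]:
        """Devices whose last heartbeat is older than the timeout, sorted by id."""
        return sorted(d for d, t in self.last_seen.items() if now - t > self.timeout_s)


registry = DeviceRegistry(timeout_s=60)
registry.heartbeat("thermostat-1", now=0)
registry.heartbeat("camera-7", now=30)
registry.heartbeat("thermostat-1", now=70)
print(registry.offline(now=100))  # ['camera-7']
```

The camera last reported 70 seconds before the check and is flagged; the thermostat reported 30 seconds before and is still considered online.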

Edge computing and real time

Remotely deployed sensors and devices need real-time processing. A centralized cloud system is often too slow in this case, especially when decisions must be made in microseconds. This is particularly true for IoT devices in areas with poor connectivity.

However, some real-time processing does require the cloud. For example, data aggregated by weather-monitoring equipment should be sent in real time to supercomputers.

Edge computing represents the direction of future IoT applications. Its importance will grow as consumers demand more reliability, security and privacy when accessing ever larger amounts of data. In fact, it has the potential to make device owners, rather than third parties, the custodians of their own personal information. In 2019, further development in this emerging field will remain a major focus for researchers.
