Scientists develop AI that can turn brain activity into text

Mind reading may sound like something found only in the movies, but it is moving closer to real life: scientists have developed an artificial intelligence that turns brain activity into text.

Although the current system works by reading neural patterns detected while someone is speaking aloud, experts say that in the future it could support communication for patients who cannot speak or type, such as those with locked-in syndrome.

"We have not reached that level yet, but we think this may be the initial foundation for speech technology," said Dr. Joseph Makin, a co-author of the research who works at the University of California.

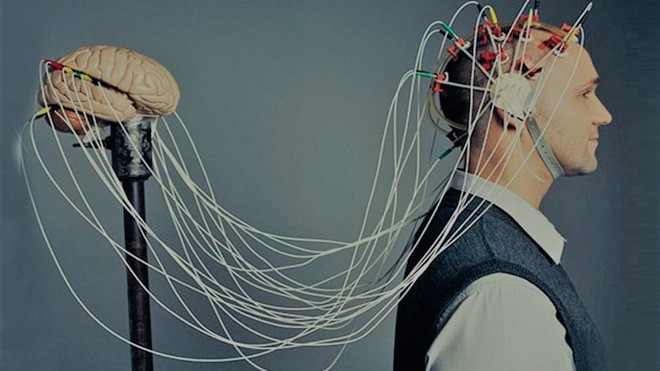

Makin and colleagues reported that they developed their system by recruiting four participants who had electrodes attached to their brains to monitor epilepsy.

Participants were asked to read 50 sentences aloud several times, including "Tina Turner is a pop singer" and "The bandits stole 30 jewels". The team monitored their neural activity while they were speaking.

The resulting data was fed into a machine-learning algorithm, a type of artificial intelligence system, which converted the brain activity data for each spoken sentence into a string of numbers.

To make sure those numbers related only to aspects of speech, the system compared the sounds it predicted from small chunks of the brain activity data with the audio that was actually recorded. The string of numbers was then fed into a second part of the system, which converted it into a sequence of words.

At first, the system produced sentences that made little sense. But as it compared each sequence of words with the sentences the participant had actually read aloud, it improved, learning how the strings of numbers related to words and which words tend to go together.

The team then tested the system again, producing text only from brain activity during speech.

The system is still not perfect. For example, "Those musicians harmonize marvellously" was decoded as "The spinach was a famous singer", and "A roll of wire lay near the wall" became "Will robin wear a yellow lily".
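One common way to put a number on how far a decoded sentence strays from what was actually said is the word error rate: the fewest word substitutions, insertions and deletions needed to turn the decoded text into the spoken sentence, divided by the number of words spoken. The sketch below is only an illustration in Python. The function name `word_error_rate` is hypothetical, and the article does not describe the team's scoring method, so treating this metric as theirs is an assumption.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words.

    Illustrative only: assumes a standard word error rate, which the
    article does not confirm is the metric the researchers used.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # turning i reference words into nothing costs i deletions
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # delete a reference word
                curr[j - 1] + 1,     # insert a hypothesis word
                prev[j - 1] + cost,  # match or substitute
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    spoken = "Those musicians harmonize marvellously"
    decoded = "The spinach was a famous singer"
    print(word_error_rate(spoken, decoded))  # 1.5
```

In that example all four spoken words are wrong and the decoded sentence has two extra words, so the rate is 6 / 4 = 1.5, which shows that a word error rate can exceed 100%. A rate of 0.03, by contrast, would mean roughly three words in every hundred need correcting.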

However, the team found that the accuracy of the new system was much higher than that of previous approaches. Although accuracy varied from person to person, an average of only 3% of each sentence needed to be corrected, which is better than the error rate of about 5% for professional human transcribers. But the team emphasizes that, unlike humans, the algorithm can only handle a small number of sentences.

"If you try beyond the 50 sentences used, the AI will decode much worse," Makin said, adding that the system is probably relying on a combination of learning specific sentences, identifying words from brain activity, and recognizing general patterns in English.

The team also found that training the algorithm on one participant's data meant that less training data was needed from the end user, which could make the training process less burdensome for patients.

Dr. Christian Herff, an expert from Maastricht University who was not involved in the study, said the research was exciting because the system used less than 40 minutes of training data for each participant, and a limited set of sentences, rather than the millions of hours of training required by conventional algorithms.

"By doing so, they have achieved a precision that no one has yet achieved," he said.

However, he noted that the system is not yet useful for many patients with severe disabilities, because it relies on brain activity recorded from people who can speak aloud.

"Of course this is a great study, but those patients could just as well use 'OK Google'," he said. "This is not a thought interpreter, but an interpreter of brain activity during the speech process."

Herff said that people should not be too worried about others being able to read their minds: the electrodes must be attached to the brain, and sentences imagined in the mind are very different from a person's inner thoughts and emotions.

But Dr. Mahnaz Arvaneh, a brain-machine interface specialist at the University of Sheffield, says ethical issues need to be considered now. "We are still very, very far from the moment when machines can read our minds," she said. "But that doesn't mean we shouldn't think about it and plan for it in advance."
